I’m going to flip the script for this post, which I rarely do. I’ll start with where I think the helpful content system can go from here and then provide the why after (for those who want to better understand some background about Google’s punitive algorithm updates).

Here we go. I think Google’s helpful content system can go in a few different directions from here:

- Google could simply lessen the severity of the HCU classifier. It would still be site-wide, but sites would not be dragged down as much. It would get your attention for sure, and might be just bad enough that you would still want to implement big changes from a content and UX standpoint.

- Second, maybe the helpful content system could become more granular (like Penguin did in the past). The classifier might be applied more heavily to pages that are deemed unhelpful versus a site-wide demotion. This would of course give site owners a serious clue about which pieces of content are deemed “unhelpful”, but would also give spammers a clue about where the threshold lies (which could be a reason Google wouldn’t go down this path).

- Third, the helpful content system could remain as-is severity-wise, including being a site-wide signal, but might be better at targeting large-scale sites with pockets of unhelpful content. We know this is a problem across some powerful, larger-scale sites, but they haven’t really been impacted by the various HCUs since a majority of their content would NOT be classified as “unhelpful”. So, the massive drops that many smaller, niche sites witnessed could be seen by larger and more established sites if Google goes down this path.

- Fourth, maybe Google changes the power of the UX part of the HCU. For example, maybe the HCU isn’t as sensitive to aggressive and disruptive advertising moving forward. Or on the flip side, maybe Google increases that severity and it gets even more aggressive. I covered in my post about the September HCU(X) how Google incorporated page experience into the helpful content documentation in April (foreshadowing what we saw with the September HCU). And many sites that had a combination of unhelpful content AND a terrible UX (often from aggressive ads) got pummeled. So moving forward, Google could always change the power of the UX component, which could have a big effect on sites with unhelpful content…

- And finally, Google could just keep the helpful content system running like it is now. I don’t think that will be the case based on all of the flak Google is taking about the September HCU(X), but it’s entirely possible. If Google believes, based on the massive amount of data it evaluates over time, that search quality is better with the helpful content system running as-is, then it won’t change a thing. I do believe we will see changes with the next HCU, but this scenario can’t be ruled out.

Now, if you want to learn why I think these are possible scenarios for the helpful content system moving forward, you should read the rest of my post. I cover the evolution of Google’s punitive algorithm updates, which could help site owners impacted by the various HCUs better understand why they were hit, how the previous systems evolved over time, and what they might expect moving forward.

The September HCU(X), pitchforks, torches, and screaming bloody murder:

Many people have been complaining heavily about Google’s September helpful content update, how badly their sites have been hit, that no sites have recovered from the September HCU(X), and more. I totally understand their frustration, but I also think many site owners need to understand more about the evolution of Google’s previous punitive algorithm updates.

To clarify, there are algorithm updates that look to promote sites and content, and then algorithm updates that look to demote sites and content. The end result of both types of systems could look similar (big drops), but there’s a distinction between the two. And based on how previous punitive algorithm updates have evolved over time, we might have some clues about how Google’s helpful content system might evolve too.

Google’s Helpful Content System:

Before Google released the first helpful content update, I was able to get on a call with Google’s Danny Sullivan to discuss the system, the classifier, and more. Danny explained that the system would evaluate all content across a site, and if it deemed the site had a significant amount of “unhelpful content”, a classifier would be applied to the site. And that classifier could drag down rankings across the entire site (and not just the unhelpful content). So it’s a site-level classifier that could cause huge problems rankings-wise.
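To make the site-level idea concrete, here is a toy sketch in Python. It is not how Google's classifier works (those details are not public). The `Page` type, the `unhelpful` label, and the 50% threshold are all made-up assumptions, used only to show why a site-wide signal drags down helpful pages along with the unhelpful ones.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    unhelpful: bool  # hypothetical label; how Google judges this is not public


def site_is_classified(pages, threshold=0.5):
    """Toy rule: flag the site when the share of unhelpful pages reaches the threshold.

    The threshold is an assumption for illustration, not a known value.
    """
    if not pages:
        return False
    share = sum(p.unhelpful for p in pages) / len(pages)
    return share >= threshold


def demoted_urls(pages, threshold=0.5):
    """Site-level effect: once flagged, every page is demoted, helpful or not."""
    if site_is_classified(pages, threshold):
        return [p.url for p in pages]
    return []


site = [
    Page("/guide-a", unhelpful=True),
    Page("/guide-b", unhelpful=True),
    Page("/guide-c", unhelpful=True),
    Page("/original-research", unhelpful=False),
]

# Three of four pages are labeled unhelpful, so the whole site is flagged,
# including the one helpful page.
print(demoted_urls(site))
```

In this toy model, a more granular system would return only the three unhelpful URLs, which is the difference between a site-wide demotion and a page-level one.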

After hearing about the HCU on that call, I said it could be like Google Panda on steroids. The first few HCUs did not cause widespread drops, but the September HCU(X) sure did (and was Panda-like for many niche publishers). More about Panda soon.

So the helpful content system is a punitive system. It looks to punish and demote. I hate having to say it that way, but that’s the truth.

A definition of punitive:

“inflicting or intended as punishment.”

And that’s exactly what Google’s HCU does. For those not familiar with the drops many site owners are seeing after being impacted, here are just a few. It’s been catastrophic for those sites:

Yes, it’s scary stuff. It’s worth noting that I have over 700 sites documented that were heavily impacted by the various HCUs, and most are what I would consider smaller, niche sites. For example, they range from a few hundred pages indexed to several thousand pages. They are often small sites focused on a very specific topic. Google’s helpful content system has placed the classifier on those sites after evaluating all content on each site and deeming a significant amount of that content “unhelpful” (or written for Search versus humans).

Recovery from the HCU:

From a recovery standpoint, from the beginning Google explained it would not be easy or quick. For example, Google explained on the call with me in August of 2022 that sites would have to prove they have significantly improved their content to be helpful and written for humans in order to recover. And in addition, Google would need to see those changes in place over months before a site could recover. I know there has been some confusion over the length of time for recovery recently, but from the start Google explained “months” and not “weeks”.

In my opinion, this is why nobody can find a single site that has recovered from the September 2023 HCU. It’s just too soon. For example, even if a site made significant changes and had those changes in place by November or December, then we are still only a few months out from the launch of the September HCU(X). That’s just not enough time from my perspective.

There have been some recoveries from previous HCUs, but they are few and far between. When checking many sites that were impacted by the August or December 2022 HCUs, the reality is not many have improved content-wise. So, that could be a big reason why many sites impacted by previous HCUs haven’t recovered. And to be honest, I’m sure Google is happy with that if the site owners built those sites for Search traffic (and monetization) versus helping humans. That’s pretty much why the helpful content system was created. Remember, it’s a punitive algorithm update. It demotes and doesn’t promote.

When Google needs to tackle a big problem in the SERPs, it gets PUNITIVE.

When I’ve spoken to site owners heavily impacted by the HCU, I found some weren’t familiar with previous Google algorithm updates that were also punitive. And after explaining the evolution of those previous punitive algorithm updates, why they were created, how they worked, and more, those site owners seemed to have a better understanding of the helpful content system overall.

Basically, when Google sees a big problem grow over time that’s negatively impacting the quality of the search results, then it can craft algorithm updates that tackle that specific problem. The HCU is no different. Below, I’ll cover a few of the more famous systems that were punitive (punishing sites versus promoting them).

First, a note about algorithm updates that REWARD versus demote:

I do a lot of work with site owners that have been heavily impacted by broad core updates. When a site is negatively impacted by a broad core update, it can be extreme for some sites (with some dropping by 60%+ as the update rolls out). But it’s important to note that broad core updates aim to surface the highest quality and most relevant content. It’s not about punishing sites, it’s about rewarding them (although if you end up on the wrong side of a core update, it can look like a punishment.)

The good news is that if you’ve been heavily impacted by a broad core update due to quality problems, you can definitely recover. For example, if a site has significantly improved quality over time, then it can see improvements during subsequent broad core updates. It’s also worth noting that Google’s Paul Haahr explained to me at the Webmaster Conference in 2019 that Google could always decouple algorithms from broad core updates and run them separately. We’ve seen that happen a number of times over the past several years, but again, sites typically need another core update before they can see recovery.

And then there is Google’s reviews system. That also promotes and doesn’t demote. But again, if you’re on the wrong side of the update, it can look like a heavy punishment. Many companies have reached out to me after getting obliterated by a reviews update. You can read my post about the long-term impact of the reviews system to learn more about how many sites have fared since those updates rolled out. Hint, it’s not pretty…

Also, as of the November 2023 reviews update, the reviews system is now “improved at a regular and ongoing pace”. So, Google will not announce specific reviews updates anymore. That was a big change with the November 2023 reviews update (which might be the last official update we hear about). The move reminded me of when Panda got baked into Google’s core ranking algorithm in 2016, never to be heard of again. I’ll cover more about Panda soon.

Now I’ll cover some previous algorithm updates that were punitive. And if you’ve been impacted by an HCU, certain aspects of each update might sound very familiar.

Google Penguin:

In 2012, Google finally had enough with sites spamming their way to the top of the search results via unnatural links. The gaming of PageRank was insane leading up to 2012 and Google had to do something about it. So it crafted the Penguin algorithm, which heavily demoted sites that were spamming Google via unnatural links. It was a site-level demotion and caused many sites to plummet rankings-wise. Many site owners went out of business after being impacted by Penguin. It was a nasty algorithm update for sure.

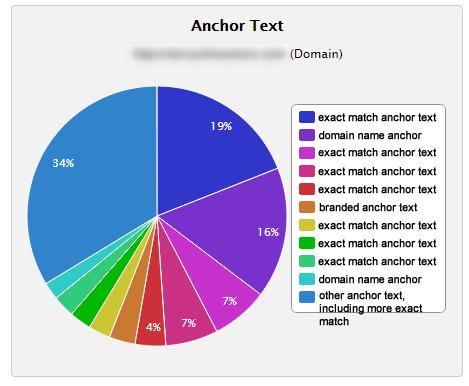

And just like the HCU, drops from Penguin were extreme. Some sites plummeted by 80-90% overnight and were left trying to figure out what to do. You can read my post covering Penguin 1.0 findings after I dug heavily into the sites affected. It didn’t take long to figure out what was being targeted. The link profiles of sites impacted were filled with spammy exact match and rich anchor text links. And when I say filled, I mean FILLED.
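If you want to see what an anchor text distribution looks like in practice, here is a minimal sketch in Python. The sample list is made up for illustration, and the function name `anchor_text_distribution` is my own. In reality you would start from an export out of whatever link analysis tool you use.

```python
from collections import Counter


def anchor_text_distribution(anchors):
    """Return (anchor text, share of all links) pairs, most common first."""
    # Normalize case and whitespace so "Blue Widgets" and "blue widgets " count together.
    normalized = [a.strip().lower() for a in anchors if a.strip()]
    counts = Counter(normalized)
    total = sum(counts.values())
    return [(text, count / total) for text, count in counts.most_common()]


# Made-up sample of inbound-link anchor text, for illustration only.
sample = ["cheap blue widgets"] * 7 + ["Example Store", "https://example.com", "click here"]

for text, share in anchor_text_distribution(sample):
    print(f"{text:<22}{share:.0%}")
```

A natural link profile is usually spread across brand names, URLs, and generic phrases. When one exact match money phrase dominates the way it does in this sample, that is the kind of pattern that stood out on sites hit by Penguin.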

Here is an example of anchor text distribution from a Penguin victim back in the day. It’s completely unnatural:

I received many calls from site owners completely freaking out after getting hit. One of the most memorable calls involved a site owner frantically asking me to check out the situation for him. I quickly asked for the domain name so I could check the link profile. He said, “what do you mean, I have 400 sites you need to check.” Yep, he had 400 one-page websites link spammed to all hell, all ranking at the top of the search results, and many interlinked. He was earning a good amount of money per month from that setup, which dropped to nearly $0 overnight. Penguin 1.0 was a very bad day for his business.

Here is a drop from Penguin 1.0. Again, the drops were extreme just like the HCU:

And here is a drop from Penguin 2.1. Search visibility plummets when the update rolls out:

Recovery-wise, there weren’t many over time. Sounds a lot like the HCU, right? Why weren’t there many recoveries? Well, if most of a site’s links were spam, then it was hard to remove all of those links, and even if you did, you were left with a handful of links that actually counted. The disavow tool wasn’t released until October of 2012, so site owners impacted by Penguin before then were frantically trying to remove spammy links, have them nofollowed, or in a worst-case scenario, remove the pages on their sites receiving those links. It was a complete mess.

So for those complaining about the HCU, you should know Penguin was not much better. It was punitive and punished many site owners for gaming Google’s algorithms. Google didn’t care if those sites recovered quickly. Actually, Penguin updates were often spread so far apart that it was clear Google didn’t care at all. There was nearly a two-year gap between Penguin 3.0 and 4.0. Yes, nearly two years. Also, Penguin did not run continually until December of 2014, so until then you needed another Penguin update to recover. And again, most didn’t recover from being hit.

The entire situation got so bad that Google decided to flip Penguin on its head and just devalue spammy links versus penalizing them. That’s what Penguin 4.0 was all about, and it was an important day in the evolution of Penguin. And soon after, you could see the Penguin suppression released across many sites. It was pretty wild to see. By the way, if I remember correctly, Google’s Gary Illyes was instrumental in the move to Penguin 4.0 (devaluing versus penalizing). I’m not sure he got enough credit for that in my opinion.

Here is a tweet of mine showing search visibility changes based on Penguin 4.0 rolling out:

So Penguin was a nasty and punitive algorithm update that caused many problems for site owners impacted. It put many sites out of business, although it did take a huge bite out of the unnatural links situation. So my guess is that Google saw it as successful. And that move to devaluing versus penalizing has progressed to SpamBrain now handling unnatural links (ignoring junky, spammy links across the web). It’s one of the reasons I’ve been extremely vocal that 99.99% of site owners do not need to disavow links (unless they knowingly set up unnatural links, paid for links, or were part of a link scheme). If not, let SpamBrain do its thing and step away from the disavow tool.

Penguin’s Evolution:

- Punitive algorithm heavily demoting sites that were gaming unnatural links.

- First moved to running continuously after requiring a periodic refresh (which could be spaced out quite a bit, even years).

- Moved to devaluing links versus penalizing them in 2016 with Penguin 4.0 (a huge shift in how Penguin worked).

- Google retired the Penguin system and I assume parts of it were baked into SpamBrain, which now handles unnatural links (by neutralizing them).

Google Panda:

Before Google Penguin hit the scene, Panda was the big algorithm update that everyone was talking about. Launched in February 2011, its goal was to demote sites with a lot of thin and low-quality content. The algorithm was crafted based on a growing problem, which was fueled by content farms. Many sites were pumping out a ton of extremely low-quality and thin content, which ended up ranking well. And beyond just thin content, those pages were often littered with a ton of ads. Or worse, the articles were split across 30 paginated pages that were full of ads.

Google knew it needed to do something. And Panda was born. Here is a quote from the original blog post announcing the algorithm update:

“This update is designed to reduce rankings for low-quality sites—sites which are low-value add for users, copy content from other websites or sites that are just not very useful.”

I was able to jump right into Panda analysis since a new publishing client of mine at the time had 60 sites globally (and ~30 got hit hard by the first Panda update). I was surfacing all types of low-quality and thin content across those sites and was sending findings through at a rapid pace to my client.

Here’s an example of a big Panda hit. This site dropped by about 60% overnight…

Although Panda hits could be huge, the good news was that Panda updates typically rolled out every 6-8 weeks (and you technically had an opportunity to recover with each subsequent update if you had done enough to recover). I helped many companies recover from Panda updates over time, and from across many verticals. So although Panda was a punitive algorithm update, many sites did recover if they implemented the right changes to significantly improve quality over time.

Here is an example of a big Panda recovery, with the site surging after working hard on improving quality over time. So again, recovery was entirely possible.

That’s different from the HCU (at least so far), but again, many of the sites impacted by the HCU were smaller, niche sites. They don’t have a lot of content overall, and in my opinion, many need to completely revamp their content, user experience, cut down on aggressive ads, etc.

Panda was eventually changed in 2013, when it first started rolling out monthly, and slowly, over a ten-day period. That signaled larger changes to come, and that finally happened in 2016 when Panda was baked into Google’s core ranking algorithm, never to be seen again. I’m sure pieces of Panda are now part of Google’s broad core updates, but medieval Panda is not roaming the web anymore (in its pre-2013 form). Also, and this was interesting to learn about, Google’s HJ Kim explained to Barry Schwartz that Panda evolved to Coati at some point (and is part of Google’s core ranking algorithm now). By the way, Danny Goodwin just published a great post looking back on Panda (since we just passed 13 years since it launched).

Panda’s Evolution:

- Punitive algorithm that could heavily demote sites with a lot of low-quality or thin content.

- Moved to rolling out monthly, and slowly, over a 10-day period in 2013.

- Panda was eventually baked into Google’s core ranking algorithm in 2016, never to be seen again.

- Panda evolved to Coati at some point and is still part of Google’s core ranking algorithm (although not what medieval Panda used to be like).

- Google retired the Panda system and handles lower-quality content via other ways (one of which is via the helpful content system).

Circling back to the helpful content system: Where could it go from here?

OK, I started the post with where I thought the helpful content system could go from here, then I covered punitive algorithm updates, and now I’ll circle back to the helpful content system again. Now that you know more about why Google needs to go punitive sometimes, and how it has done that in the past, let’s review the original bullets again.

In my opinion, here are some scenarios covering where the helpful content system can go from here. I am simply providing the bullets from the beginning of this article:

- Google could simply lessen the severity of the HCU classifier. It would still be site-wide, but sites would not be dragged down as much. It would get your attention for sure, and might be just bad enough that you would still want to implement big changes from a content and UX standpoint.

- Second, maybe the helpful content system could become more granular (like Penguin did in the past). The classifier might be applied more heavily to pages that are deemed unhelpful versus a site-wide demotion. This would of course give site owners a serious clue about which pieces of content are deemed “unhelpful”, but would also give spammers a clue about where the threshold lies (which could be a reason Google wouldn’t go down this path).

- Third, the helpful content system could remain as-is severity-wise, including being a site-wide signal, but might be better at targeting large-scale sites with pockets of unhelpful content. We know this is a problem across some powerful, larger-scale sites, but they haven’t really been impacted by the various HCUs since a majority of their content would NOT be classified as “unhelpful”. So, the massive drops that many smaller, niche sites witnessed could be seen by larger and more established sites if Google goes down this path.

- Fourth, maybe Google changes the power of the UX part of the HCU. For example, maybe the HCU isn’t as sensitive to aggressive and disruptive advertising moving forward. Or on the flip side, maybe Google increases that severity and it gets even more aggressive. I covered in my post about the September HCU(X) how Google incorporated page experience into the helpful content documentation in April (foreshadowing what we saw with the September HCU). And many sites that had a combination of unhelpful content AND a terrible UX (often from aggressive ads) got pummeled. So moving forward, Google could always change the power of the UX component, which could have a big effect on sites with unhelpful content…

- And finally, Google could just keep the helpful content system running like it is now. I don’t think that will be the case based on all of the flak Google is taking about the September HCU(X), but it’s entirely possible. If Google believes, based on the massive amount of data it evaluates over time, that search quality is better with the helpful content system running as-is, then it won’t change a thing. I do believe we will see changes with the next HCU, but this scenario can’t be ruled out.

What changes will the next HCU bring? Only Google knows…

We are expecting another helpful content update (HCU) soon, so it will be interesting to see what the impact looks like, how many sites recover, if larger sites are impacted, and more. Again, there are many sites impacted by previous HCUs looking to recover, so many site owners are eagerly anticipating the next update. That’s when they will find out if they have done enough to recover, or if a system refinement enables them to recover. I’ll be watching closely, that’s for sure.

GG