This edited extract is from Digital and Social Media Marketing: A Results-Driven Approach edited by Aleksej Heinze, Gordon Fletcher, Ana Cruz, Alex Fenton ©2024 and is reproduced with permission from Routledge. The extract below was taken from the chapter Using Search Engine Optimisation to Build Trust co-authored with Aleksej Heinze, Senior Professor at KEDGE Business School, France.

The key challenge for SEO is that good rankings in SERPs depend almost entirely on each search engine’s private algorithm for identifying high-quality content and results, and that earning those rankings is a long-term activity.

The initial formula of PageRank (Page et al. 1999) used by Google, which used links pointing to a page to rank its importance, has evolved significantly and is no longer publicly available.
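For illustration only, the link-based idea behind the original PageRank can be sketched in a few lines of Python. This is a simplified textbook version and not Google's current algorithm; the function name `pagerank`, the toy site in `TOY_LINKS` and the damping value of 0.85 are assumptions made for this sketch.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Estimate page importance from links.

    `links` maps each page to the list of pages it links to.
    """
    # Collect every page that appears as a source or a target.
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on equally to the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page site: every page links back to "home".
TOY_LINKS = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home"],
    "contact": ["home"],
}

scores = pagerank(TOY_LINKS)
```

In this toy site the home page receives the highest score because every other page links to it, while the contact page, which nothing links to, receives the lowest.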

All search engines regularly update their algorithms to identify high-quality content that is relevant to a particular search query. Google implements around 500–600 changes to its algorithm each year (Gillespie 2019).

These are product updates, similar to Windows updates. Most of these changes are minor with little impact, but a few critical core updates each year will require careful review for the majority of websites since they can result in major SERP changes.

Search engines are using artificial intelligence to improve their technology to enable them to identify high-quality, relevant content and are constantly testing new ways to present users with relevant content.

The arrival of ChatGPT by OpenAI in 2022 presents a rival type of offering that has shaken the foundations of the traditional search engine business model (Poola 2023).

In such a dynamic environment, it is important to keep up to date with algorithm changes.

This can be done by following the Google Search Status dashboard (Google) and by monitoring SEO-related blog posts and resources, including the Moz algorithm change calendar (Moz).

How Search Engines Work

In essence, a search engine’s crawler, spider, robot or ‘bot’ discovers web page links, and then internally determines if there is value in analysing the links.

Then, the bot automatically retrieves the content behind each link (including more links). This process is called crawling.

Bots may then add the discovered pages to the search engine’s index to be retrieved when a user searches for something.
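The discover, retrieve and index steps described above can be sketched as a small Python loop. This is a minimal illustration over a made-up in-memory site rather than the live web; `TOY_WEB`, `crawl` and the `max_pages` limit are hypothetical names chosen for this example, and real crawlers also handle politeness rules, robots.txt and many other concerns that are left out here.

```python
from collections import deque

# Hypothetical in-memory "web": URL -> (page text, outgoing links).
TOY_WEB = {
    "/": ("welcome to our business school", ["/courses", "/about"]),
    "/courses": ("marketing and seo courses", ["/", "/courses/seo"]),
    "/courses/seo": ("search engine optimisation course", ["/courses"]),
    "/about": ("about the school", ["/", "/missing"]),
}


def crawl(seed, web, max_pages=100):
    """Follow links breadth-first from `seed` and build a simple index."""
    frontier = deque([seed])  # links discovered but not yet retrieved
    seen = {seed}             # links already discovered, to avoid repeats
    index = {}                # URL -> stored page text

    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        page = web.get(url)
        if page is None:
            continue  # broken link: nothing to retrieve or index
        text, links = page
        index[url] = text  # add the retrieved page to the index
        for link in links:
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return index


index = crawl("/", TOY_WEB)
```

Starting from the home page, the sketch indexes the four pages that exist and skips the broken `/missing` link.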

The ranking order in which the links appear in SERPs is calculated by the engine’s algorithm, which examines the relevance of the content to the query.

This relevance is determined by a combination of over 200 factors such as the visible text, keywords, the position and relationship of words, links, synonyms and semantic entities (Garg 2022).
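As a rough illustration of how several factors can be combined into one ranking, the Python sketch below scores pages with just two made-up signals: query terms in the title and query terms in the body text. The weights, field names and the `relevance` and `rank` functions are assumptions for this example only; real search engines use far more signals and do not publish how they are weighted.

```python
def relevance(query, page, weights=None):
    """Score a page by counting query terms in weighted fields."""
    # Assumed weights: a match in the title counts three times a body match.
    weights = weights or {"title": 3.0, "body": 1.0}
    terms = query.lower().split()
    score = 0.0
    for field, weight in weights.items():
        words = page.get(field, "").lower().split()
        score += weight * sum(words.count(term) for term in terms)
    return score


def rank(query, pages):
    """Return page URLs ordered from most to least relevant."""
    return sorted(pages, key=lambda url: relevance(query, pages[url]), reverse=True)


# Hypothetical pages used only to exercise the sketch.
TOY_PAGES = {
    "/a": {"title": "SEO guide", "body": "learn seo basics"},
    "/b": {"title": "Cooking", "body": "seo is mentioned once"},
    "/c": {"title": "Gardening", "body": "plants"},
}

ordered = rank("seo", TOY_PAGES)
```

Here `/a` ranks first because the query term appears in both its title and its body, `/b` follows with a single body match, and `/c` comes last with no match.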

When the user of a search engine types in a query, they are presented with a list of links to content that the engine calculates will satisfy the intent of the query – the list of results is the SERP.

Typically, the list of results that are shown in SERPs includes a mix of paid-for and organic results. Each link includes a short URL, title and description, as well as other options such as thumbnail images, videos and other related internal site links.

Search engines are constantly making changes to SERPs to improve the experience for those searching. For example, Bing includes Bing Chat, allowing responses to be offered by their AI bot.

Google introduced a knowledge graph or a summary answer box, found underneath the search box on the right of the organic search results.

Both Bing Chat and the Google knowledge graph provide a direct and relevant summary response to a query without the need for a further click to the source page, which also retains the user at the search engine.

This offering leads to so-called 0-click searches, which cannot be tracked in the data relating to a digital presence and are only seen in data that relates content visibility to SERPs.

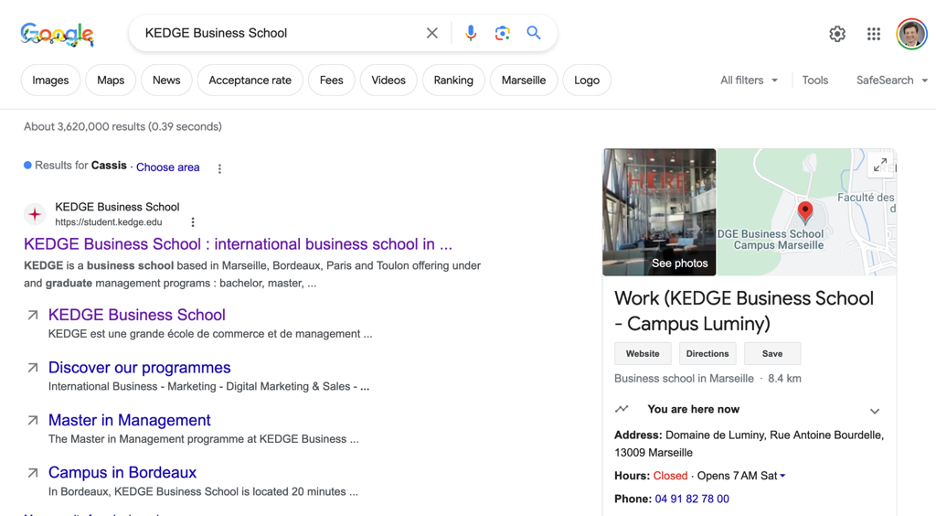

Some Google SERP snippets can also appear as a knowledge graph (Figure 12.8) or a search snippet (Figure 12.9).

Figure 12.8: Google SERP for “KEDGE Business School” including a knowledge graph on the right-hand side of the page.

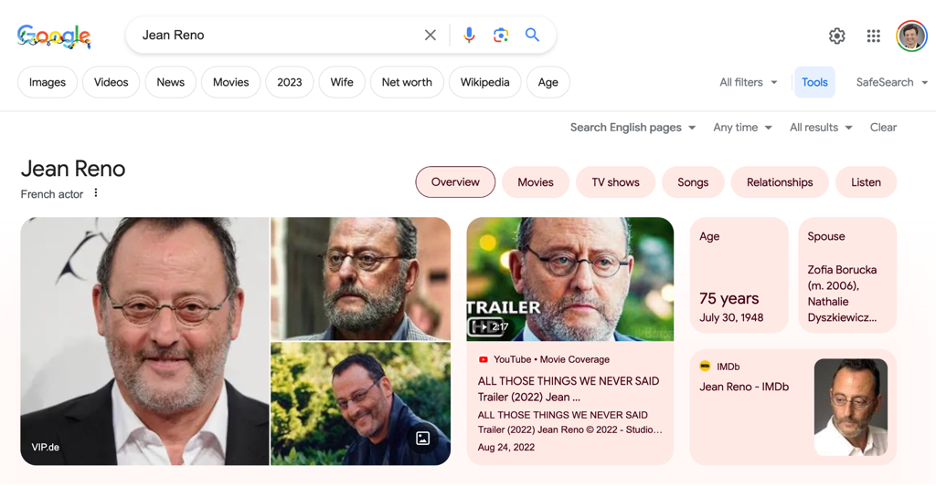

Figure 12.9: Search snippet for Jean Reno.

The volatility of the SERPs can be evidenced by the varying results produced by the same search in different locations.

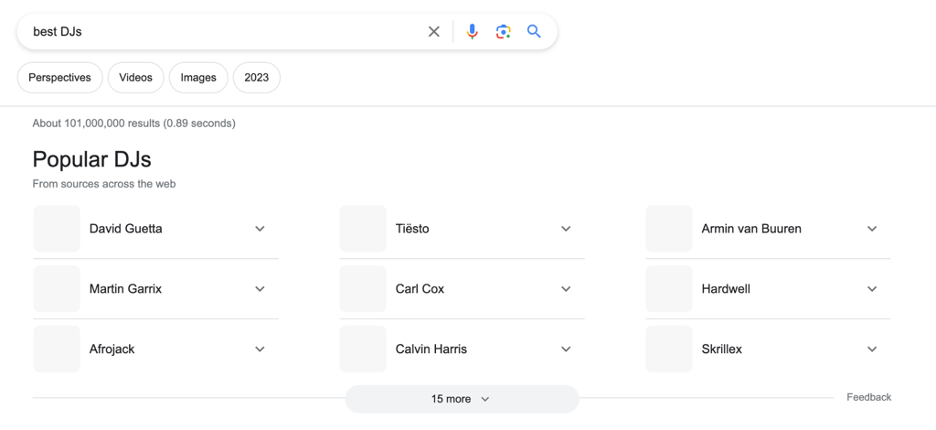

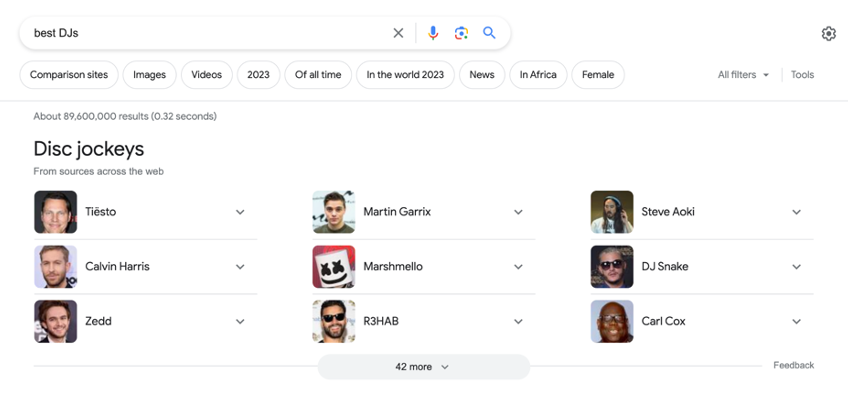

The listing for the US market (Figure 12.10) and carousel for the European market (Figure 12.11) for “best DJs” show that geolocation increasingly comes into play in the page ranking of SERPs.

Personalisation is also relevant. For example, when a user is logged into a Google product, their browser history influences the organic SERPs. SERPs change depending on what terms are used.

This means a pluralised term produces different SERPs to searches that use the singular term.

Tools, such as those offered by Semrush, include functionality to quickly identify this form of volatility and understand sectors that are being affected by changes.

Figure 12.10: US results for “best DJs”

Figure 12.11: European results for “best DJs”

Recent innovations by Google include the Search Generative Experience (SGE), currently being tested in the US market. This is a different search experience that is more visual and uses artificial intelligence.

The 2015 introduction of RankBrain and other algorithms means that Google now better understands human language and context.

Industry publications, including Search Engine Roundtable and Search Engine Land, keep pace with this dynamic landscape.

Implementing Search Engine Optimisation

Identification of the most relevant search terms is the starting point for developing a website map and themes for content.

The search terms will also define the focus for individual pages and blog posts. This approach has a focus on the technical/on-page, content, and off-page aspects of the website.

Any SEO activity begins with prior knowledge of the organisation, including its objectives and targets as well as the persona that has been defined.

The initial phase of optimising a website for Google search involves:

- A technical and content audit.

- Keyword identification and analysis.

- Implementing any changes in the content management system (CMS) and content.

- Using the secure HTTPS protocol for the website.

- Submitting the website to Google Search Console.

- Submitting the website to Bing Webmaster Tools.

- Submitting the website to other appropriate search engines.

- Adding website tracking code such as Google Analytics, Hotjar or others to the website.
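One item in the list above, using HTTPS, is easy to check automatically during a technical audit. The Python sketch below is a minimal example that flags URLs not served over HTTPS; the `insecure_urls` function and the example.com addresses are hypothetical, and a real audit would take its URL list from a crawl or a sitemap.

```python
from urllib.parse import urlsplit


def insecure_urls(urls):
    """Return the URLs whose scheme is anything other than HTTPS."""
    return [url for url in urls if urlsplit(url).scheme.lower() != "https"]


# Hypothetical URL list, for example exported from a site crawl.
SITE_URLS = [
    "https://example.com/",
    "http://example.com/blog",
    "https://example.com/contact",
]

to_fix = insecure_urls(SITE_URLS)
```

Any URL returned by the function is a candidate for a redirect to its HTTPS equivalent.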

Summary

SEO plays a critical role in enhancing an organisation’s digital presence, and the dynamic nature of search engine algorithms provides a way to address the immediate pain touchpoints of a persona.

The chapter focused on the imperative for organisations to offer content that not only resonates with a persona’s needs but also aligns with the evolving criteria of search engines like Google, Baidu or Bing.

This latter alignment is crucial given the stakeholder tendency to focus only on the first SERP. It is important to adhere to ethical SEO practices, employing ‘White Hat SEO’ tactics that comply with search engine guidelines, as opposed to more manipulative techniques.

There is a need for continuous monitoring and reviewing of any SEO activities.

Frequently changing search engine algorithms, which now heavily incorporate AI and machine learning, mean that a campaign’s parameters can change quickly. SEO is not a “set and forget” activity.

Staying informed and adapting to these changes is essential for maintaining and improving search engine rankings.

The environmental impact of digital activities should also be a consideration in SEO and wider marketing practices. Optimising websites not only aligns with SEO best practices but also contributes to sustainability.

Search engines offer marketers one of the largest big data sets available to refine and target their content creation activities.

Historic search behaviours are good predictors of the future, and the use of these resources helps marketers to optimise and be better placed to offer value to their persona.

To read the book, SEJ readers have an exclusive 20% discount until the end of 2024 using the code DSMM24 at Routledge.

The book officially launches on October 7 2024 and you can attend the event with a chance to hear from some of the authors by registering through this link.
