This month’s items are a real toolkit for dedicated SEOs. Many of them focus on areas that aren’t often covered. That means you may catch your competitors sleeping.

It starts with a pair of detailed guides. First, you’ll learn why some feel that web design and UI matter more in 2020. Then, you’ll get some advice on how to pitch to the press like a pro.

After that, here comes your data fix in the form of two chunky case studies. Is your keyword traffic tool giving you good data? Does the top search result just keep getting lower? Numbers don’t lie, but you may want to interpret the data for yourself.

Finally, we cover the news. Don’t miss Google’s response to a Moz post or some fresh tips on how to future-proof a content site. Then, catch two dispatches from Google on how they treat nofollow links and whether to worry if your content is being scraped.

Let’s dive in. First, we’ll look at why one SEO is arguing that web design and UI matter more than ever in 2020, and what changes may be necessary to keep up.

This is why web design and UI matter for SEO in 2020

https://seobutler.com/web-design-ui-ux-seo/

This article begins with an interesting note—

“Google Search Console now throws errors for elements of design and has even partnered up with https://material.io/ to help guide and influence your design.”

This much can be confirmed…

“Google is getting better at detecting ugly websites, and they want you to know it.”

What it means for the future is another question, but this author theorizes that following Google’s own design choices will lead you in the right direction.

For example, the first element discussed in the guide is ‘white space’. This is a very old design term that refers to the amount of empty space in a complete image (in our case, a web page).

The author points out that Google’s own early research found that white space conveys trust and authority. They reserve tons of room for it on all their services, including (most notably) Google.com.

Similar arguments are applied to other design elements throughout the article, including typography, tone, direction, and headings. The author does an impressive amount of work connecting each suggestion to Google’s guidelines or other work they’ve published.

Does that mean applying these suggestions can help you rank? Not necessarily, and the author doesn’t make that claim. However, the author fairly points out that these design choices all work to the benefit of human readers. Doing a better job of that rarely goes wrong in the long run.

While your mind is still on how to present yourself better, let’s look at a more direct guide. This one claims to teach you how to pitch to the press like a PR Pro.

How to Pitch the Press Like a PR Pro

https://www.canirank.com/blog/how-to-pitch-journalists/

If building higher-quality links is one of your big goals this year, you should be considering the fertile ground of major news sites. News sites such as the New York Times, Vox, and Wall Street Journal have massive amounts of authority.

Naturally, journalists aren’t looking for your average blog fare. If you want those links, you need to approach them the right way and offer the right kind of value. This guide claims that it can help you do that.

Source: https://www.canirank.com/

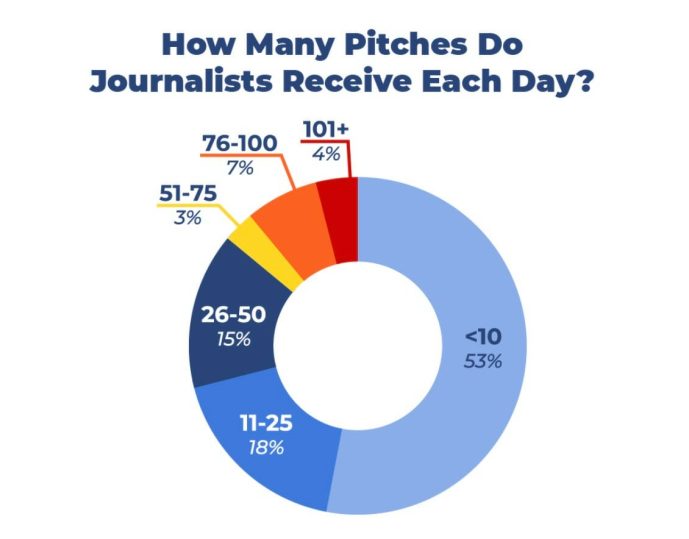

It takes you through the journalists’ mindset, complete with data about how many pitches the average journalist gets (as many as 100), and surveys about what practices annoy them the most (having no clue what subjects they report on).

That’s followed by a step-by-step section where the guide teaches you to develop hooks designed for journalists and to put the focus on your credibility. This is illustrated with an example pitch near the end. It has all the elements you need marked for easy practice.

Now that you’ve picked up some new tricks, let’s look at our collection of case studies for the month. First, we’ll take a look at the big keyword research tools and what the data says about where each one stands this year.

Large Scale Study: How Data From Popular Keyword Research Tools Compare

https://backlinko.com/keyword-research-tool-analysis

Better keyword research is an important part of competing for business online.

Is your favorite tool starting to lose its edge? This study by Backlinko takes a look at where some of the biggest names have changed.

The study looks at a long list of popular tools:

- Google Keyword Planner

- Ahrefs

- SEMrush

- Moz Pro

- KeywordTool.io

- KWFinder

- LongTailPro

- SECockpit

- Sistrix

- Ubersuggest

If you’re looking for a clear winner, you won’t really find it in this article. The emphasis is more on the specific ways that each one stands out.

There might be more variation than you’d imagine. The quality and volume of automated keyword suggestions received a lot of focus. The advantage in that category went to paid apps.

In other cases, it wasn’t immediately clear who had the advantage. The different tools appear to use wildly different calculations to estimate measures like keyword difficulty and CPC.

In the final case study of the month, we’ll look at a more measurable metric. This one is so precise you can measure it with a ruler—just don’t be surprised if you don’t like the answer.

How Low Can #1 Go? (2020 Edition)

https://moz.com/blog/how-low-can-number-one-go-2020

From the earliest days of Google, SEOs have chased after the glory of the top SERP spot. According to this case study by Moz, the top isn’t as lofty as it used to be.

In fact, the ‘top’ result may take you 3-4 scrolls down the page to even locate. This, as the study points out, has a lot to do with the introduction of sections that have replaced the first organic result.

Ads, featured snippets, local packs, and video carousels have all played a role in forcing organic results further down the page.

How much further? According to Moz, by as much as 2800px. That’s further than most people want to scroll.

Of course, the numbers aren’t as bad as 2800px for most searches. Queries that don’t typically return video results are far more likely to have organic results higher up.

However, as Moz points out, this is a trend.

Compared to the last time they performed this experiment, the worst examples here were about 3x worse than the worst examples in 2012.

Is that the whole story? Google says no. In the first news item of the month, we’ll be looking at their response to this very case study.

Google’s Response to Moz Article Critical of SERPs

https://www.searchenginejournal.com/google-challenges-moz-article/352078/

So, Google was not entirely pleased with the results of that last item. They came out shortly afterward with a response seeking to clarify a few matters.

Google begins with the argument that there isn’t a distinction between old-style organic results and new types such as the featured snippet. Both earn their position organically through the algorithm.

Furthermore, they argue, these new forms represent more effective ways of addressing user intent that weren’t possible before.

Moz used “Lollipop” as an example of a search that didn’t have any organic results until the 2000px mark. They made this claim on the basis that videos, songs, and lyric results were all stacked before the first organic result.

Google claims that example just proves how dynamic other forms of results have become. They insist that the “clutter” that appears above organic results has a better chance of meeting searcher needs.

Maybe you find one argument more compelling than the other. It’s true that results have more functionality than before. However, it’s also true that Google’s recent changes have come at a loss to organic results in more ways than the one discussed here. Let’s leave it there.

Next up, a neat bit of analysis into how to future-proof a content site investment.

Future-Proofing A Content Site Investment

https://onfolio.co/future-proofing-a-content-site-investment/

This piece was a little too hypothetical to be placed among the guides, but I know the ideas discussed here are right up the alley of a lot of affiliate SEO marketers.

The author has a fresh theory on how you can maintain the value of existing sites if their original focus becomes less lucrative. You can create a website that doesn’t leave you too reliant on one type of traffic or revenue, and you can do it with affordable, devalued domains.

The plan is to create informational content on the sites that were originally designed for affiliate needs. Visitors to most affiliate sites don’t come back. They come in off a link, convert once (if you’re lucky), and then leave. That’s an insecure form of traffic over the long term.

However, you can earn more (and different) traffic by developing tailored informational content to keep those buyers coming back.

Informational content drives the repeat visitors that Google considers so important. To attract them, you have to get closer to understanding the needs of the customers you’ve attracted to the affiliate product.

The article uses the example of drones. If you can attract someone to a site for an affiliate sale of a drone, you may be able to make a regular visitor out of them by appealing to their relationship to that hobby.

So, for the drone, you would want to create hubs of content that meet the needs of one of the following groups:

- Hobbyists

- Casual flyers

- Hikers

- Photographers (and there are lots of different groups within this group)

- Construction industry

- Drone racers

This way, you can turn one-timers into fans. The guide has a lot more detail and is worth a longer look if you need a new way forward for your site.

As long as you’re making some minor updates, you should make sure you’ve reviewed Google’s latest on how to treat nofollow links.

Google’s new treatment of nofollow links has arrived

https://searchengineland.com/googles-new-treatment-of-nofollow-links-has-arrived-329862

On March 1st, the rules for nofollow changed. From now on, nofollow is treated as a hint rather than a rule that a crawler is forced to follow.

This doesn’t mean that a lot has changed. Nofollow is still applied where you left it, and—unless you feel very strongly about Google not crawling a given page—it doesn’t require any immediate action from you.

Perhaps the most substantial change is that link juice is now going to start flowing to places where it didn’t in the past. If you’ve made heavy use of nofollow on older sites, it’s worth keeping an eye on the data over the next couple of months.

There are also some new attributes that you can use if you want to send a more specific message to Google. These attributes are a lot more specific to intent.

rel="sponsored": Used to identify links on your site that are advertisements, sponsorships, or other paid agreements.

rel="ugc": Recommended for links appearing in user-generated content (for example, comments and forum posts).

rel="nofollow": Use in any scenario where you want to link to a page but don’t want to pass along ranking credit to it.

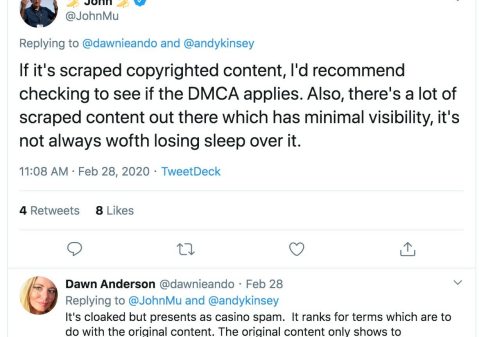
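If you want to see which of these attributes your existing pages already use, a short script can list every link along with its rel hints. The following is a minimal sketch in Python using only the standard library. The class name `RelAudit`, the function `audit_links`, and the sample markup are all hypothetical and exist only for illustration; only the three rel values come from the article.

```python
from html.parser import HTMLParser

# The three rel values described above. Everything else in this
# sketch (names, sample markup) is an illustrative assumption.
REL_HINTS = ("sponsored", "ugc", "nofollow")


class RelAudit(HTMLParser):
    """Collects (href, hints) pairs for every <a href=...> tag."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel may hold several space-separated values, e.g. "ugc nofollow"
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        hints = [hint for hint in REL_HINTS if hint in rel_tokens]
        self.links.append((href, hints))


def audit_links(html):
    """Return a list of (href, hints) tuples found in the HTML string."""
    parser = RelAudit()
    parser.feed(html)
    parser.close()
    return parser.links


sample = """
<p><a href="https://example.com/ad" rel="sponsored">Partner offer</a></p>
<p><a href="https://example.com/comment" rel="ugc nofollow">Reader link</a></p>
<p><a href="https://example.com/plain">Editorial link</a></p>
"""

for href, hints in audit_links(sample):
    print(href, hints or "no hint (normal link)")
```

Links that come back with an empty list carry no hint at all, so they are the ones to check if you meant to mark them as paid or user-generated.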

Now let’s move on to Google’s next big message of the month: You don’t have to lose sleep over others scraping your content.

Google: Don’t Lose Sleep Over Others Scraping Your Content

https://www.seroundtable.com/google-dont-lose-sleep-over-stolen-content-29078.html

It was once widely believed that having your content scraped could come back to bite you. Should you be worried about losing ranks to your own content on other websites?

John Mueller says ‘no’, though without much detail. He argues that scraped content mostly has limited visibility. Additionally, there is rarely much risk of being outranked by someone else unless your site has been penalized for another reason.

This isn’t a complete answer, of course. There are indeed blackhat cases where real websites managed to scan someone’s RSS feed and steal new content before Google indexed it.

This is deeply frustrating, but there is something you can do in cases like those: Request indexing in Google Search Console (GSC) immediately after publishing.
