I hope that you’ve never had to go through the pain of being hit by an algorithmic update.

You wake up one morning, your traffic is decimated, and your rank tracker is littered with red arrows.

Algorithmic penalties are not a subject I like to trivialize. That’s why the case study I am about to share with you is different from most you’ve read before.

This case study is a testament to the faith and hard work of my agency, The Search Initiative, in light of a huge shift in the SEO landscape.

Unfortunately, with core algorithmic updates you can’t simply change a few things and expect to get an immediate ranking recovery.

The best you can do is prepare for the next update round.

If you’ve done all the right things, you experience gains like you’ve never seen before.

Even if you’ve never been hit with an algorithmic penalty, you should care about these updates.

Doing the right things and staying one step ahead can get your site in position for huge gains during an algorithm roll out.

So what are “the right things”? What do you need to do to your website to set it up for these types of ranking increases when the algorithms shift?

This case study from my agency The Search Initiative will show you.

The Challenge: “Medic Algorithm” Devaluation

I want to start this case study by taking you back to its origins.

There was a big algorithm update on the 1st of August 2018. A lot of SEOs called it a “Medic Update” because it targeted a huge chunk of sites related to health and medicine.

https://www.seroundtable.com/google-medic-update-26177.html

What Does an Algorithm Update Look Like?

Let’s start with a few facts.

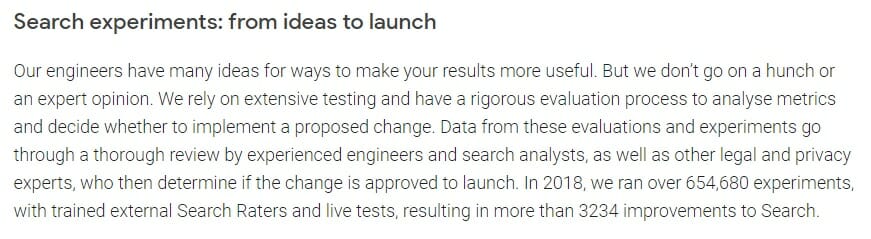

Fact #1: Google is constantly running search experiments.

To quote Google from their official mission page:

“In 2018, we ran over 654,680 experiments, with trained external Search Raters and live tests, resulting in more than 3234 improvements to Search.”

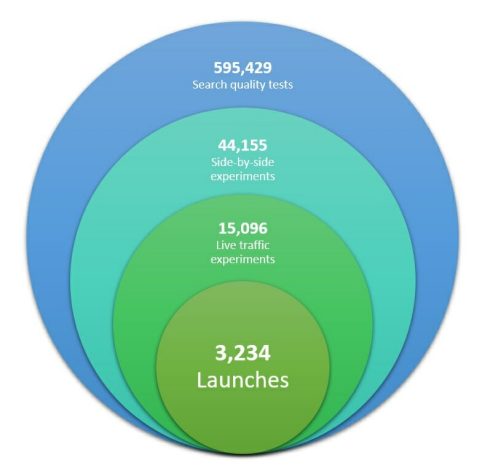

Here are the official numbers relating to the search experiments they ran last year:

- 595,429 Search quality tests – this is the number of tests they have designed to run in the search engines. Some of them were only conceptual and were algorithmically proven to be ineffective, therefore these never made it to the next testing stages.

- 44,155 Side-by-side experiments – this is how many tests they have run through their Search Quality Raters. The SQR team looks at the search results of old and new algorithms side-by-side. Their main job is to assess the quality of the results received, which, in turn, evaluates the algorithm change. Some changes are reverted at this stage. Others make it through to the Live traffic experiments.

- 15,096 Live traffic experiments – at this stage, Google is releasing the algorithm change to the public search results and assesses how the broader audience perceives them, most likely through A/B testing. Again, there will be some rollbacks and the rest will stay in the algorithm.

- 3,234 Launches – all the changes that they rolled out.

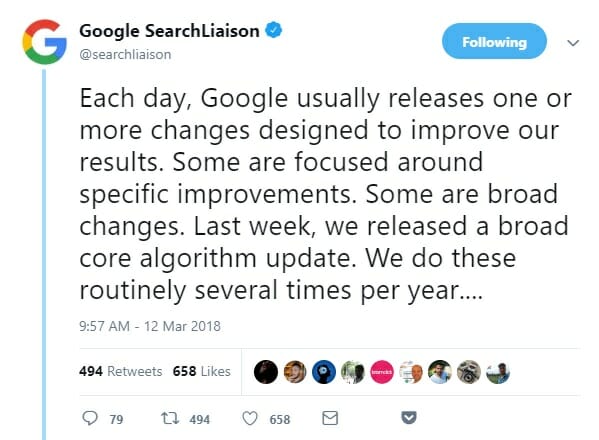

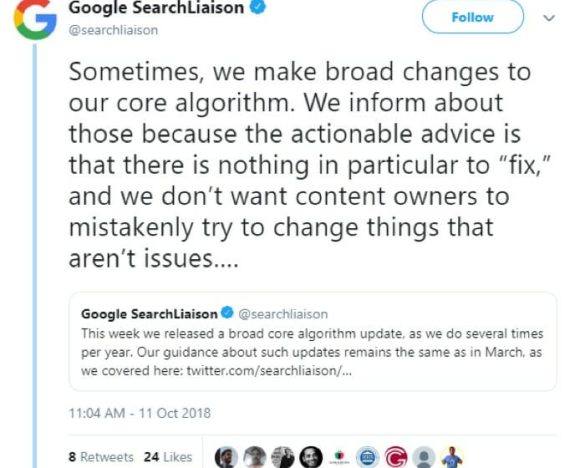

Fact #2: Google releases algorithm improvements every day and core updates several times a year!

Bearing in mind everything said above, Google releases algo improvements basically every day.

Do the math…

3,234 releases a year / 365 days in a year = 8.86 algo changes a day!
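If you’d like to double-check that math, here is a minimal sketch in Python. It uses only the figures quoted above; the variable names are mine.

```python
# Figures quoted above from Google's published 2018 numbers.
search_quality_tests = 595_429
side_by_side_experiments = 44_155
live_traffic_experiments = 15_096
launches = 3_234

# The three experiment types add up to the headline experiment count.
total_experiments = (
    search_quality_tests + side_by_side_experiments + live_traffic_experiments
)
print(total_experiments)  # prints 654680

# Average number of launched changes per day.
changes_per_day = launches / 365
print(f"{changes_per_day:.2f} algo changes a day")  # prints "8.86 algo changes a day"
```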

They’ve also confirmed that they roll out core quality updates several times per year:

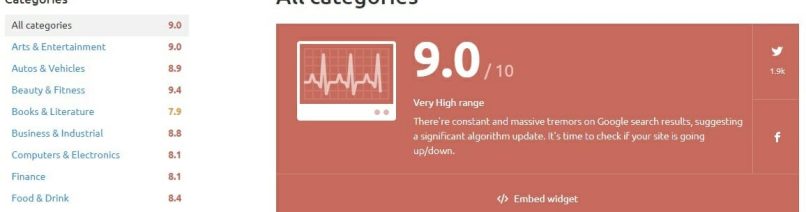

When you suspect something is going on, you can confirm it by simply jumping over to your favorite SERP sensor to check the commotion:

https://www.semrush.com/sensor/

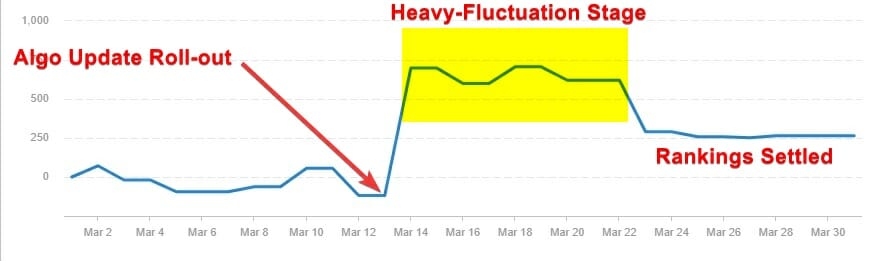

During this period, rankings typically fluctuate and eventually settle. Like in the below screenshot:

A lot of SEOs (myself included) believe that during the Heavy-Fluctuation Stage, Google is making adjustments to the changes they’ve just rolled out.

It’s like cooking a soup.

First, you add all the ingredients, toss in some spices, and let it cook for some time.

Then you taste it and add more salt, pepper or whatever else that is needed to make it good.

Finally, you settle with the taste you like.

(I’ve never actually cooked soup other than ramen, so hopefully, this analogy makes sense.)

Fact #3: There will initially be more noise than signal.

Once there is an algo update, especially an officially confirmed one, many budding SEOs will kick into overdrive writing blog posts with theories of what particular changes have been made.

Honestly, it’s best to let things settle before theorizing:

One strength we have as website owners is that there are lots of us – and the data that is collected by webmasters on forums and on Twitter is sometimes enough to give an indication of what changes you could possibly make to your sites.

However, this is not usually the case, and when it is, it is usually difficult to tell if what the webmasters are signaling is actually correct.

Keep an eye on those you trust to give good advice.

That said…

At this stage, there is more noise (rumors, urban legends and people wanting to show off) than any actual reasonable advice (signal).

At my agency, we always gather a lot of data and evidence first, before jumping to any conclusions… and you should do the same.

Very shortly, we’ll be getting to that data.

The Question: Algorithmic Penalty or Devaluation?

When things go wrong for you during an algorithmic update, a lot of SEOs would call it an “algorithmic penalty”.

At The Search Initiative, we DO NOT AGREE with this definition!

In fact, what it really is, is a shift in what the search engine is doing at the core level.

To put it in very simple terms:

- Algorithmic Penalty – invoked when you’ve been doing something against Google’s terms for quite some time, but it wasn’t enough to trigger it until now. It’s applied as a punishment.

- Algorithmic Devaluation – usually accompanying a quality update or a broad algorithm change. Works at the core level and can occasionally influence your rankings over a longer period of time. Applied as a result of the broader shift in the quality assessment.

Anyway, call it what you want – the core algo update hitting you means that Google has devalued your site in terms of quality factors.

An algorithmic shift affecting your site should not be called a penalty. It should be viewed as a devaluation.

You were not targeted, but a bunch of factors have changed and every single site not in compliance with these new factors will be devalued in the same way.

The good thing about all this… once you identify those factors and take action on them, you’ll be in a great position to actually benefit from the next update.

How to Know You’ve Been Hit by an Algo Update?

In some cases, a sudden drop in traffic will make things obvious, such as this particular site that I would like to look at more specifically.

But we’ll get to that in a second.

Generally speaking, if your traffic plummets from one day to the next, you should look at the algorithm monitoring tools (like the ones below), and check Facebook groups and Twitter.

Google Algorithm Change Monitors:

Useful Facebook Groups:

Useful Twitter Accounts to Follow

The Patient: Our Client’s Site

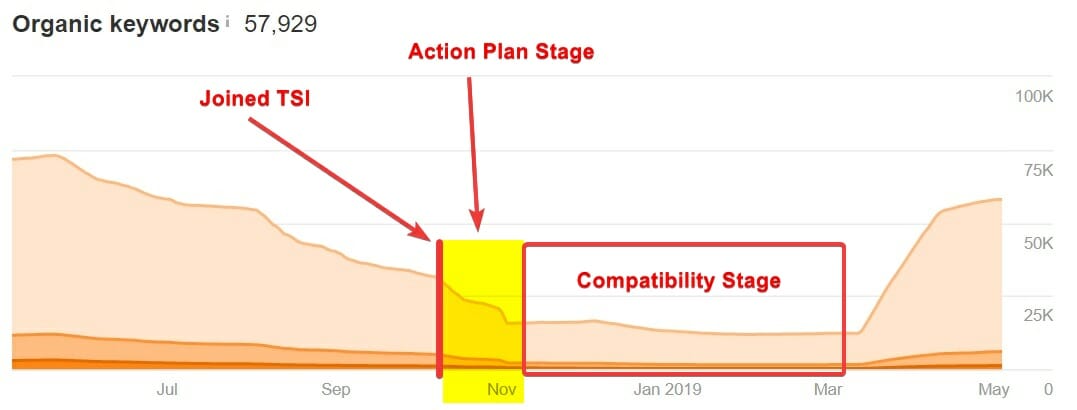

The client came on board as a reaction to how they were affected by the August update.

They joined TSI towards the end of October.

This was the ‘August 2018 Update’ we were talking about – and still no one is 100% certain of the specifics of it.

However, we have some strong observations. 😉

Type of the Site and Niche

Now, let’s meet our patient.

The website is an authority-sized affiliate site with around 700 pages indexed.

Its niche is based around health, diet and weight loss supplements.

The Symptoms

As the industry was still bickering, there were no obvious ‘quick fixes’ to this problem.

In truth, there will likely never again be any ‘quick fixes’ for broad algo updates.

All we had to work with was this:

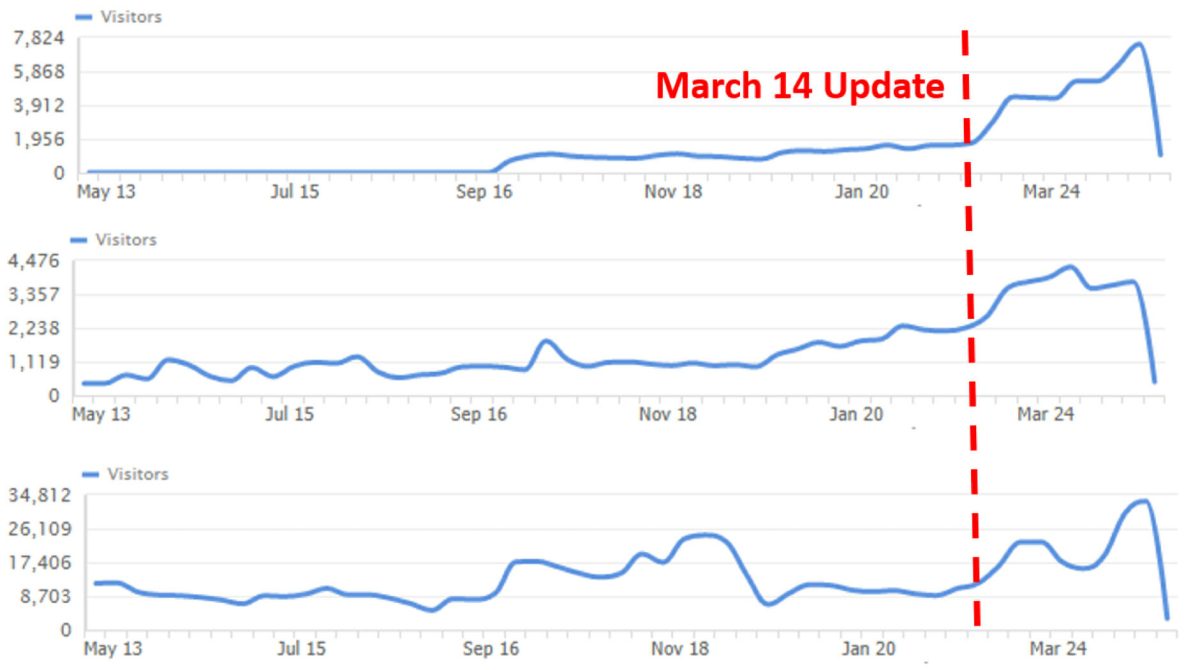

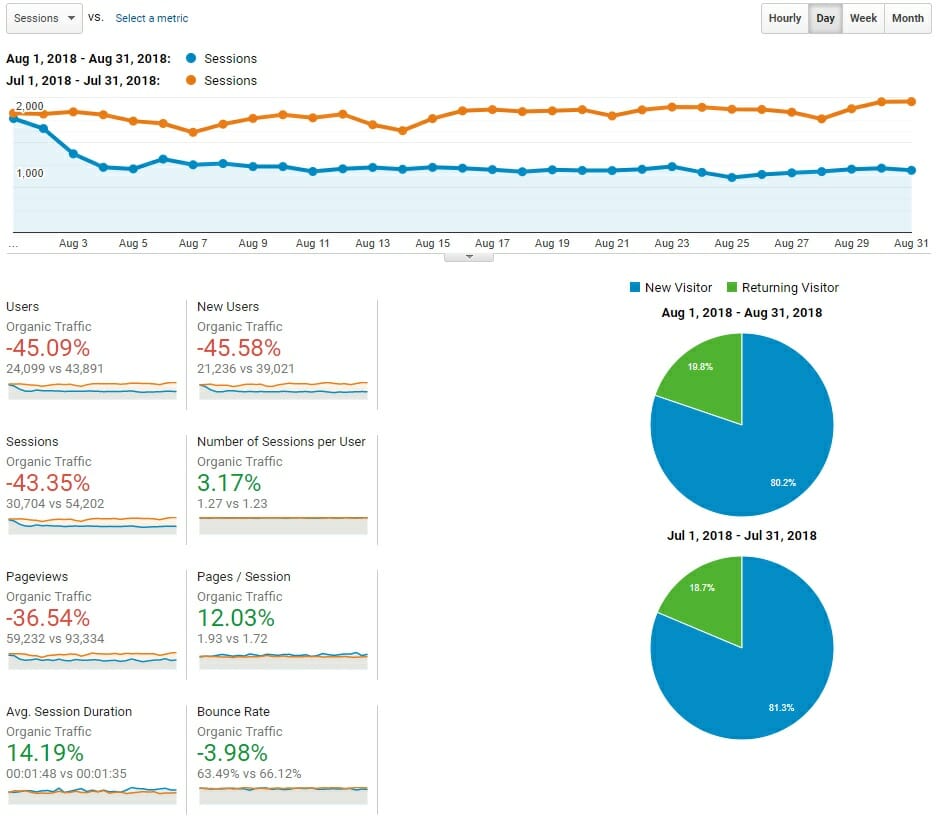

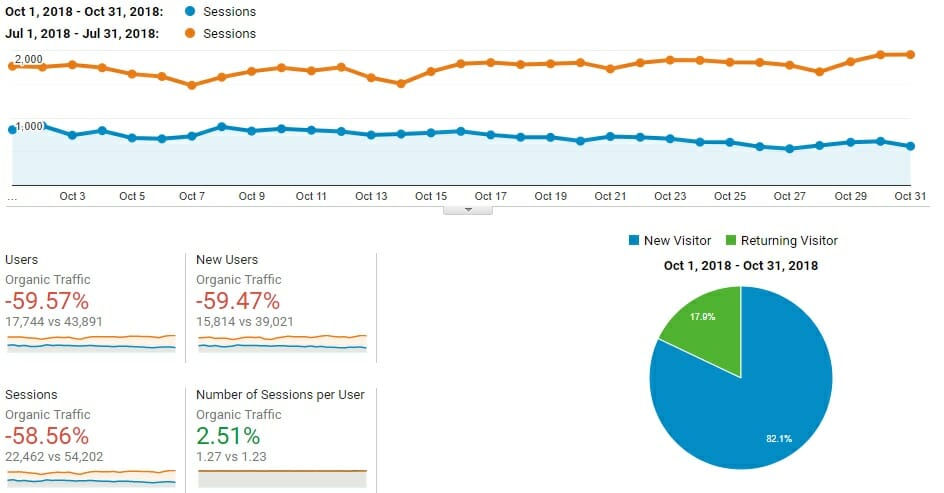

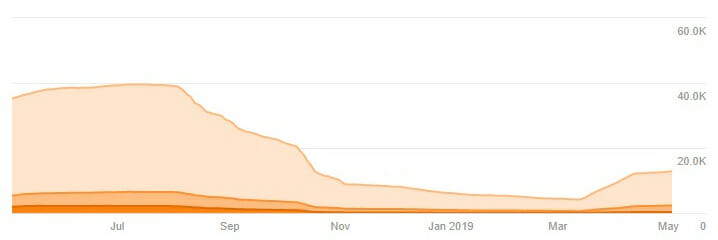

You can see that in this particular case, the number of users visiting the site dropped by 45% in July-August.

Let me rephrase that: nearly half of the traffic, gone in a week.

If we look at October, when we were running all our analyses and creating the action plan, the organic traffic looks even more pessimistic:

With the niche, site and timeline evidence, we could easily conclude what follows:

100% Match with The “Medic” Update

How We Recovered it – What are the “right things”?

To contextualize our decision making on this project, this is a rundown of what we know and what we knew then:

What we knew then

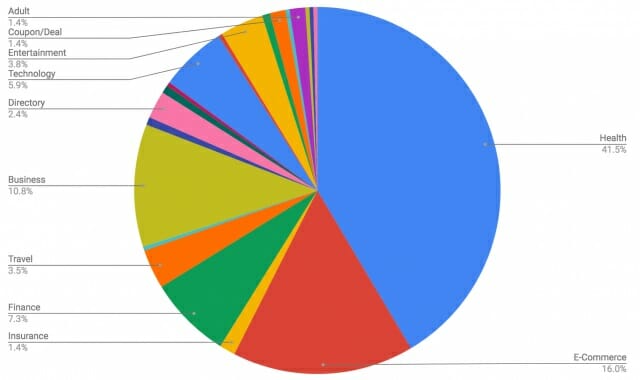

- It seemed that many of the affected sites were in the health and medical niches (hence, the “Medic” update).

- Sites across the web have experienced a severe downturn in rankings.

- Rankings were affected from page one down. (This was surprising – most of the previous updates had less of an impact on page 1.)

- A lot of big sites with enormous authority and very high-quality have also been devalued. We had speculated that this would suggest a mistake on Google’s part…

What we know now

- ‘The August Update’ affected sites from a variety of niches.

- The effects of this were particularly potent for sites in the broad health niche with subpar authority and trust signals.

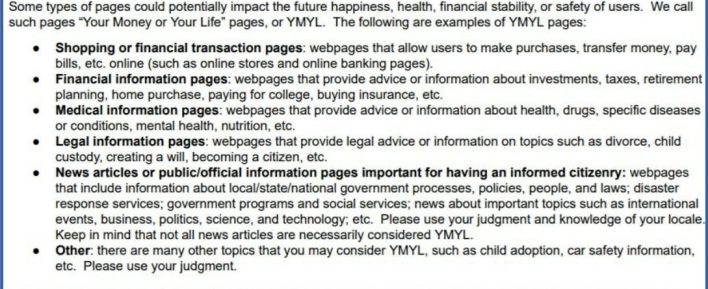

- This change has been considered by some as a deliberate step towards the philosophical vision Google had been laying out since the first mention of YMYL in 2013.

- The update accidentally coincided with an update of Google’s Quality Rater Guidelines. (The document put additional emphasis on how to EAT the YMYL sites. No pun intended.)

- The content was a very big part of the quality assessment. In particular – content cannibalization.

- The changes were seemingly there to stay (no rollbacks through the aftershocks in September – quite the contrary) and there were no quick fixes.

Quick Fix?

Unfortunately, unlike manual actions, there are no guidelines that you can follow to simply ‘switch back on’ your rankings.

An algorithmic devaluation is a product of data-driven change. It essentially means that what you were previously doing is no longer deemed the thing that users want when they search for the terms that you were previously ranking for. It no longer is the right thing.

I’m going to summarise it in a very simple statement (which became our motto):

DO ALL THE THINGS!

Let’s discuss what “all the things” are…

The Tools You Need for Auditing

Auditing websites is an iterative process.

- Collecting data

- Making changes

- Understanding the impact of the changes

- Collecting data

- Making changes

Having access to the tools required to audit a site thoroughly and quickly is really useful when there is no obvious, specific problem to fix and you need to fix everything.

Here are the main tools we used and how we used them.

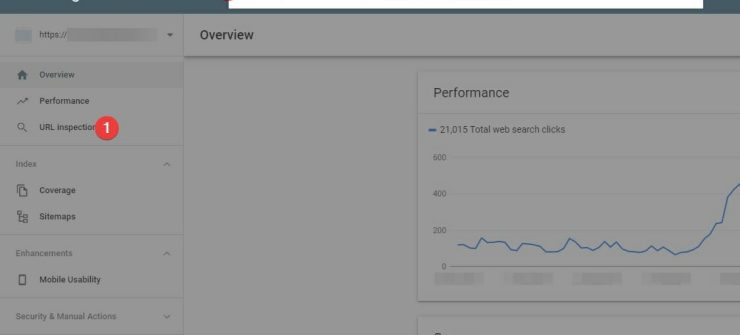

Google Search Console (The Essential)

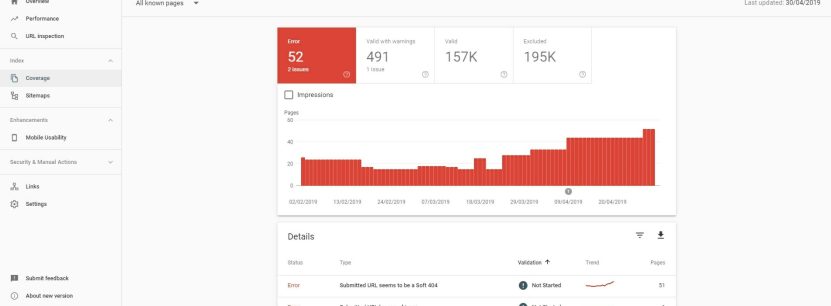

The coverage tab on the left navigation bar is your friend.

I elaborate further on the approach and use of GSC Coverage Report below.

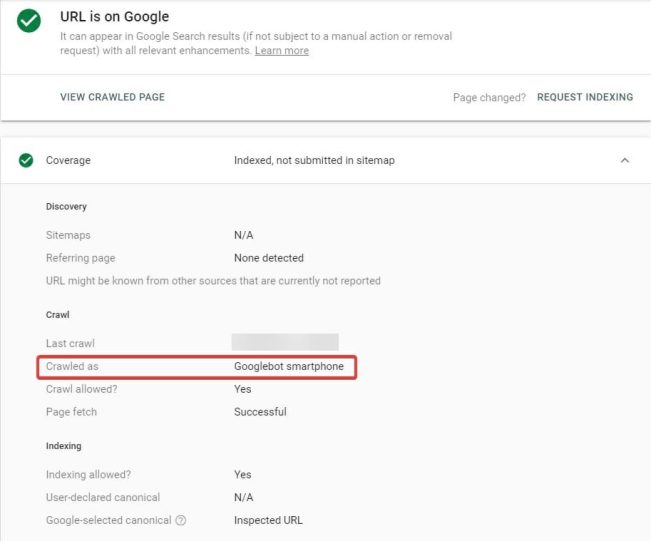

Using the URL Inspection tool you can check your site page-by-page to determine if there are any issues with the page.

Or if you just want to find out if your site is migrated to mobile first indexing:

Ahrefs (The Ninja)

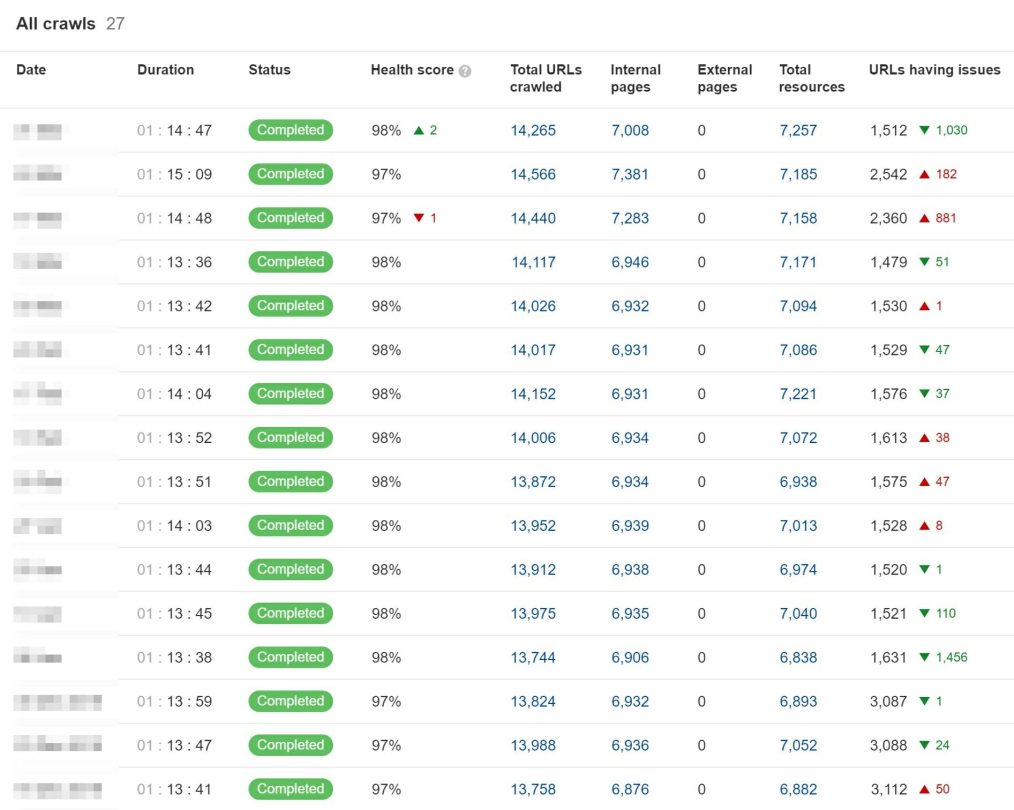

If you have an Ahrefs subscription, their new site auditing tool is an excellent way to repeatedly audit your site.

Some of the main errors and warnings you’re looking for with Ahrefs are page speed issues, image optimization problems, internal 4xx or 5xx errors, internal anchors, etc.

What I like about it the most is that you can schedule the crawl to run every week and Ahrefs will show you all the improvements (and any derailments) as they happen.

Here’s a screenshot from a client where we’ve run a weekly, periodic Ahrefs analysis and have strived to keep a health score of 95%+.

You should do the same.

Obviously, we also used our ninja tool (Ahrefs) for the link analysis (see below), but who doesn’t?

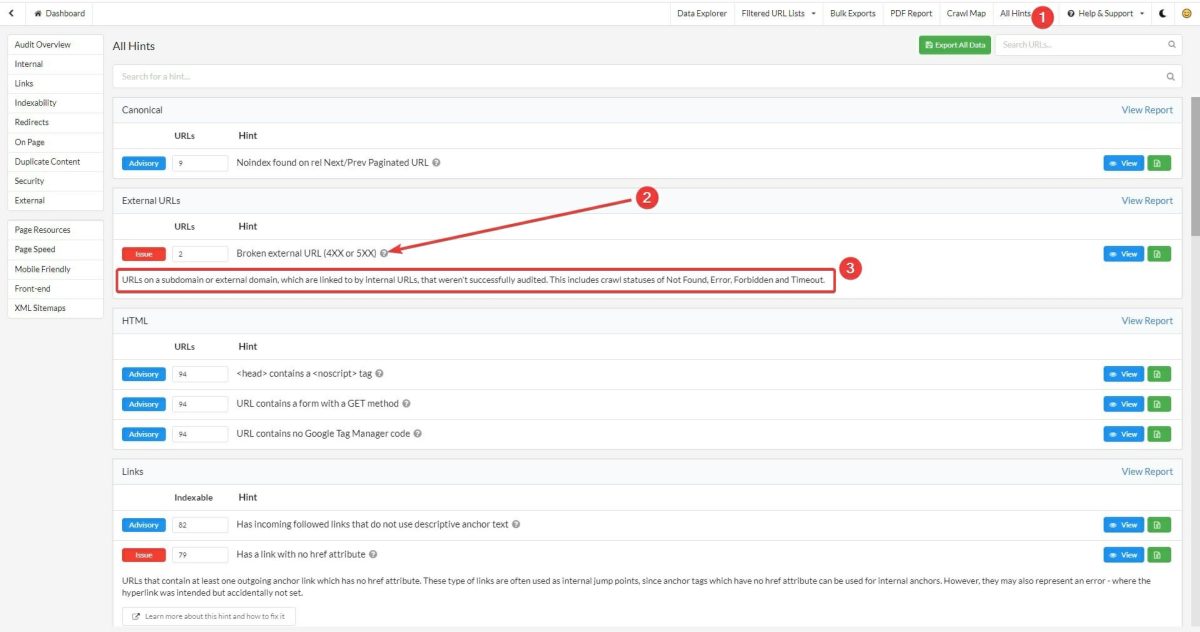

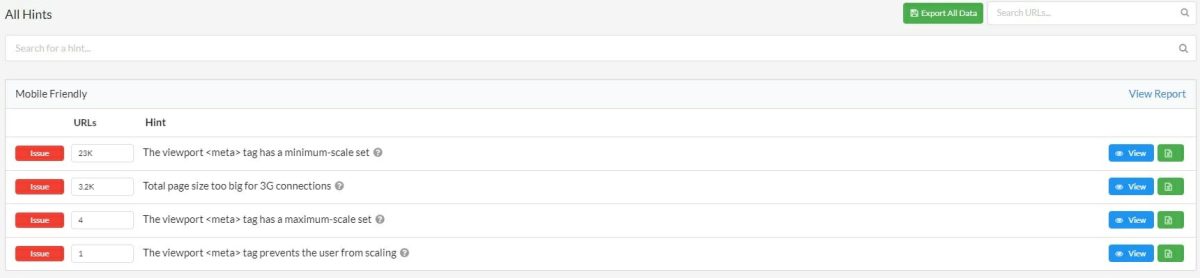

Sitebulb (New Kid on the Block)

You might recognize the name if you’ve read my algorithmic penalty case study.

Sitebulb is especially good at telling you exactly what the problems are. It’s an analysis tool as well as a crawler.

Here are some (cool) examples of the issues Sitebulb is able to discover for you:

- Duplicate URLs (technical duplicates)

- URLs with duplicate content

- URL resolves under both HTTP and HTTPS

- URLs that have an internal link with no anchor text

- Pagination URL has no incoming internal links

- URL receives both follow & nofollow internal links

- Total page size too big for 3G connections

- Critical (Above-the-fold) CSS was not found

- Server response too slow with a Time-to-First-Byte greater than 600ms

- Page resource URL is part of a chained redirect loop

- Mixed content (loads HTTP resources on HTTPS URL)

Here’s a full list of the hints you can find in Sitebulb:

These ‘Hints’ will help you with efficiency. You get insights enabling you to bundle simple issues into the overlying problem, such that you can fix them all at once, rather than individually.

My team loves Sitebulb. It has proved to be incredibly useful for the iterative auditing strategy.

If you don’t know what Sitebulb is, I would recommend you check it out.

Surfer SEO (SERP Intelligence 007)

I fell in love with Surfer SEO the moment I started playing with it.

It’s become a staple tool at The Search Initiative.

Shortly, you’ll learn all about how to use Surfer SEO to optimize the hell out of your content.

Recovery, Optimization, and Implementation

Content

Since we knew that a big part of the update is content, we kicked off a page-by-page analysis, scrutinizing every page on the client’s site.

Pruning the content

During the last Chiang Mai SEO Conference, just 3 months after the update, our Director of SEO, Rad, shared some of the most common issues related to the last update.

A few recoveries we’ve seen during these 3 months have suggested that one of the biggest problems was Content Cannibalization.

Below are Rad’s 10 most common issues that we found among sites affected by the August update:

(Lucky) Number 7: Avoid content cannibalization. No need to have the same sh*t in every article.

With that in mind, we started going through the site, post by post, restructuring the content.

You can approach it in 2 ways:

1. Consolidating pages – here you identify pages (through manual review) covering the same topics and combine them.

Once the content is consolidated, redirect the page with fewer keywords to the one with more.

A few examples of what posts you can consolidate:

- 5 Popular XYZ devices That Work

  5 Best XYZ Devices of 2023

  Best XYZ Devices That Actually Work

- 11 Things That Cause XYZ and Their Remedies

  What Causes XYZ?

- The Best Essential XYZ

  Top XYZ You Can’t Live Without

2. Pruning – here you select pages matching the below criteria and redirect them to their corresponding categories:

- Very minimal traffic in the last 12 months (<0.5% of total).

- No external inbound links.

- Not needed (don’t remove your ‘About us’ or ‘Privacy Policy’ page, ok?).

- Not ranking for any keywords.

- Older than 6 months (don’t remove new content!).

- Can’t be updated or there is no point in updating them (e.g. outdated products, etc).
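The pruning checklist above can be sketched as a simple filter. This is only an illustration: the `Page` fields and the function name are hypothetical, it assumes a page has to match every criterion to qualify, and the final call should still come from a manual review.

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    visits_12m: int          # organic visits in the last 12 months
    external_links: int      # external inbound links
    ranking_keywords: int    # keywords the page ranks for
    age_months: int          # months since publication
    essential: bool = False  # e.g. 'About us' or 'Privacy Policy'
    updatable: bool = False  # worth refreshing instead of pruning


def is_prune_candidate(page: Page, site_visits_12m: int) -> bool:
    """Return True only if the page matches every pruning criterion."""
    share = page.visits_12m / site_visits_12m if site_visits_12m else 0.0
    return (
        share < 0.005                    # under 0.5% of total traffic
        and page.external_links == 0     # no external inbound links
        and not page.essential           # not a page the site needs
        and page.ranking_keywords == 0   # not ranking for anything
        and page.age_months > 6          # older than 6 months
        and not page.updatable           # no point in updating it
    )


# Hypothetical pages, for illustration only.
old_post = Page("/discontinued-xyz-review/", 3, 0, 0, 30)
about = Page("/about-us/", 5, 0, 0, 48, essential=True)
print(is_prune_candidate(old_post, 100_000))  # prints True
print(is_prune_candidate(about, 100_000))     # prints False
```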

Strip the website from any content that could affect the overall quality of the site.

Make sure every single page on the site serves a purpose.

Improving the E-A-T Signals

Around “The Medic” update, you could hear a lot about E-A-T (Expertise – Authoritativeness – Trustworthiness).

The fact is that Google really values unique, high quality content, written by an authoritative expert. It also is quite good at determining the authority and quality, along with all the other relevancy signals.

Whether or not you believe that the algorithm (and not actual humans) can detect these E-A-T signals, we can assume that the algo can pick up some of them.

So let’s get back to doing “all the things.”

Here’s what was prescribed:

- Create site’s social media properties and link them with “sameAs” schema markup.

- Format the paragraphs better and write shorter bitesize paragraphs – authority sites would not make these readability mistakes.

- Do not overload CTAs – having call-to-action buttons is important, but having them in a user-friendly way is even more important!

Here’s how it looked originally – some posts had a CTA every 2 paragraphs:

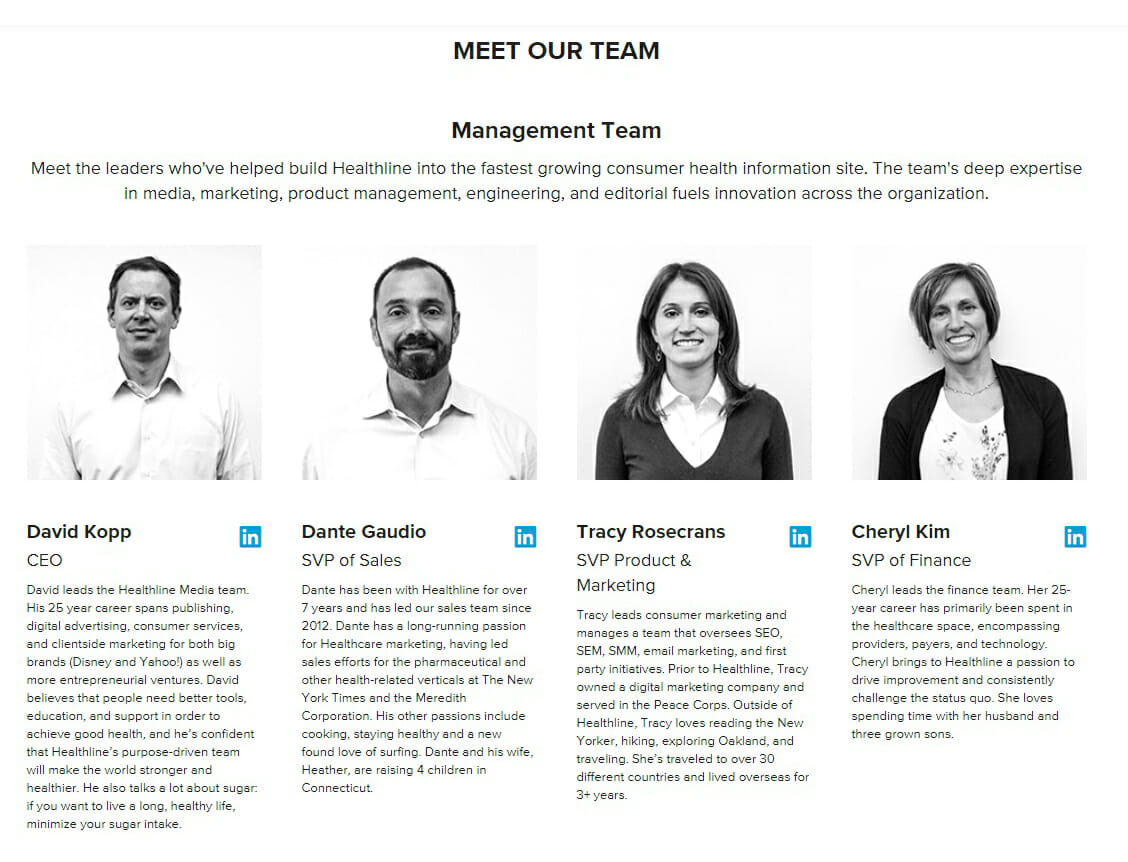

- Improve your personas – Building up your authors’ online authority helps improve the overall site’s authority and demonstrates expertise.

- Link to your About Us page from the main menu – It really does not hurt!

- Build your About Us page so it proves your expertise and authority – this is a good example.

- Include Contact Us page – there should be a way for your visitors to get in touch!

Here’s a really good example (without even using a contact form!):

- Create additional Disclaimer Page – A good practice is to create an additional Disclaimer Page linked from the footer menu and referenced wherever the disclaimer should be mentioned.

- Improve your author pages – here’s a really good example.

- Improve the quality of content – The quality of content for YMYL pages (Your Money or Your Life – sites directly impacting user’s financial status or well-being) should be absolutely trustworthy.

There are quality rater guidelines for this.
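For the “sameAs” item in the list above, here is a minimal sketch of what that markup could look like, generated with Python. The site name and profile URLs are placeholders, and this is not the exact markup used on the client’s site.

```python
import json


def organization_jsonld(name, url, profiles):
    """Build Organization JSON-LD that ties the site to its social profiles."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": list(profiles),
    }
    return json.dumps(data, indent=2)


# Placeholder values, for illustration only.
markup = organization_jsonld(
    "Example Health Blog",
    "https://www.example.com/",
    [
        "https://www.facebook.com/examplehealthblog",
        "https://twitter.com/examplehealth",
    ],
)

# Wrap the JSON in the script tag that goes into the page's HTML.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```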

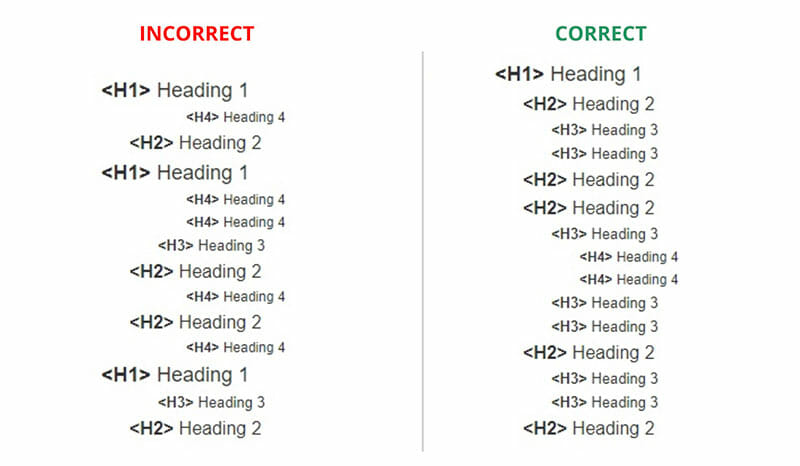

Heading Tags

On our client’s site, H3 headings on many pages were supposed to be marked as H2. Some H1 headings were marked as H2s.

Go over each of your published articles and make sure that the structure of each page is marked up properly from an SEO perspective.

It’s not a newspaper – so you shouldn’t need to always keep the correct heading hierarchy.

But please, at least keep it tidy.

It really helps Google grasp the more important bits of the content and the sections’ semantic hierarchy. Learn more about heading structure in my Evergreen Onsite SEO Guide.
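If you have a lot of articles to go over, a rough heading check can be scripted. The sketch below uses only Python’s standard library and flags two things: a page without exactly one H1, and a heading that skips a level (for example an H3 directly after an H1). The rules and names are my own simplification, so treat the output as hints for tidying up rather than hard errors.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect heading levels (1-6) in the order they appear."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html):
    """Return a list of human-readable heading structure hints."""
    collector = HeadingCollector()
    collector.feed(html)
    issues = []
    h1_count = collector.levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one h1, found {h1_count}")
    for prev, cur in zip(collector.levels, collector.levels[1:]):
        if cur > prev + 1:
            issues.append(f"h{prev} is followed by h{cur} (skipped a level)")
    return issues


# Hypothetical page fragment.
sample = "<h1>Best XYZ Devices</h1><h3>Our Pick</h3><h3>Runner-up</h3>"
for issue in heading_issues(sample):
    print(issue)
```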

Content Adjustments

The name of the game is to write the best content possible.

If you need help with this, organic seo services provide great options here.

Anyway, it requires you to audit what’s already ranking on page 1 and outdo all of it.

This means you’ll need to do this audit periodically, as page 1 is constantly in flux.

The aforementioned tool – Surfer SEO – has proven itself to be an amazing bit of software. Without it, we couldn’t do a full, in-depth analysis like this in a reasonable amount of time.

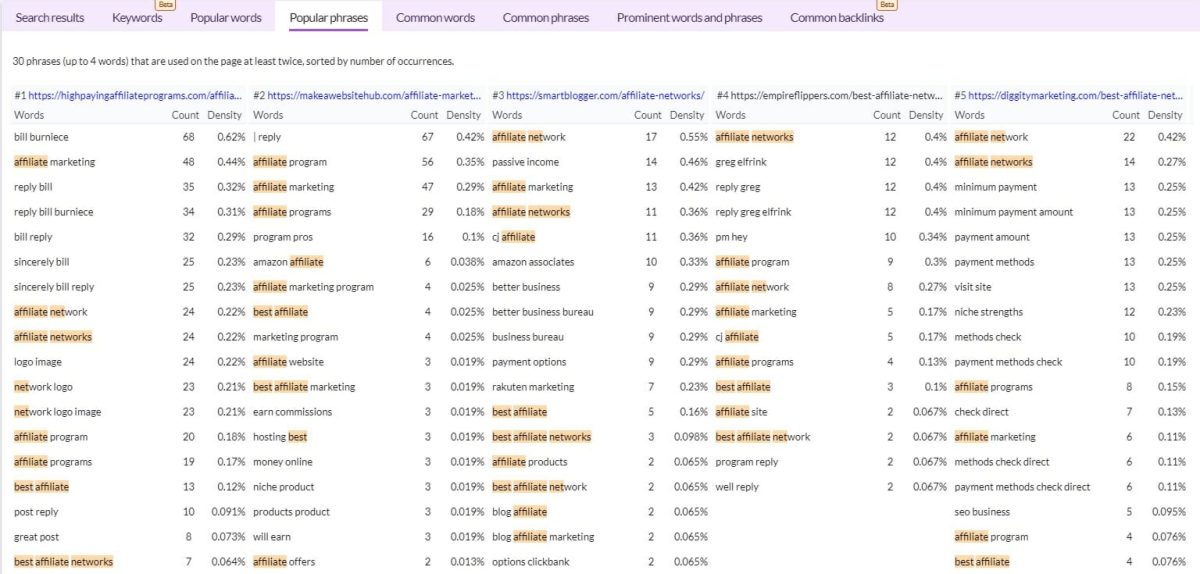

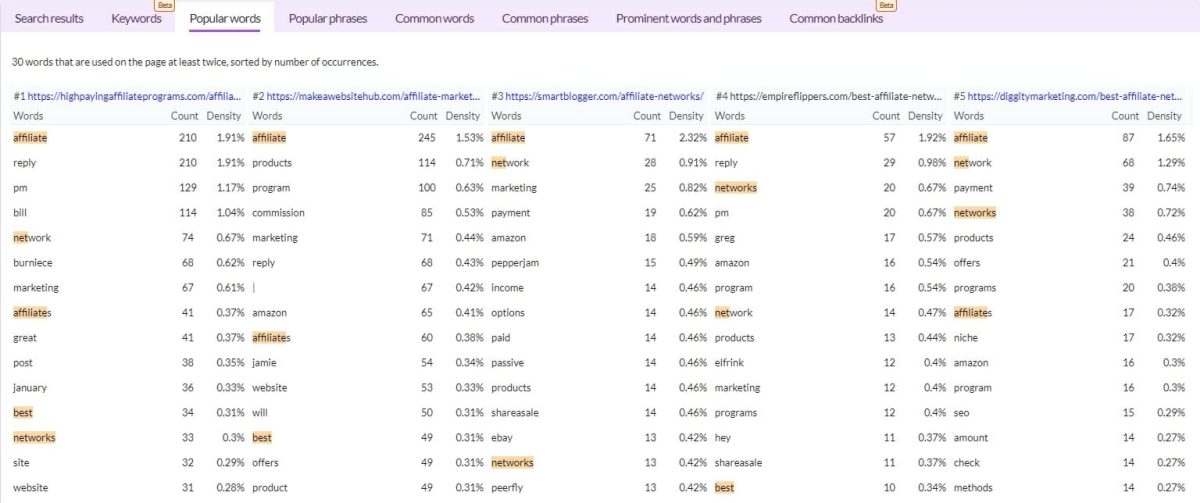

Let’s use an example keyword ‘best affiliate networks’ with 800 monthly searches in the US only.

My site is already doing well in top10, but it isn’t ranking number 1.

First, run Surfer for this keyword and set the target country as you prefer – in my case, USA.

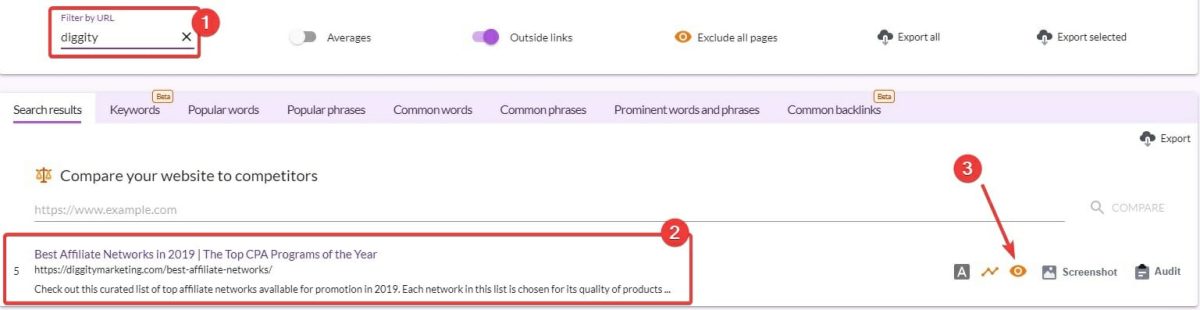

It will take a minute to run the analysis. Once it’s done, go to the report:

What you see here are:

- Ranking factors available – From the number of words on the page to the number of subheadings, each factor is measured. You can quickly see how much these factors matter for the top positions on page 1. Surfer will automatically calculate the correlation of each measurement which is presented as a little ‘signal strength’ icon next to each factor. High signal strength means that this factor is important for ranking in the top positions.

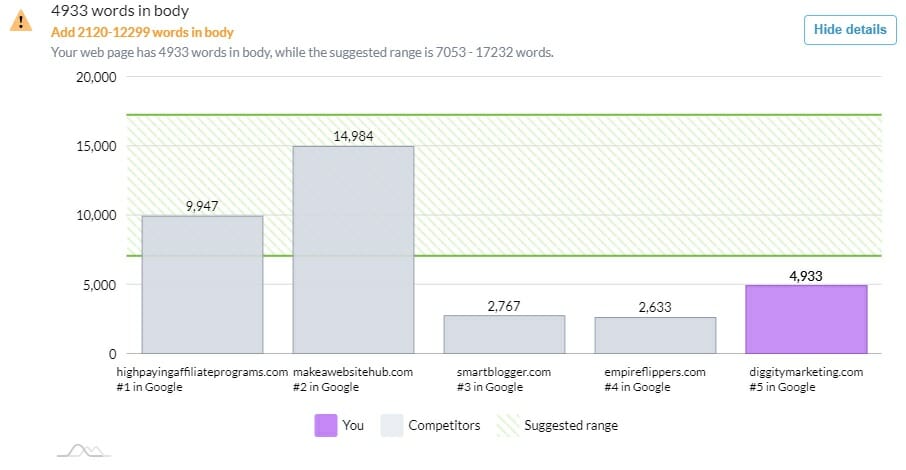

- Main chart for the selected metric – in this case, # of words in the body. As you can see from the graph, the results in the top 3 positions have significantly more words than the others.

- Chart settings – I like to have Surfer set to show the averages of 3 positions. This helps me better visually grasp the averages.

You may have noticed the line through the chart showing the value of 4933 words. It is an exact value for my site, which I enabled here:

- Type in your domain in the search field under the chart

- It should find it in the search results.

- Click the ‘eye’ icon to have it plotted in the chart.

****.

But, that’s not even the best part of Surfer SEO.

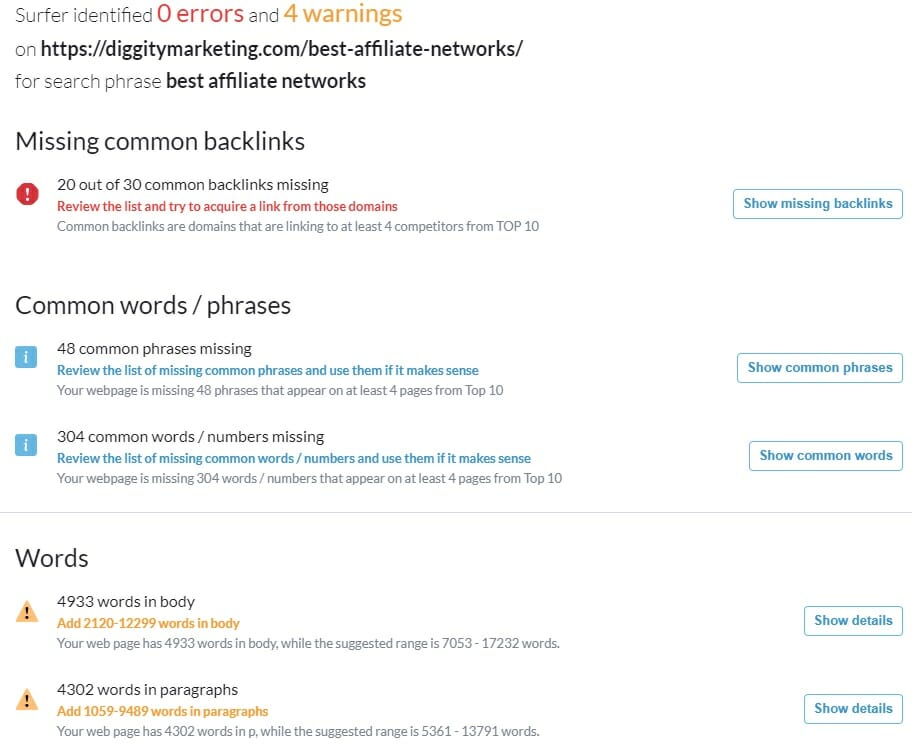

The Audit Feature – Surfer can audit your page based on the top 5 competitors and show you all the correlating factors analyzed for this page.

Here’s what it looks like for The Best Affiliate Networks page on my site:

(If you want to have a look at the full audit – it’s shared here)

I can now look at the recommendations in detail, such as the recommended number of words in the body:

The first 2 pages have significantly longer content. So first on the task list is to add some more words.

Before I go and do that, though, I’d want to make sure that I’m using the same common words and phrases as the competitors.

And you can see that here, in detail:

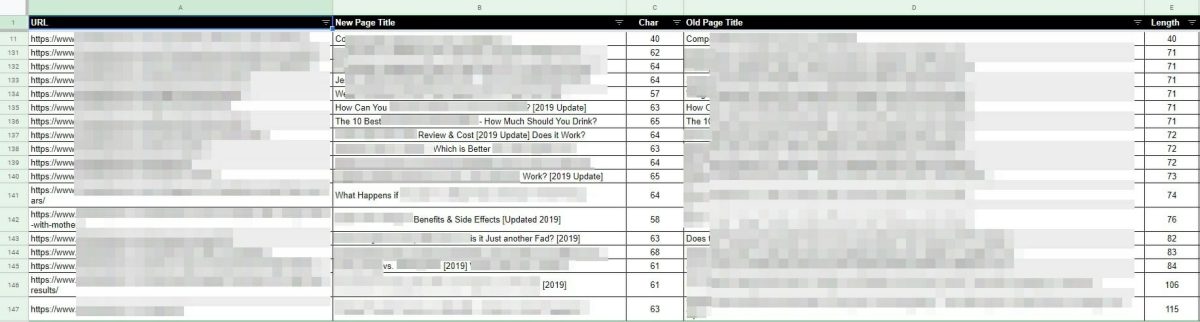

Page Titles & Meta Descriptions

Optimize your title tags to comply with the best SEO practices:

- Keep the main keyword phrase together and towards the front

- Keep the titles relatively short and to the point

- Include elements that will improve your CTR (https://ahrefs.com/blog/title-tag-seo/)

- When including a year (e.g. “Best XYZ in 2023”), remember the freshness algorithm (2007 and 2017 mentions – trust me, it’s still a thing) and make sure to keep it up-to-date

- Don’t overdo the clickbait – if you do, watch your bounce rates grow – this is bad!

- DSS: Don’t Spam Silly – obvious, right? Don’t double count keywords

- Make it unique

Make sure to fix missing, too short or too long meta descriptions as a quick win for your overall quality score.
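A few of these checks are easy to automate across a whole site. Below is a minimal sketch; the length thresholds are my own assumptions (not official limits), and the function name is hypothetical. Adjust the numbers to whatever your own auditing tool flags.

```python
TITLE_MAX = 60          # assumption: a rough cut-off for "relatively short"
DESCRIPTION_MIN = 70    # assumption: below this counts as "too short"
DESCRIPTION_MAX = 160   # assumption: above this counts as "too long"


def snippet_issues(title, description, keyword):
    """Return a list of problems found in a page title and meta description."""
    issues = []
    lowered_title = title.lower()
    lowered_keyword = keyword.lower()

    if len(title) > TITLE_MAX:
        issues.append("title too long")
    if lowered_keyword not in lowered_title:
        issues.append("keyword phrase missing from title")
    elif lowered_title.count(lowered_keyword) > 1:
        issues.append("keyword phrase repeated in title")

    if not description:
        issues.append("meta description missing")
    elif len(description) < DESCRIPTION_MIN:
        issues.append("meta description too short")
    elif len(description) > DESCRIPTION_MAX:
        issues.append("meta description too long")
    return issues


# Hypothetical page data.
print(snippet_issues("Best XYZ Devices in 2023", "", "best xyz devices"))
# prints ['meta description missing']
```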

Image size optimization

Optimize all important images with size over 100 KB by compressing and optimizing via ShortPixel. Your pages will now use less bandwidth and load faster.

Here’s the full summary from our ShortPixel optimization for this client:

- Processed files: 4,207

- Used credits: 3,683

- Total Original Data: 137.10 MB

- Total Data Lossless: 129.32 MB

- Overall improvement (Lossless): 6%

- Total Data Lossy: 88.48 MB

- Overall improvement (Lossy): 35%
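To find the images worth compressing in the first place, you can scan the uploads folder for files above the 100 KB mark. The sketch below is a hypothetical helper, not part of ShortPixel; the list of file extensions is an assumption. The second function simply reproduces the percentages from the summary above.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".gif"}  # assumption: formats to check
SIZE_LIMIT_BYTES = 100 * 1024                        # the 100 KB threshold


def oversized_images(root):
    """Return (path, size_in_bytes) for images above the limit, largest first."""
    found = []
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            size = path.stat().st_size
            if size > SIZE_LIMIT_BYTES:
                found.append((path, size))
    return sorted(found, key=lambda item: item[1], reverse=True)


def savings_percent(original_mb, optimized_mb):
    """Percentage of data saved by the optimization."""
    return (original_mb - optimized_mb) / original_mb * 100


print(f"{savings_percent(137.10, 129.32):.0f}%")  # prints "6%"
print(f"{savings_percent(137.10, 88.48):.0f}%")   # prints "35%"
```

On a WordPress site you would typically point `oversized_images` at the uploads directory (for example `wp-content/uploads`) and work through the list from the top.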

Alt Texts

Each image should have an alt text – this is a dogma I don’t always go by, because what can go wrong when missing an odd alt tag?

However, alt text isn’t just something to have – it’s also a factor that can help Google understand what the image shows. It also adds crucial relevancy signals to your content.

Besides, we’re doing “all the things”, aren’t we?

In this case, we optimized images by adding more descriptive, natural alt texts. Additionally, we made sure all the images had them.

Site Structure Optimization

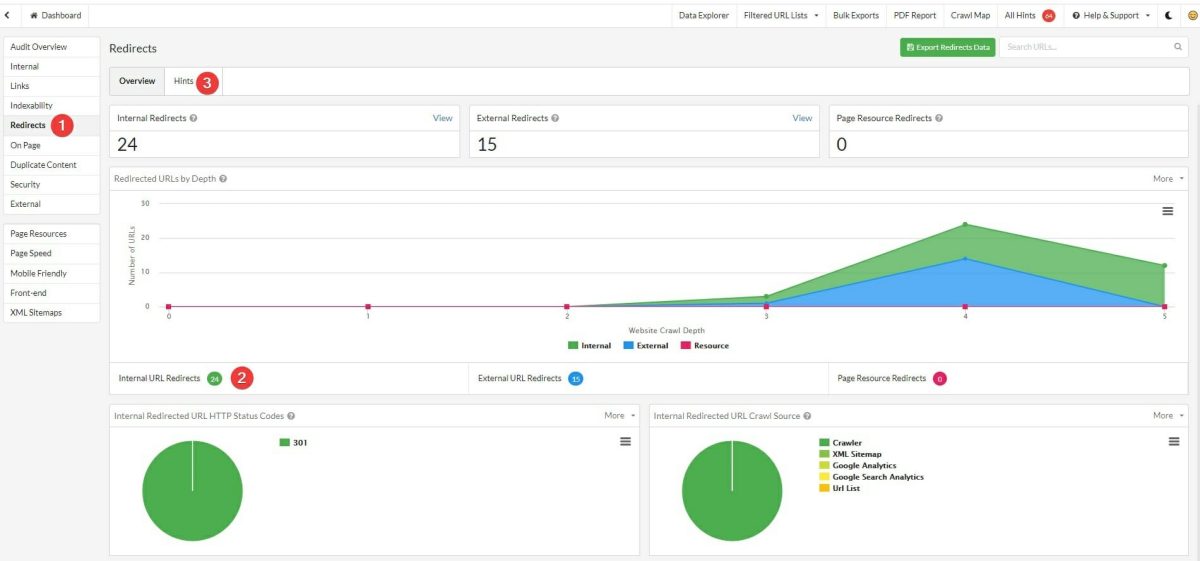

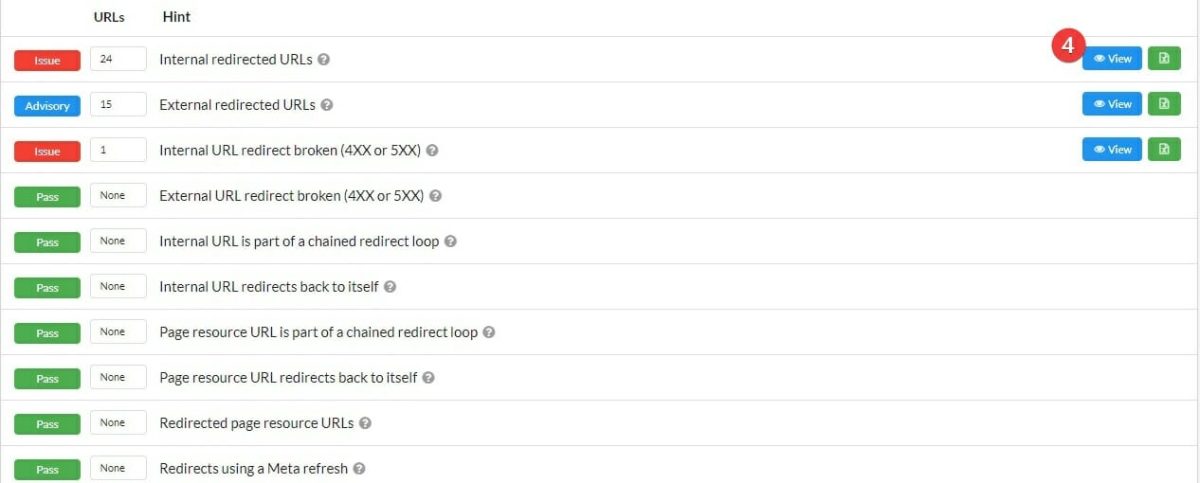

Internal Link Repair

We found many internal links that were simply incorrect.

They were perhaps linking to a page that had been 301 redirected (sometimes multiple times).

Other times they were linking to dead pages (404s).

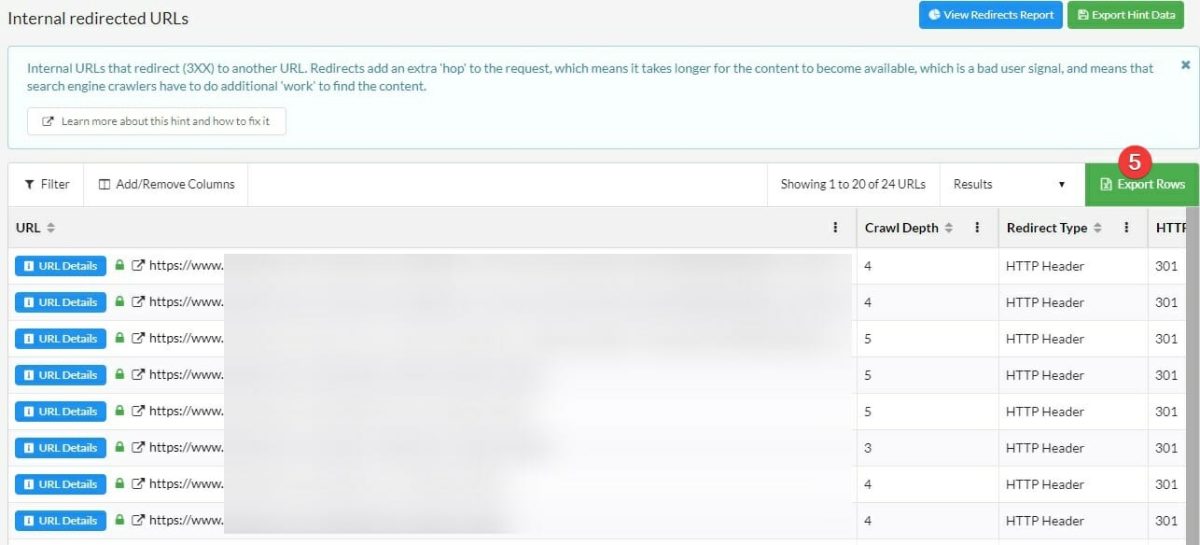

To find them, we used one of our favorite crawlers – Sitebulb:

1. Go to the audit, then Redirects

2. Look at the number of internal redirects

3. Open “Hints”

4. From the list of hints, you can now see all the internal redirects

5. Use the Export Rows button to export the data to Excel

We then fixed the broken links and updated them to point to the correct pages.
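Once you have the export, working out where each link should point can be scripted. The sketch below assumes you have turned the export into a simple redirect map (old URL to new URL) and a set of 404 URLs; the function names and sample paths are hypothetical. It follows redirect chains to their final destination, so links that were redirected multiple times end up pointing straight at the live page.

```python
def resolve_target(url, redirects, max_hops=10):
    """Follow the redirect map until reaching a URL that no longer redirects."""
    seen = {url}
    for _ in range(max_hops):
        if url not in redirects:
            return url
        url = redirects[url]
        if url in seen:
            raise ValueError(f"redirect loop at {url}")
        seen.add(url)
    raise ValueError("too many redirect hops")


def link_fixes(internal_links, redirects, dead_urls):
    """Map each problematic link target to its replacement.

    Redirected targets map to their final destination; dead (404) targets
    map to None because they need a manual decision.
    """
    fixes = {}
    for target in internal_links:
        if target in dead_urls:
            fixes[target] = None
        elif target in redirects:
            fixes[target] = resolve_target(target, redirects)
    return fixes


# Hypothetical crawl data.
redirects = {"/old-guide/": "/newer-guide/", "/newer-guide/": "/xyz-guide/"}
links = ["/old-guide/", "/deleted-post/", "/xyz-guide/"]
print(link_fixes(links, redirects, {"/deleted-post/"}))
# prints {'/old-guide/': '/xyz-guide/', '/deleted-post/': None}
```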

Broken External Links

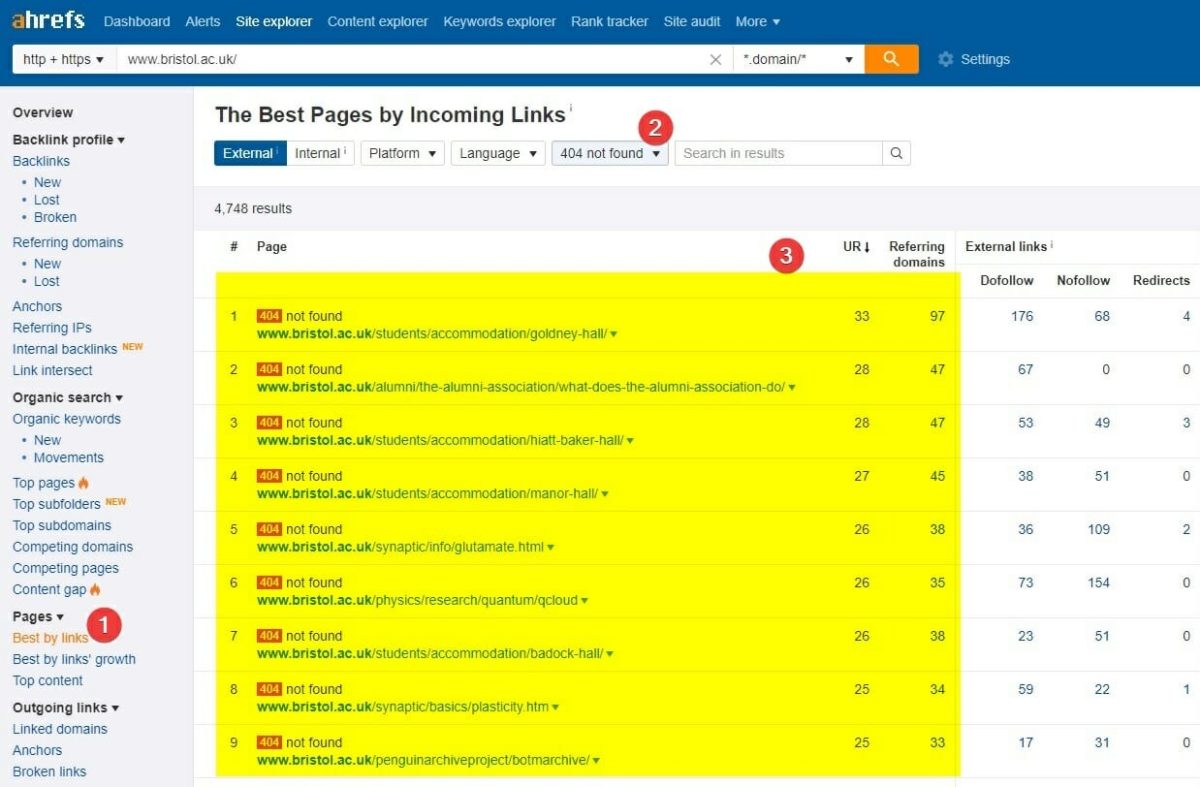

We’ve found broken URLs that had natural links coming to them.

In case of pages with a 404 status code, a link going to a page like that is a dead end which doesn’t pass any authority or SEO value to your actual pages.

You don’t want to waste that!

Here’s where to find them in Ahrefs:

- Go to Pages → Best by links

- Then select the desired status code (in our case – 404)

- The list is there!

You can sort it by the number of referring domains (in descending order), so you can see the most important ones at the top.

After that, create a redirect map with most appropriate destinations and permanently (301) redirect them to regain the lost link equity.
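A redirect map like that can be turned into server rules with a few lines of code. The sketch below assumes an Apache server (it emits `Redirect 301` lines for mod_alias) and uses made-up paths and referring-domain counts; the destinations themselves still have to be picked by hand.

```python
def redirect_rules(broken_pages, destinations):
    """Build Apache-style 301 rules, most-linked broken URLs first.

    broken_pages maps a 404 path to its number of referring domains;
    destinations maps a 404 path to the page it should redirect to.
    """
    ordered = sorted(broken_pages.items(), key=lambda item: item[1], reverse=True)
    rules = []
    for path, _referring_domains in ordered:
        destination = destinations.get(path)
        if destination:  # skip URLs that have no destination chosen yet
            rules.append(f"Redirect 301 {path} {destination}")
    return rules


# Hypothetical data, for illustration only.
broken = {"/old-review/": 12, "/discontinued-product/": 3, "/typo-url/": 7}
chosen = {"/old-review/": "/reviews/new-review/", "/typo-url/": "/guides/xyz/"}
for rule in redirect_rules(broken, chosen):
    print(rule)
```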

Technical SEO

Page Speed Optimization

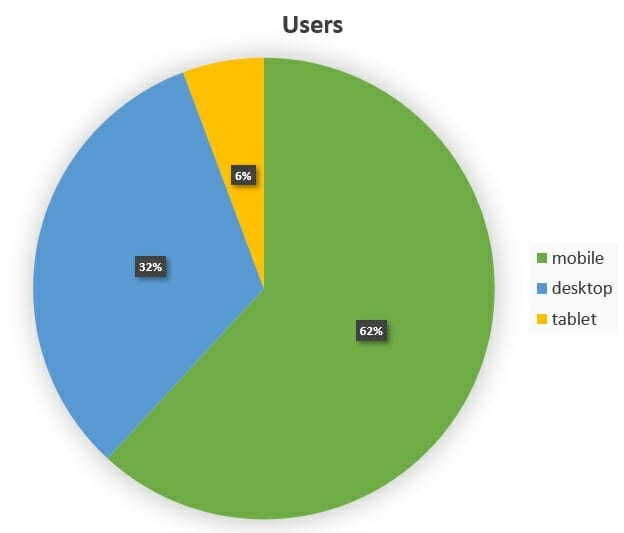

Here’s our client’s traffic breakdown by device type:

Seeing values like that, you HAVE TO focus on your page speed metrics. It’s not a ‘maybe’; it’s an absolute must.

Chances are, your site and niche are the same.

Before:

After:

What we did – the same answer: EVERYTHING

- Minified Javascript.

- Minified CSS.

- Minified HTML.

- Introduced lazy loading for images, videos, and iframes.

- Combined small CSS and Javascript files to save the round trip times.

- Improved time to first byte (TTFB).

- Optimized images.

- Introduced .webp image formats wherever possible.

- Introduced critical path CSS.

- Made above the fold virtually non-blocking.

- Introduced asynchronous loading of external resources where possible.

- Introduced static HTML cache.

- Introduced CDN.

For most of it, these plugins were absolutely priceless:

- WP Rocket – this one takes care of a lot of things from the above list.

- ShortPixel – very effective image optimization tool.

- Cloudflare – decent CDN offering a free option.

Google Index Coverage

This one was usually approached in two ways:

- Removing unwanted URLs from Google index.

- Reviewing all Errors, Warnings and Excluded lists in Google Search Console.

Google actually has a fairly decent guide to all of the issues shown in the screenshot below:

https://support.google.com/webmasters/answer/7440203?hl=en

You definitely need to look at all the errors and warnings – fixing them is a no-brainer.

However, at The Search Initiative, we’re literally obsessed with these ones:

- Crawl anomaly – these URLs are not indexed, because they received a response code that Google did not expect. We figured that any URLs here would hint to us that Google may not fully understand where they are coming from and it is not able to predict the site’s structure. Therefore, it may cause crawling and indexing issues (like messed up crawl schedule).

- Soft 404 – these URLs are treated by Google the same way as normal 404s, but they do not return 404 code. Quite often Google happens to include some money pages under this category. If it happens, it’s really important for you to figure out why they are being treated as ‘Not Found’.

- Duplicate without user-selected canonical – I hate these the most. These are pages that Google found to be duplicated. Surely you should implement a canonical, a 301 redirect, or just update the content if Google is directly telling you that they’re duplicates.

- Crawled – currently not indexed – these are all URLs which Google crawled and hasn’t yet indexed.

There are plenty of reasons for this to happen:

- The pages aren’t properly linked internally

- Google doesn’t think they should be indexed as a priority

- You have too many pages and there’s no more space in the index to get these in. (Learn how to fix your crawl budget)

Whatever it is, for a small site (<1,000 pages) this category should be empty.

- Duplicate, submitted URL not selected as canonical – this section includes all URLs for which Google does not agree with your canonical selection. They might not be identical or Google just found them to be useful if indexed on their own.

Investigate and try to drive the number of them to an absolute minimum.

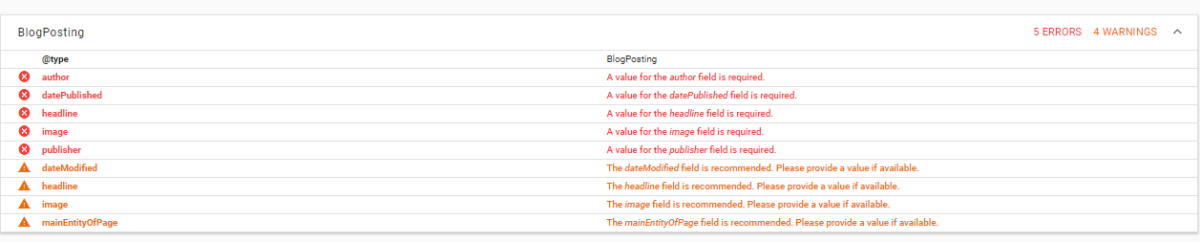

Structured data

Along the way, we had some issues with existing microdata.

You can figure out if you have issues by tossing your page into Google’s Structured Data Testing Tool:

To fix these, we had to remove some markup from the header.php file in WordPress.

We also removed the comment markup from the posts – the posts did not have comments enabled anyway.

After fixing the issues… we decided to implement review markup for the review pages.

The team tested a few plugins (including kk Star Ratings which is dead easy to implement), but we ended up implementing JSON-LD markup ourselves.
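To give you an idea of what hand-written review markup involves, here is a minimal sketch that builds a schema.org `Review` object as JSON-LD. It is an illustration with placeholder values, not the exact markup we implemented, and it doesn’t cover Google’s eligibility rules for review rich results.

```python
import json


def review_jsonld(product_name, rating, author, best_rating=5):
    """Build schema.org Review markup for a single product review page."""
    data = {
        "@context": "https://schema.org",
        "@type": "Review",
        "itemReviewed": {"@type": "Product", "name": product_name},
        "reviewRating": {
            "@type": "Rating",
            "ratingValue": rating,
            "bestRating": best_rating,
        },
        "author": {"@type": "Person", "name": author},
    }
    return json.dumps(data, indent=2)


# Placeholder values, for illustration only.
review_markup = review_jsonld("XYZ Supplement", 4.5, "Jane Doe")
print(f'<script type="application/ld+json">\n{review_markup}\n</script>')
```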

My next blog post will be all about schema markup for affiliate sites so come back for that soon.

Usability

Google Search Console reported tons of site errors on desktop and small screen devices.

These need to be reviewed, page by page.

Typically these are responsive design issues which can be fixed by moving elements around (if they are too close), increasing/decreasing font sizes and using Sitebulb’s mobile-friendly suggestions.

All errors reported in GSC were marked as fixed. Then we monitored if any would reappear.

Crawlability

All website sections and files that did not have to be crawled were blocked from the search engine bots.
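Assuming the blocking is done through robots.txt, you can sanity-check your rules before deploying them with Python’s built-in `urllib.robotparser`. The rules and paths below are hypothetical examples, not the client’s actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules.
ROBOTS_TXT = """\
User-agent: *
Disallow: /go/
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(path, agent="Googlebot"):
    """Check whether the given path may be crawled under the rules above."""
    return parser.can_fetch(agent, f"https://www.example.com{path}")


print(is_crawlable("/go/xyz-offer"))     # prints False
print(is_crawlable("/best-xyz-devices/"))  # prints True
```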

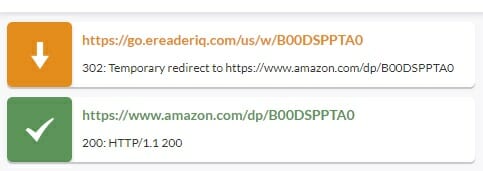

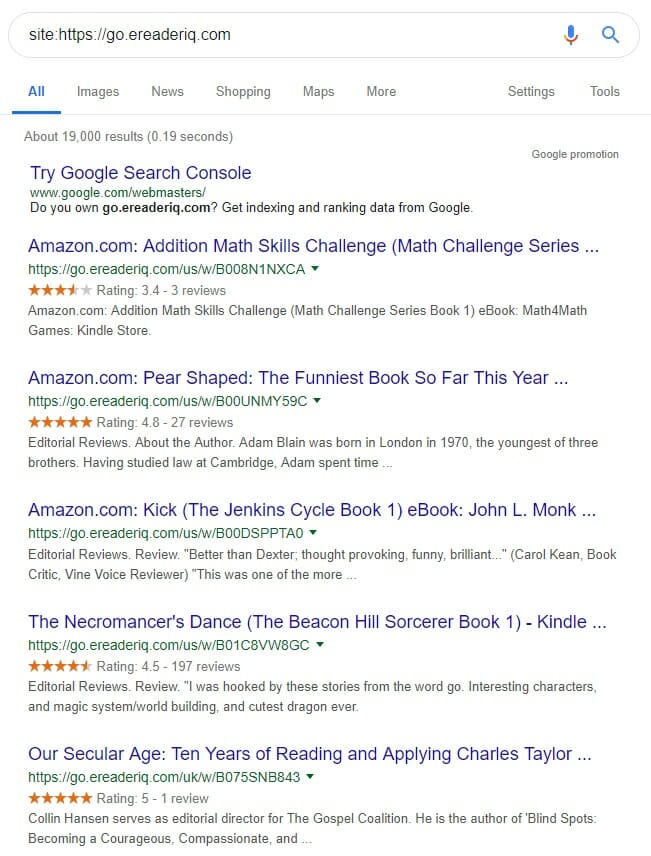

One of the biggest issues we encountered during the early stage of auditing was the fact that all of the affiliate links were using a 302 redirect.

Here’s an example of what it looks like in Chrome using the Redirect Path extension on a randomly picked site:

If you’ve been in the affiliate world for quite a while, you know that ‘friendly affiliate links’ (like /go/xyz-offer) usually work slightly better than the ugly ones (like https://go.shareasale.com/?aff=xyz&utm_source=abc). This is especially true when you’re not linking to a big, well-known site like Amazon.

Also, affiliate programs always use some sort of a redirect to set a cookie, in order to inform them that the commission should be attributed to you.

This is all OK, but…

What is not OK?

Don’t use Pretty Links with a 302 redirect.

Never, never, ever, ever use 302 redirects, ever. What-so-ever!

This is simply an SEO sin!

What 302 redirects do… they make Google index the redirecting URL under your domain. Additionally, Google can then attribute all the content from the page you are pointing your redirect at – right back to your redirecting page.

It then looks like this under your site:

Guess what happens with all this content under YOUR domain?

Yes, you’re right – it’s most likely treated as duplicate content!

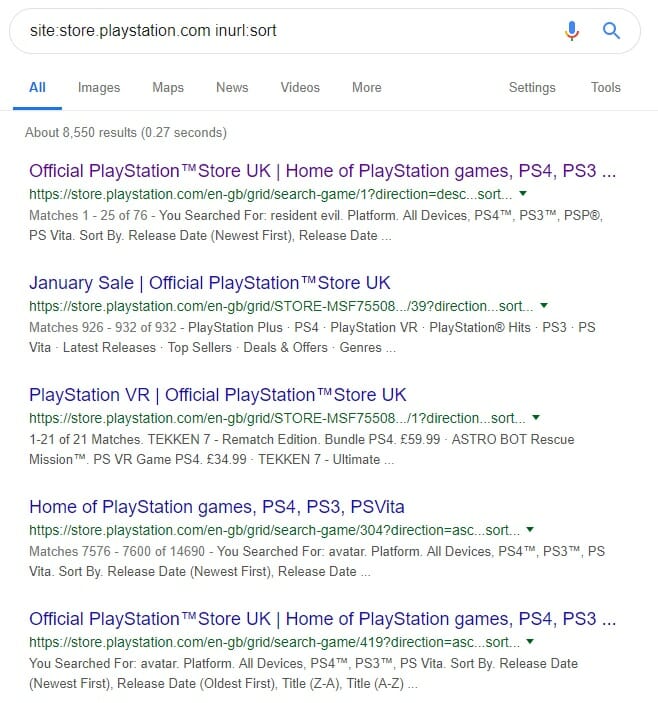

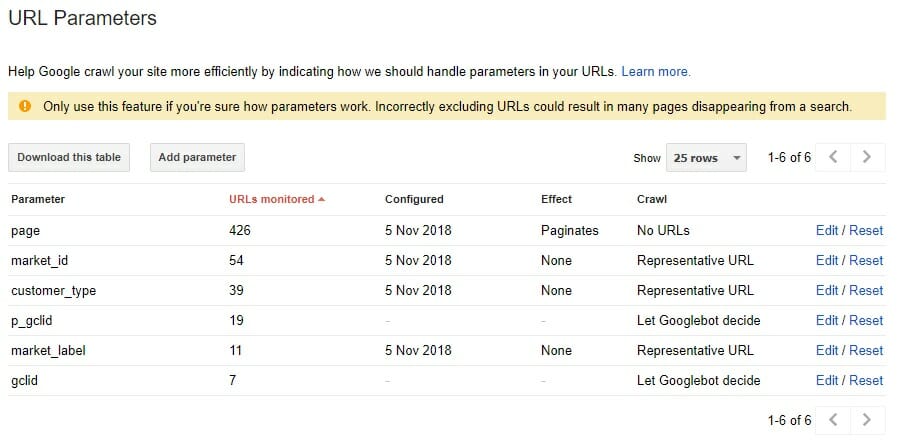

Reconfigure URL parameters in GSC

Configuring your URL params is a great way to allow Google to better know what’s going on on your website.

You’d want to do it when you have certain pages (especially in high numbers) that are noindexed and Google should know straight up that there is no point indexing them.

Say, for example, you are an ecommerce website and your categories use the “sort” URL param to define the sort order (best selling, newest, alphabetical, price, etc). Like Playstation Store here:

You can tell Google straight up that it doesn’t need to index (and crawl) these URLs.

Here is how you do it in Google Search Console:

Go to (old) GSC → Crawl → URL Parameters and you should see something like in the below screenshot.

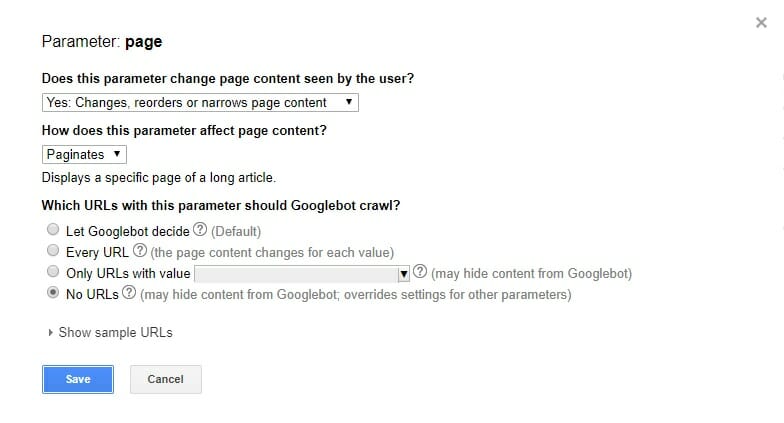

To amend any of them, click edit and a small pop-up will appear – similar to the one shown below.

All the available configuration options are:

- Does this parameter change page content seen by the user?

  - Yes: Changes, reorders or narrows page content

  - No: Doesn’t affect page content (e.g. tracks usage)

- How does this parameter affect page content?

  - Sorts

  - Narrows

  - Specifies

  - Translates

  - Paginates

  - Other

- Which URLs with this parameter should Googlebot crawl?

  - Let Googlebot decide – I wouldn’t use this one unless you’re 100% sure that Google will figure it out for itself. (Doubt it…)

  - Every URL

  - Only URLs with value

  - No URLs

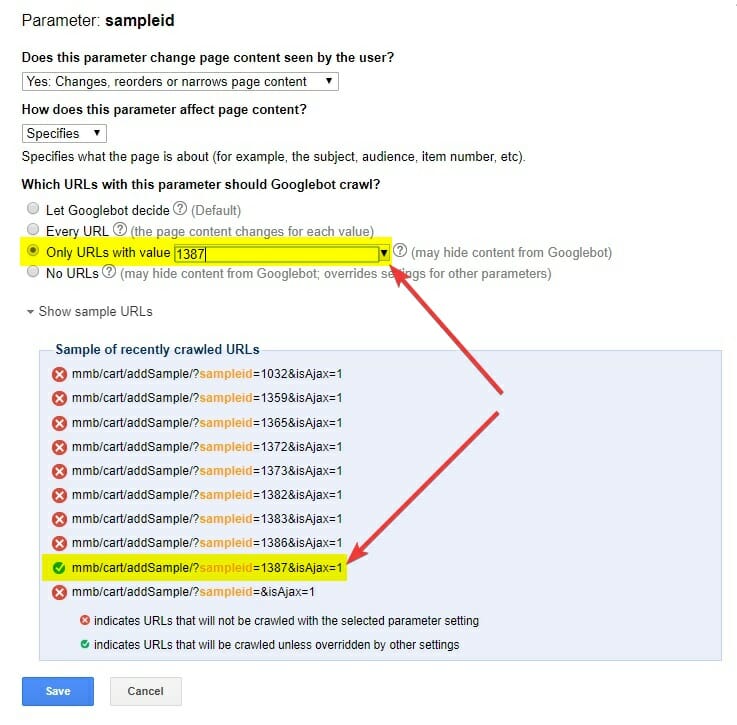

Don’t forget to have a look at the examples of the URLs Google is tracking with each parameter. The options you select in the form determine which URLs will or will not get indexed.
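Before you touch these settings, it can help to see which parameters actually appear across your own URLs. Here is a minimal Python sketch, assuming you have exported a list of URLs from a crawl or your server logs. The function name and the example.com URLs are hypothetical, and this only summarizes your own data. It does not talk to Google Search Console.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlsplit


def summarize_params(urls):
    """Summarize query parameters across a list of URLs.

    Returns {param_name: {"urls": count of URLs using it,
                          "values": set of distinct values seen}}.
    """
    summary = defaultdict(lambda: {"urls": 0, "values": set()})
    for url in urls:
        seen_in_this_url = set()
        query = urlsplit(url).query
        for name, value in parse_qsl(query, keep_blank_values=True):
            summary[name]["values"].add(value)
            # Count each URL once per parameter, even if it repeats.
            if name not in seen_in_this_url:
                summary[name]["urls"] += 1
                seen_in_this_url.add(name)
    return dict(summary)


if __name__ == "__main__":
    # Hypothetical category URLs using a "sort" parameter.
    crawl = [
        "https://example.com/games?sort=price",
        "https://example.com/games?sort=newest",
        "https://example.com/games?sort=price&page=2",
        "https://example.com/games",
    ]
    for name, info in sorted(summarize_params(crawl).items()):
        print(name, info["urls"], sorted(info["values"]))
```

A parameter that shows up on many URLs but only reorders the same content, like “sort” in the example, is usually the first candidate to review.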

Here’s an example from another client site, where we wanted only one specific value of the sampleid parameter indexed:

Putting it into Action

The above list of action items is diverse and comprehensive. Conveniently, The Search Initiative team is well set up to meet the requirements of a project like this.

With the use of our project management software – Teamwork – we create a game plan and quickly roll out the work.

Here’s a screenshot of an example campaign where there are a lot of moving SEO parts involved concurrently:

When it comes to auditing and implementation, having high standards is the key. Solving a problem with a mediocre job is worse than fixing a less important issue correctly.

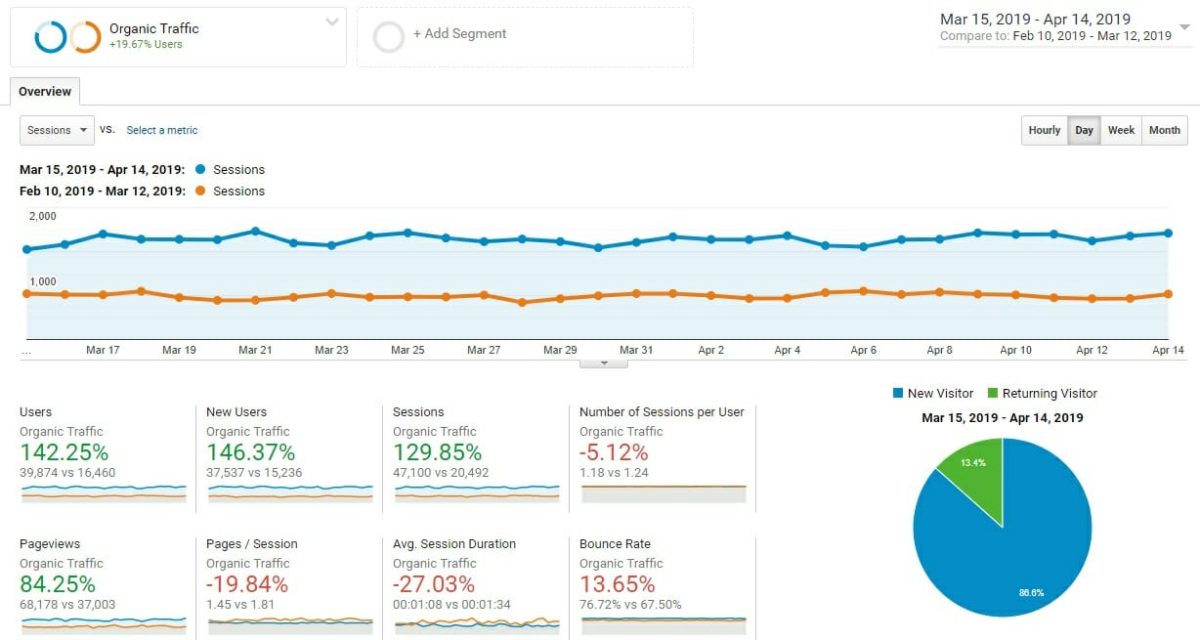

The Results: Algorithmic Recovery

So… What does returning to the SERPs look like?

After a series of cycles of this iterative approach, the client was well set up for gains the next time the algorithm rolled out.

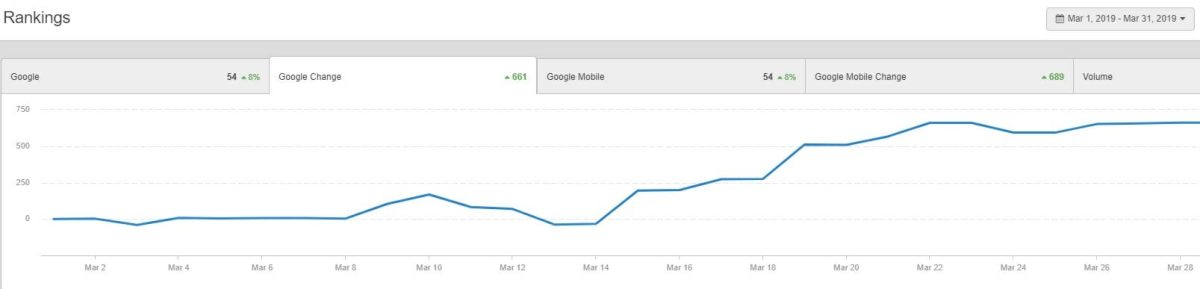

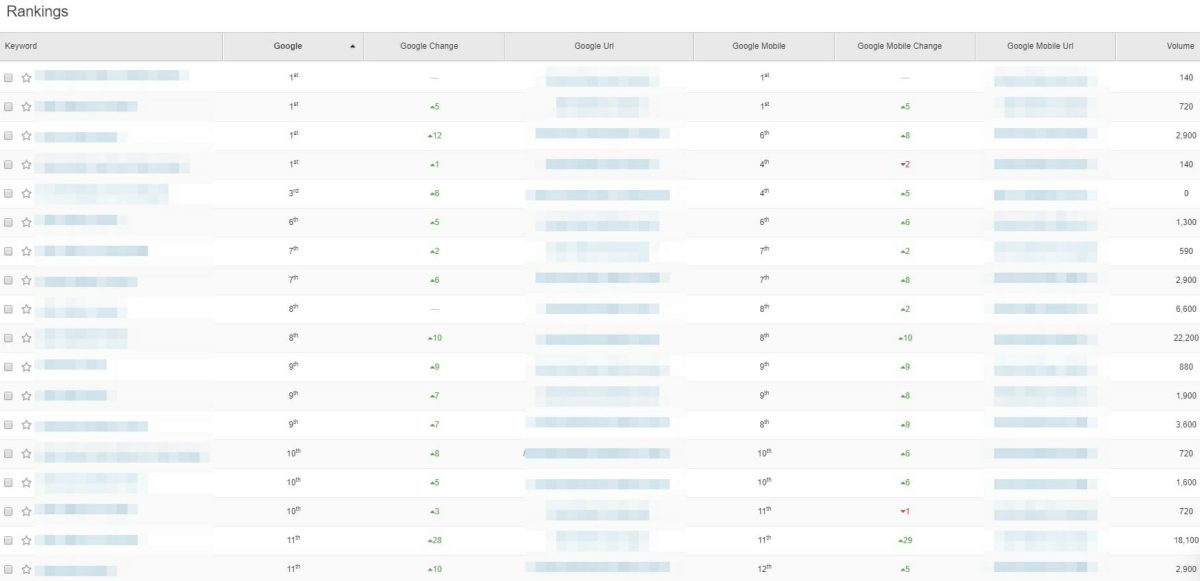

This increase in keywords happened over a one-week period:

And we saw rankings increase across the board: keywords jumped to the top positions, new keywords started ranking from nowhere… everything.

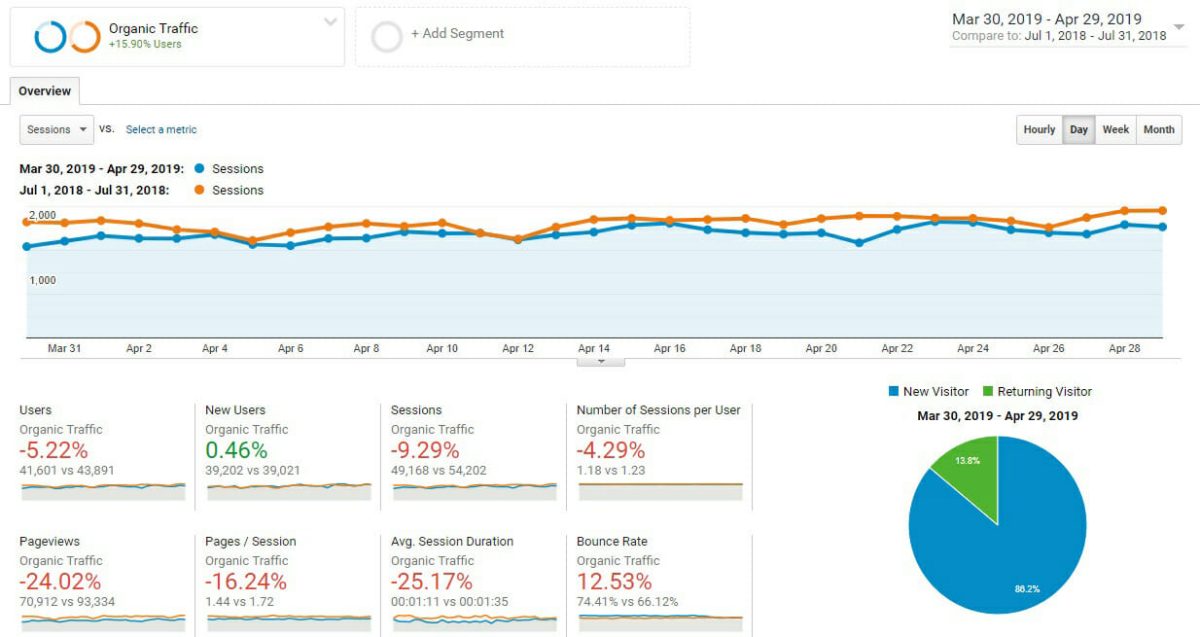

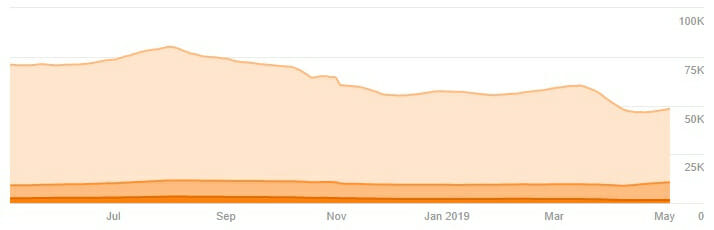

Here are the results, comparing the period before the Medic update with the period after the March update. We’re caught up and in a good position to hit record traffic next month.

And here’s the Ahrefs graph to visualize the fall and rise:

In the above screenshot I mention the Compatibility Stage on purpose, because every project at TSI is split into three stages of roughly four months each:

The Google Compatibility Stage – typically the first 3-4 months.

It involves us setting the foundations of the campaign, prepping the site for more significant increases in results.

Or, like in this case, we do all we can to regain the traffic after any unpleasant surprises from Google.

The Google Authority Stage – for an average campaign, this stage occurs in months 5-8.

Here we begin targeting higher competition keywords.

The Enhanced Google Authority Stage – usually eight months to a year.

This is when we leverage the authority already established by increasing the number of pages ranking in the high positions. We also optimize pages for further conversions and revenue increases.

It took a few months to fully benefit from the work that we had put into the campaign. Many site owners are not so patient and don’t always want to wait until this glorious moment.

But that’s a good thing. It makes things easier for those of us that stick it out.

In our case, the recovery coincided with a second major algorithm update on March 12th.

Of course, you may read this and say: “Hey, this is the algorithm rolling back. You could have done nothing and gotten these gains.”

Here are the client’s competitors who did nothing:

Competitor #1

Competitor #2

Competitor #3

Summary

As the SEO world evolves and Google gets more and more sophisticated, these core quality updates will inevitably become more frequent.

This case study gave you first-hand insight from The Search Initiative into how to set your site up to actually benefit from future algorithmic updates.

- You have learned about Google’s numerous changes and periodic algo updates.

- You’ve also found out about the various steps of the process of auditing and improving your site.

- But, most importantly, you have seen that hard work, thorough analysis, and long term commitment always pay off.

While the SEO strategies employed are vital to the continued success of any campaign, there is one more important piece – the human element.

If you don’t stick to the plan and stay patient, you’re not likely to see the fruits of your work.

Despite the burning desire to quit, you should always be ready to …

… BE PATIENT AND FIX ALL THE THINGS.
