ChatGPT is undeniably a remarkable tool for generating coherent and contextually relevant text, particularly when it draws on the vast array of information available up to its training cut-off in January 2022. Its strength lies in its ability to synthesize information and respond to prompts, showing an impressive understanding of diverse topics. However, it has limitations when it comes to incorporating proprietary or dynamic data from a company’s internal systems, such as Sitecore, where an organization’s content, taxonomy, and page and layout definitions are stored.

Both OpenAI and Azure OpenAI Service allow organizations to upload additional data for the language model to reference, but this approach does not work well with large, dynamic datasets. The sheer volume and fluidity of the information make it difficult to keep the model up to date and can hinder its ability to provide accurate, current responses. This would not be a viable way to make Sitecore data available to prompts: not only is there a large amount of data, but that data changes as often as content authors update and publish content. Keeping GPT apprised of it would not scale well.

One approach to solving this problem is to provide the prompt with contextual data before asking the model to generate content. The language model can then reference that data when generating the output you are looking for. With this idea in mind, I built a proof of concept that provides a ChatGPT model with contextual data from Sitecore so that we can ask questions about it.

Creating a Sitecore Context for ChatGPT

The first problem you face when providing contextual information from Sitecore is deciding what information to provide. You could try to provide everything, but where do you stop? You can provide details about a Sitecore item, its fields and values. You can look at all the linked items and their fields and values. You can look at renderings and their parameters, or at template details and standard values. While sending a full data dump every time you open a new prompt is possible, most of that data would not be relevant to your question, and you would end up wasting tokens and increasing your API bill for very little benefit.

The data you need to provide really depends on the question you want to ask. So instead of a one-size-fits-all approach to providing context to ChatGPT, I set out to make it possible to define prompts that depend on a custom context, which can be whatever you need it to be. That context needs to be customizable and flexible so that you provide only the data needed for that particular prompt.

To make this work, I used my favorite architectural pattern that Sitecore provides: pipelines. By defining a custom pipeline for creating a prompt context, we can build reusable prompt processors that produce context content for a chat session. These processors can fetch details about an item, traverse the Sitecore tree, or even read files from the file system.

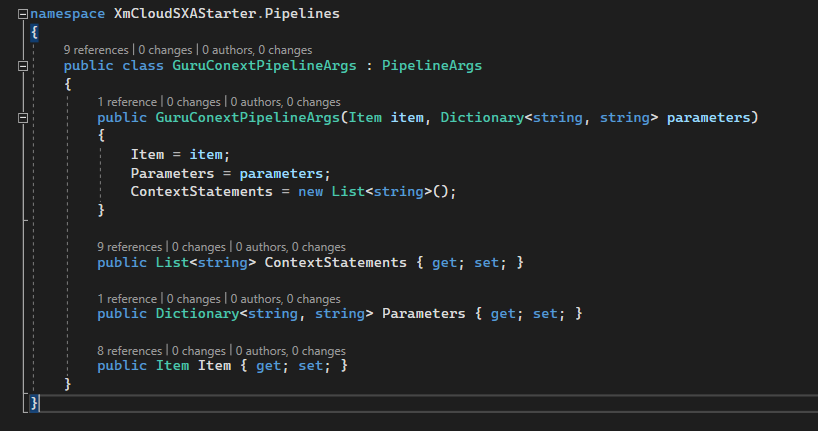

Each processor receives an arguments class that gives it what it needs to do its work, along with a way to return context statements. For this I created a custom PipelineArgs class, which is instantiated before the pipeline is called and carries the Sitecore item to analyze plus any configuration the processors need. Each processor can use those inputs and then add one or more statements to the “ContextStatements” string collection, which is then passed to the chat as system messages.

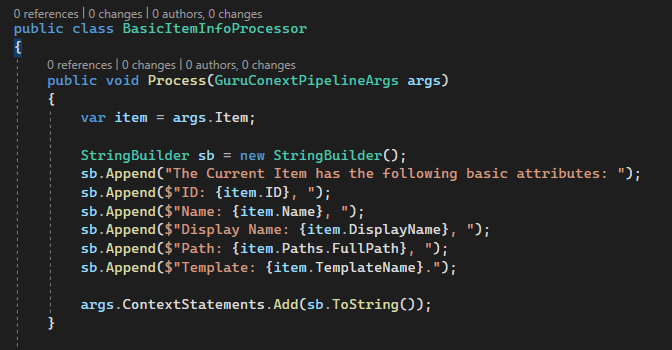
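To make the flow concrete, here is a minimal TypeScript sketch of the same idea, assuming a simplified item shape. It is not the module’s C# code: `SitecoreItem`, `PromptContextArgs`, `runPipeline` and `toSystemMessages` are hypothetical names. It shows processors running in order against one shared args object, each appending to a `contextStatements` list, and those statements then becoming system messages.

```typescript
// Hypothetical TypeScript model of the C# pipeline described above;
// none of these names are Sitecore APIs.

// Simplified view of a Sitecore item (an assumption for this sketch).
interface SitecoreItem {
  id: string;
  name: string;
  templateName: string;
  path: string;
  fields: Record<string, string>;
}

// Plays the role of the custom PipelineArgs class: inputs plus an output list.
interface PromptContextArgs {
  item: SitecoreItem;
  // Settings from the pipeline configuration item, e.g. Temperature.
  parameters: Record<string, string>;
  // Each processor appends one or more statements here.
  contextStatements: string[];
}

type ContextProcessor = (args: PromptContextArgs) => void;

// Runs the processors in their configured order against one shared args object.
function runPipeline(
  processors: ContextProcessor[],
  item: SitecoreItem,
  parameters: Record<string, string> = {},
): string[] {
  const args: PromptContextArgs = { item, parameters, contextStatements: [] };
  for (const processor of processors) {
    processor(args);
  }
  return args.contextStatements;
}

// Each context statement becomes a system message placed before the user's prompt.
function toSystemMessages(statements: string[]): { role: "system"; content: string }[] {
  return statements.map((content) => ({ role: "system" as const, content }));
}
```

Because every processor sees the same args object, a later processor can also read what an earlier one added, which is how Sitecore pipelines typically share state between steps.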

Processors can be as specific as needed and can be reused across pipelines that build different contexts for different prompts. Here is a simple one that adds basic details about the item to the ContextStatements collection:

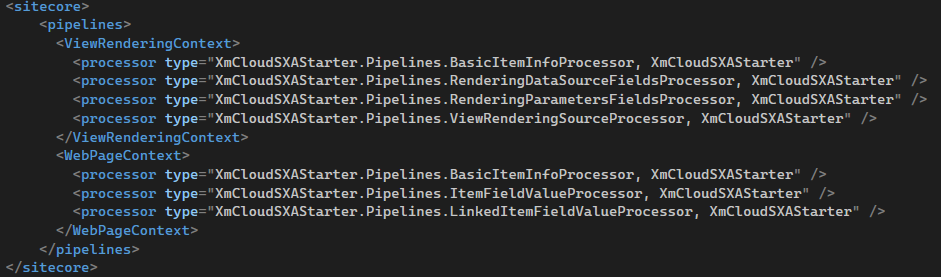
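A rough TypeScript equivalent of such a processor might look like the following. The item shape and the wording of the statement are assumptions for illustration; the idea is that one short sentence per fact keeps the context cheap in tokens.

```typescript
// Minimal types redeclared so the sketch stands alone (assumed shapes).
interface SitecoreItem {
  id: string;
  name: string;
  templateName: string;
  path: string;
}

interface PromptContextArgs {
  item: SitecoreItem;
  contextStatements: string[];
}

// Hypothetical "basic item info" processor: adds one compact statement
// describing the item's name, ID, template and location in the tree.
function basicItemInfoProcessor(args: PromptContextArgs): void {
  const { id, name, templateName, path } = args.item;
  args.contextStatements.push(
    `The Sitecore item "${name}" (ID ${id}) is based on the "${templateName}" template and is located at ${path}.`,
  );
}
```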

Once you have a set of processors, you configure a pipeline by listing each processor in the order you want them to execute. Here is the configuration for two different context pipelines: one for basic content details, and one that provides details about a rendering, assuming the selected item is a rendering definition.

As you can see, both pipelines use the same basic item info processor, but the WebPageContext pipeline also adds the ItemFieldValueProcessor and LinkedItemFieldValue processor to include details about the web page’s fields and any data sources linked to it. The ViewRenderingContext pipeline uses processors that get details about the rendering definition’s data source template and rendering parameters, and it will even pull the MVC .cshtml source file from disk.
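The real configuration lives in a Sitecore config file, but the underlying idea, named pipelines as ordered lists of reusable processors, can be sketched in TypeScript. In this sketch the processor keys are shorthand, and every processor body is a placeholder or a simplified stand-in; only the two pipeline names come from the post.

```typescript
// Simplified processor signature for this sketch: read the item, append statements.
interface ItemInfo {
  name: string;
  fields: Record<string, string>;
}

type ContextProcessor = (item: ItemInfo, statements: string[]) => void;

// Placeholder implementations; real processors would query Sitecore or the file system.
const processorRegistry: Record<string, ContextProcessor> = {
  BasicItemInfo: (item, s) => {
    s.push(`Item name: ${item.name}`);
  },
  ItemFieldValue: (item, s) => {
    for (const [field, value] of Object.entries(item.fields)) {
      s.push(`Field "${field}": ${value}`);
    }
  },
  LinkedItemFieldValue: () => { /* would follow link and data source fields */ },
  DataSourceTemplate: () => { /* would describe the data source template */ },
  RenderingParameters: () => { /* would list rendering parameters */ },
  ViewFileSource: () => { /* would read the .cshtml file from disk */ },
};

// Ordered processor lists, analogous to the processor entries in the config file.
const pipelineConfig: Record<string, string[]> = {
  WebPageContext: ["BasicItemInfo", "ItemFieldValue", "LinkedItemFieldValue"],
  ViewRenderingContext: ["BasicItemInfo", "DataSourceTemplate", "RenderingParameters", "ViewFileSource"],
};

// Resolves a pipeline name to its processors, failing loudly on typos in the config.
function resolvePipeline(name: string): ContextProcessor[] {
  const names = pipelineConfig[name];
  if (!names) throw new Error(`Unknown context pipeline: ${name}`);
  return names.map((n) => {
    const processor = processorRegistry[n];
    if (!processor) throw new Error(`Unknown processor: ${n}`);
    return processor;
  });
}
```

Both pipelines resolve `BasicItemInfo` to the same function, which mirrors how one processor class can appear in several pipeline definitions.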

Over time, you can imagine building a large number of custom processors that add details to a chat context, allowing a prompt to do whatever you can think of. One thing to keep in mind as you create them is to be as succinct as possible: GPT APIs charge by the token, so only include details that are really needed.
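One way to keep context lean is to estimate its size before sending it. The sketch below uses OpenAI’s published rule of thumb that one token is roughly four characters of English text; it is an approximation only, and a real tokenizer such as tiktoken gives exact counts. The `trimToBudget` helper is a hypothetical addition, not part of the module.

```typescript
// Rough heuristic: about 4 characters of common English text per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

// Keeps context statements in pipeline order until the estimated budget is used.
// It stops at the first statement that does not fit, so earlier processors
// (configured first, presumably most important) always take priority.
function trimToBudget(statements: string[], maxTokens: number): string[] {
  const kept: string[] = [];
  let used = 0;
  for (const statement of statements) {
    const cost = estimateTokens(statement);
    if (used + cost > maxTokens) break;
    kept.push(statement);
    used += cost;
  }
  return kept;
}
```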

Configuring the Pipelines and Prompts

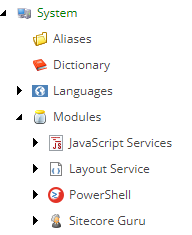

Now that we can build a Sitecore context for prompts, we need a mechanism to invoke those pipelines and to supply configuration to the processors that require it. To support this, I created a configuration area under “System > Modules” for my new module, which I am calling “Sitecore Guru” (notice the Albert Einstein icon I used).

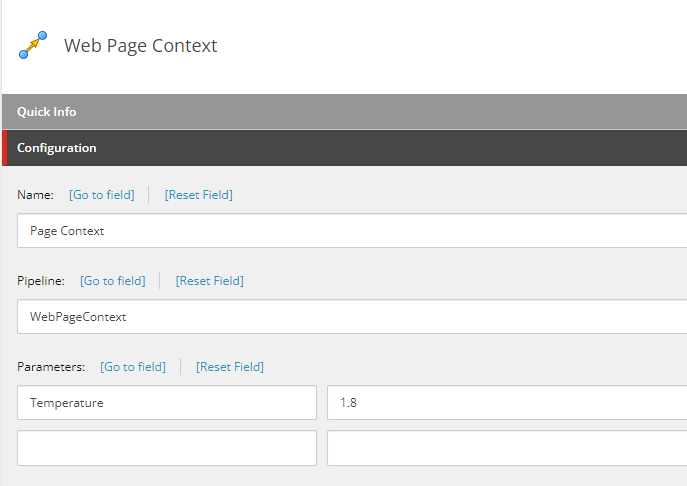

Under it, I have separate folders for Pipeline Configurations and Prompts, which lets you pair the same pipeline with different prompts depending on your requirements. Here is the configuration for the “Page Context” pipeline described earlier:

Note that the pipeline name must match the name that has been added to the Sitecore config file. In this example, Temperature is passed as a parameter. The API that calls ChatGPT uses it as the temperature setting, which controls how random, or “creative,” the responses are. The scale is 0 to 2, with 2 being the most creative and 0 the least.

So if you are generating creative content, set it closer to 2, but if you are generating code, a value closer to 0 works better. Keep in mind that more creative responses also increase the chance of hallucinations.
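For illustration, here is a minimal sketch of how context statements, the prompt and the temperature could be combined into an OpenAI Chat Completions request body (`model`, `messages`, `temperature`). It assumes the temperature is read from the configuration item as a string, since Sitecore stores field values as text; `buildChatRequest` is a hypothetical helper, not the module’s code.

```typescript
type ChatRole = "system" | "user" | "assistant";

interface ChatMessage {
  role: ChatRole;
  content: string;
}

interface ChatCompletionRequest {
  model: string;
  messages: ChatMessage[];
  temperature: number;
}

// Builds a request body; the temperature setting is parsed and range-checked
// against the API's allowed 0 to 2 range before anything is sent.
function buildChatRequest(
  model: string,
  contextStatements: string[],
  prompt: string,
  temperatureSetting: string,
): ChatCompletionRequest {
  const temperature = Number.parseFloat(temperatureSetting);
  if (Number.isNaN(temperature) || temperature < 0 || temperature > 2) {
    throw new RangeError(`Temperature must be between 0 and 2, got "${temperatureSetting}"`);
  }
  return {
    model,
    temperature,
    messages: [
      // Sitecore context first, as system messages...
      ...contextStatements.map((content): ChatMessage => ({ role: "system", content })),
      // ...followed by the configured prompt.
      { role: "user", content: prompt },
    ],
  };
}
```

Serialized with `JSON.stringify`, this is the shape POSTed to `https://api.openai.com/v1/chat/completions`; Azure OpenAI accepts the same messages and temperature but identifies the model by deployment in the URL.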

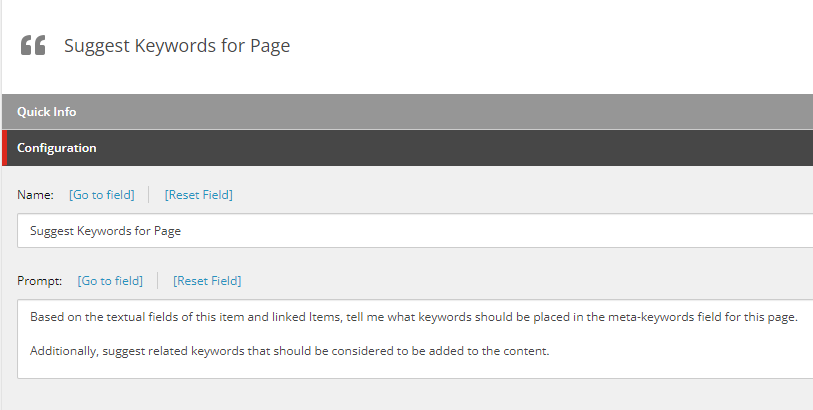

Each prompt is simply an item with a Multi-Line Text Prompt field that defines the initial text used to open the conversation. Here is a prompt I wrote to work with the web page context to get a list of keywords and related keywords based on the web page’s content fields.

With these configurable items in place, I built a custom Web API that takes the IDs of the item, the prompt and the pipeline, calls ChatGPT and returns the results. Now I just needed a user interface to call the API and a way to open it from the Content Editor.
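The flow behind that API can be outlined as follows. This is a hedged TypeScript sketch, not the actual C# controller: the `GuruServices` interface and its three methods are hypothetical stand-ins for the Sitecore item lookups, the context pipeline and the OpenAI or Azure OpenAI client, injected here so the orchestration is easy to follow.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Hypothetical service boundary for the custom Web API.
interface GuruServices {
  // Reads the Prompt field from the prompt item.
  getPromptText(promptId: string): string;
  // Reads the pipeline configuration item and runs that pipeline for the item.
  runContextPipeline(pipelineId: string, itemId: string): { statements: string[]; temperature: number };
  // Sends the conversation to the chat model and resolves with its reply.
  complete(messages: ChatMessage[], temperature: number): Promise<string>;
}

// Item ID + prompt ID + pipeline ID in, model reply out.
async function handleGuruRequest(
  services: GuruServices,
  itemId: string,
  promptId: string,
  pipelineId: string,
): Promise<string> {
  const { statements, temperature } = services.runContextPipeline(pipelineId, itemId);
  const messages: ChatMessage[] = [
    ...statements.map((content): ChatMessage => ({ role: "system", content })),
    { role: "user", content: services.getPromptText(promptId) },
  ];
  return services.complete(messages, temperature);
}
```

Keeping prompts and pipelines as separate inputs is what allows the same pipeline to be paired with different prompts.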

Creating a Prompt User Interface

For the integrated prompt interface, I found plenty of examples online of how to create a ChatGPT-like interface. In the end, I took most of the markup, CSS and JavaScript from a CodingNepal article titled “How to create your own ChatGPT in HTML CSS and JavaScript.” Using this as a base, I modified the JavaScript to call my custom controller API, swapped out the images for Sitecore-related icons, and adjusted the code that persists the chat history between sessions.

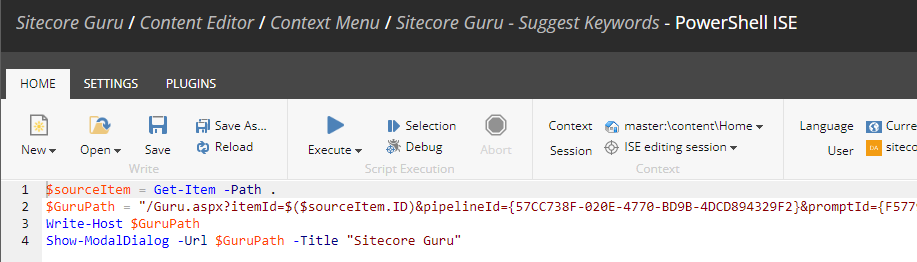

I put the main markup into a new ASPX page called “Guru.aspx” and included it in my solution so it is deployed to the site folder. To open this page with the right context (the item ID, pipeline ID and prompt ID), I used Sitecore PowerShell Extensions to add a new option to the item’s right-click “Scripts” menu.

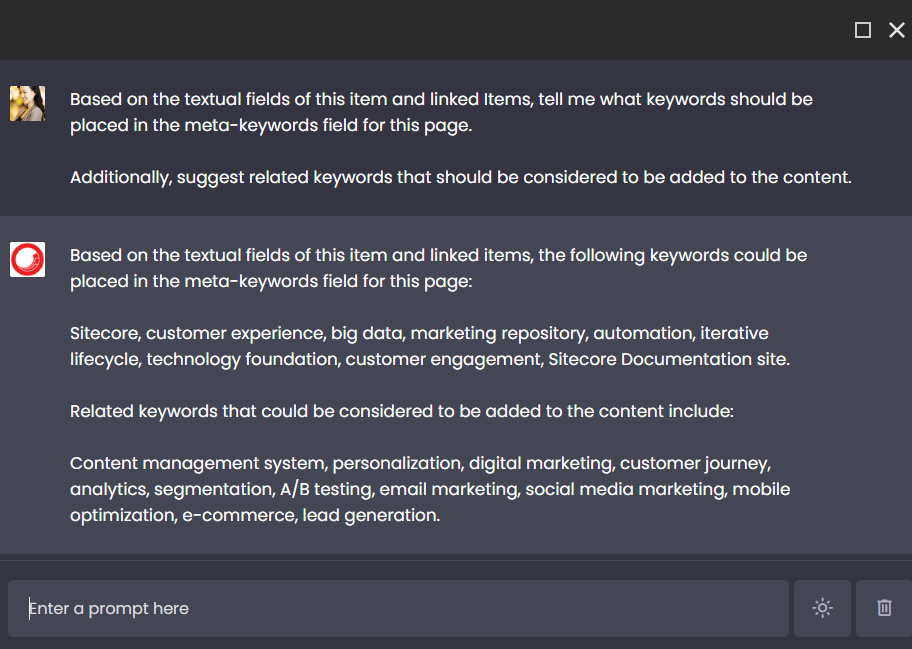
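The menu script only has to hand the dialog a URL that carries the three IDs. Below is a small sketch of building and reading such a URL; the `/Guru.aspx` path and the query-string names `itemId`, `pipelineId` and `promptId` are assumptions for illustration, not the module’s actual contract.

```typescript
// Builds the page URL; Sitecore IDs contain braces, so values are percent-encoded.
function buildGuruUrl(
  itemId: string,
  pipelineId: string,
  promptId: string,
  pagePath = "/Guru.aspx",
): string {
  const params: [string, string][] = [
    ["itemId", itemId],
    ["pipelineId", pipelineId],
    ["promptId", promptId],
  ];
  const query = params.map(([key, value]) => `${key}=${encodeURIComponent(value)}`).join("&");
  return `${pagePath}?${query}`;
}

// On the page side, reads the IDs back out of a query string such as location.search.
function readGuruParams(search: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const pair of search.replace(/^\?/, "").split("&")) {
    if (pair === "") continue;
    const [key, value = ""] = pair.split("=");
    result[decodeURIComponent(key)] = decodeURIComponent(value);
  }
  return result;
}
```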

With this approach, we can create a separate menu option for each prompt we want to make available. The Show-ModalDialog command from Sitecore PowerShell Extensions loads our custom page in a frame on top of the Content Editor. Here is the UX after invoking the keywords prompt with the Web Page Context pipeline on the out-of-the-box Sitecore Home item:

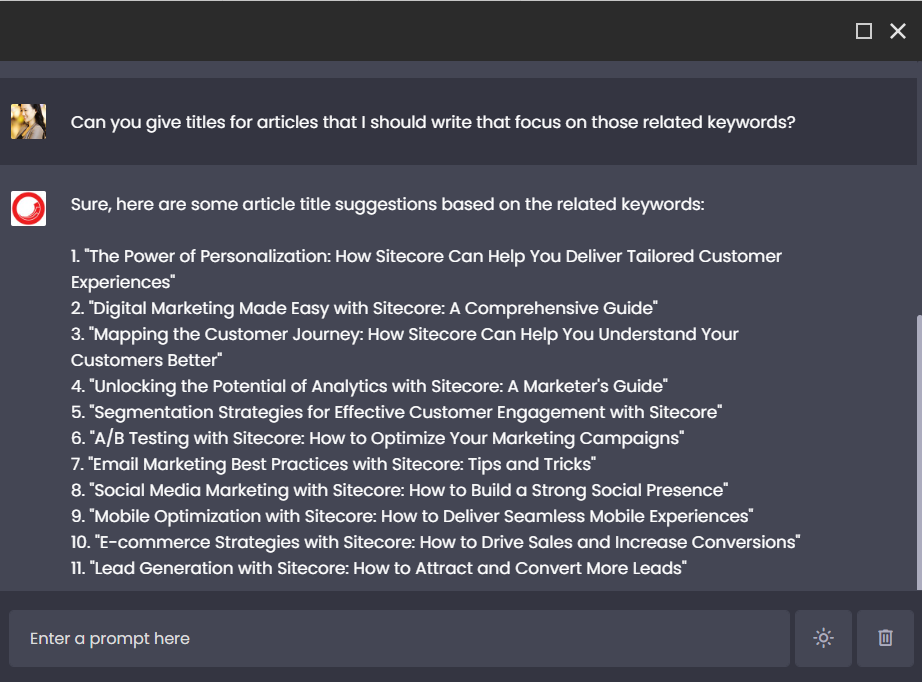

The API itself maintains a session, which allows the conversation to continue past the initial prompt. You can keep asking questions and get answers that are aware of the chat history. Here is an example of a follow-up question after getting the initial list of keywords:
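Because the Chat Completions API is stateless, “maintaining a session” in practice means storing the message history and resending it with each follow-up. A minimal in-memory sketch, assuming a session ID per chat window (the post does not say how sessions are keyed, and `ChatSessionStore` is a hypothetical name):

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// In-memory conversation store keyed by session ID. The full history,
// including the original Sitecore context, is resent on every call.
class ChatSessionStore {
  private readonly sessions = new Map<string, ChatMessage[]>();

  // Seeds a session with the context messages and the opening prompt.
  start(sessionId: string, initial: ChatMessage[]): void {
    this.sessions.set(sessionId, [...initial]);
  }

  // Appends a follow-up question and returns a copy of the messages to send.
  ask(sessionId: string, question: string): ChatMessage[] {
    const history = this.sessions.get(sessionId);
    if (!history) throw new Error(`No chat session ${sessionId}`);
    history.push({ role: "user", content: question });
    return [...history];
  }

  // Records the model's reply so later questions can refer to it;
  // replies for unknown sessions are ignored.
  recordReply(sessionId: string, reply: string): void {
    this.sessions.get(sessionId)?.push({ role: "assistant", content: reply });
  }
}
```

The trade-off is that every follow-up resends the full context, so token usage grows with the length of the conversation.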

Code Generation Applications

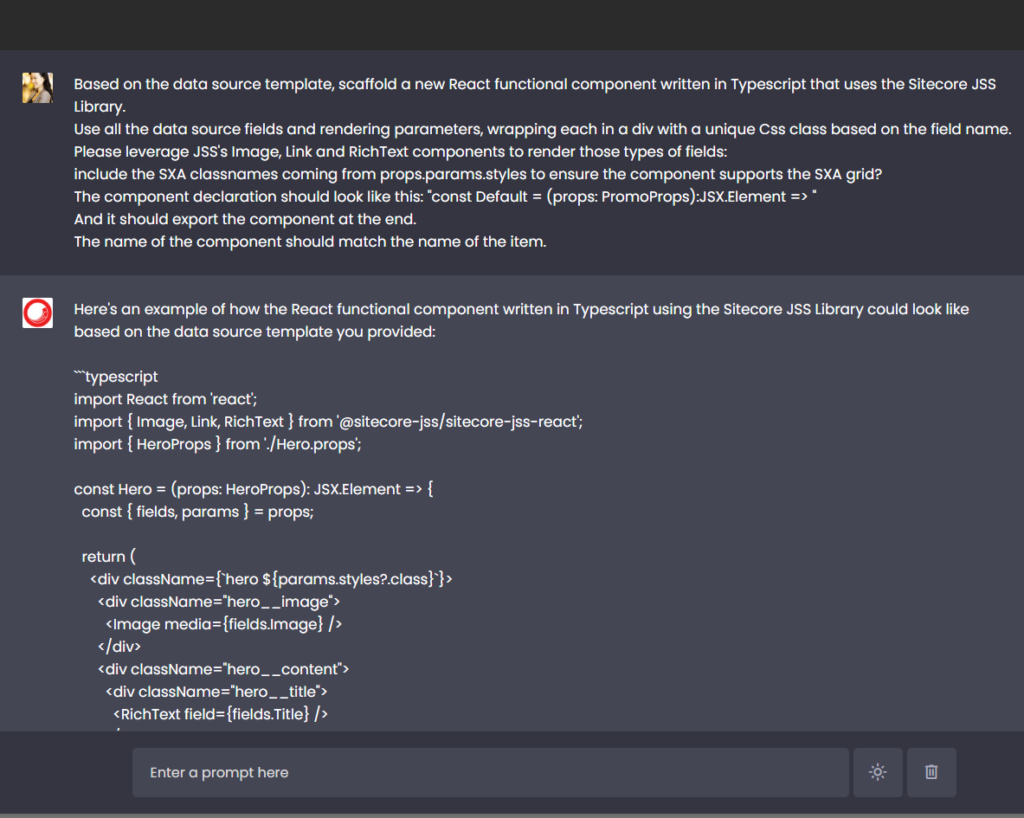

Besides providing context for content and marketing applications, the other major use case I wanted this integration to support was code generation. I figured that Sitecore usually has everything you need to know to generate a component: the rendering name, the data source template, the rendering parameters, and even the ability to look at existing MVC .cshtml files or decompile controller classes.

With this in mind, I built a pipeline that provides rendering configuration details and engineered a prompt to scaffold a TypeScript component.
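As a rough idea of what such a prompt might contain, here is a sketch that turns rendering details into a scaffold request. The `RenderingDetails` shape and the prompt wording are assumptions for illustration, not the prompt used in the POC.

```typescript
// Hypothetical shape of what a rendering context pipeline could gather.
interface RenderingDetails {
  renderingName: string;
  dataSourceFields: { name: string; type: string }[];
  renderingParameters: string[];
}

// Builds a compact, line-per-fact scaffold prompt from the rendering details.
function buildScaffoldPrompt(r: RenderingDetails): string {
  const fields = r.dataSourceFields.map((f) => `- ${f.name} (${f.type})`).join("\n") || "- (none)";
  const params = r.renderingParameters.length > 0 ? r.renderingParameters.join(", ") : "none";
  return [
    `Scaffold a TypeScript component named ${r.renderingName}.`,
    "Its data source template has these fields:",
    fields,
    `Rendering parameters: ${params}.`,
    "Return only the component code.",
  ].join("\n");
}
```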

While it technically works, I’m not happy with the quality of the output. I will continue to refine the prompt, but I also need to start building sample data to fine-tune the model on the code structure I expect. The documentation I’ve read says I need at least 10 good examples, but ideally 50, to get good results. This is something I’ll keep working on and tuning to see if it improves the generated output.
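Assuming OpenAI’s chat fine-tuning format (a JSONL file with one `{"messages": [...]}` object per line), the training examples could be assembled from the same context, prompt and a hand-written “ideal” component. The `CodeExample` type and `toFineTuneJsonl` helper are hypothetical; the 10-example floor matches OpenAI’s documented minimum.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// One training example: the context and prompt the model will see,
// plus the component code we would like it to produce.
interface CodeExample {
  context: string[];
  prompt: string;
  expectedCode: string;
}

const MIN_EXAMPLES = 10; // OpenAI's documented minimum for fine-tuning

// Serializes examples to JSONL; JSON.stringify escapes newlines inside the code,
// so each example stays on a single line.
function toFineTuneJsonl(examples: CodeExample[]): string {
  if (examples.length < MIN_EXAMPLES) {
    throw new Error(`Need at least ${MIN_EXAMPLES} examples, got ${examples.length}`);
  }
  return examples
    .map((ex) => {
      const messages: ChatMessage[] = [
        ...ex.context.map((content): ChatMessage => ({ role: "system", content })),
        { role: "user", content: ex.prompt },
        { role: "assistant", content: ex.expectedCode },
      ];
      return JSON.stringify({ messages });
    })
    .join("\n");
}
```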

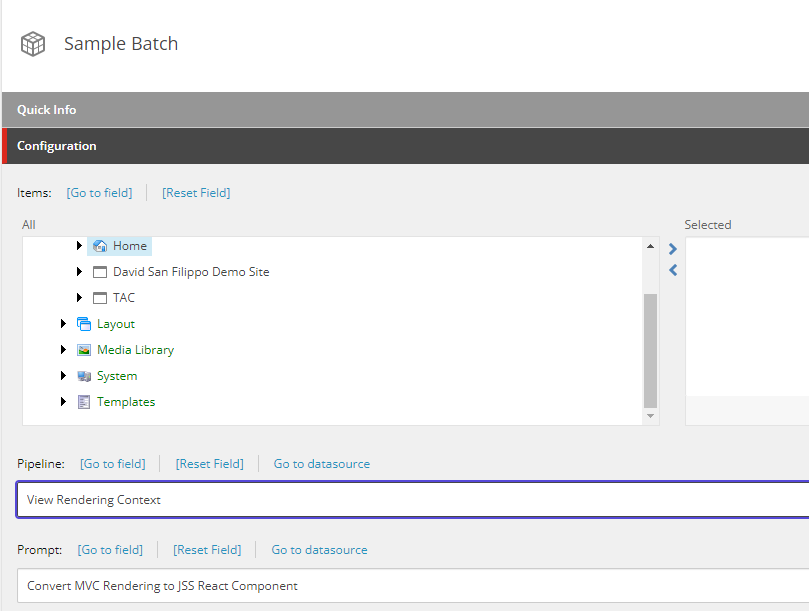

Ultimately, if it worked, I’d like to be able to define a batch configuration that lets you select the renderings you want to convert, automatically builds a context for each one, asks ChatGPT to generate the code, and then provides the results for use. I’ve gone as far as defining a template to configure it, but I need to improve the quality of the generated code before working on that part.

POC Demo Video

Here is a video walkthrough of the POC I created.

Wrapping Up & Next Steps

This is still very much a proof of concept. I’m hoping that with improved code generation, better prompt engineering and more context processors, this could be a very powerful add-on to Sitecore. Any feedback on this approach is welcome. If you want more information, reach out to me on LinkedIn or Twitter, or fill out our contact form.