Even though 98% of websites use JavaScript, many developers have difficulty optimizing them for search engines. This makes JS SEO skills crucial for modern web developers and marketers.

Whether you’re seeking a fresh perspective on SEO and JavaScript or aiming to build high-ranking websites, this guide is your essential knowledge base. We’ll explore why this combination is crucial for both search engines and users, how crawling and indexing work for JS platforms, and dive into JavaScript SEO best practices.

What is JavaScript?

JavaScript is a versatile programming language used primarily for client-side scripting in web browsers. Unlike Python, Java, and C++, it runs natively in every major browser, which makes it the standard choice for dynamic and interactive web content. It powers everything from animated graphics and interactive maps to real-time updates without page reloads.

What is JavaScript SEO?

JavaScript SEO is the practice of optimizing JavaScript-heavy websites so that search engines like Google can crawl, render, and index them. Techniques include giving every page a unique URL, implementing long-lived caching, and optimizing content for faster loading. The goal is to keep JavaScript from hiding content or links from search engines and to keep rendering fast enough for good search performance.

How Does Google Crawl and Index JavaScript?

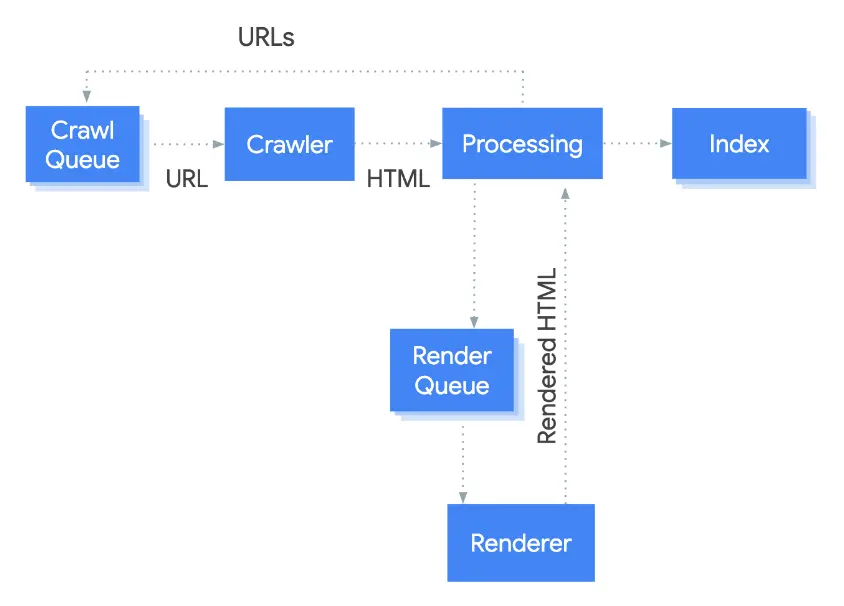

Google processes JavaScript in three main stages: crawling, rendering, and indexing.

Source: Google Search Central

- Client-Side Rendering (CSR): This increases content interactivity but may not be SEO-friendly by default.

- Server-Side Rendering (SSR): Rendering occurs on the server, which can improve SEO for static content.

- Dynamic Rendering (DR): Real-time code rendering, suitable for dynamic content updates.

This pipeline works well in theory, but success is not guaranteed in practice. A JavaScript site that isn't built with SEO in mind can prevent Google from effectively reading and indexing its pages.
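To make the difference concrete, here is a minimal TypeScript sketch of what a crawler receives in the initial HTML response under each approach. All names (`Product`, `csrShell`, `ssrPage`, `isKnownCrawler`, `respond`) are hypothetical, and the crawler detection uses a deliberately simplified user-agent pattern; a real site would rely on its framework's rendering support rather than hand-built strings.

```typescript
// Sketch: initial HTML under CSR, SSR, and dynamic rendering.
// All names here are hypothetical, for illustration only.

interface Product {
  name: string;
  price: number;
}

// Escape text before interpolating it into HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderProductList(products: Product[]): string {
  const items = products
    .map((p) => `<li>${escapeHtml(p.name)}: $${p.price.toFixed(2)}</li>`)
    .join("");
  return `<ul>${items}</ul>`;
}

// CSR: an empty shell; content appears only after bundle.js runs
// in the browser (or in Google's rendering stage).
function csrShell(title: string): string {
  return (
    `<!doctype html><html><head><title>${escapeHtml(title)}</title></head>` +
    `<body><div id="root"></div><script src="/bundle.js" defer></script></body></html>`
  );
}

// SSR: the content is already in the HTML, readable without running JS.
function ssrPage(title: string, products: Product[]): string {
  return (
    `<!doctype html><html><head><title>${escapeHtml(title)}</title></head>` +
    `<body><div id="root">${renderProductList(products)}</div>` +
    `<script src="/bundle.js" defer></script></body></html>`
  );
}

// Dynamic rendering: crawlers get prerendered HTML, users get the CSR shell.
// ASSUMPTION: this pattern is a simplified example, not a complete bot list.
function isKnownCrawler(userAgent: string): boolean {
  return /googlebot|bingbot/i.test(userAgent);
}

function respond(userAgent: string, title: string, products: Product[]): string {
  return isKnownCrawler(userAgent) ? ssrPage(title, products) : csrShell(title);
}
```

With a sample catalog, `ssrPage` returns HTML that already contains the product names, while `csrShell` contains only an empty `<div id="root">`, which is why CSR pages depend entirely on Google's rendering stage.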

Why is JavaScript Good for SEO and Users?

JavaScript frameworks like React, Angular, and Vue.js enable the creation of dynamic and interactive web pages, which can significantly enhance user experience and engagement. When properly optimized, these pages can rank well in search engine results pages (SERPs). Here are a few reasons why JavaScript is beneficial:

- Dynamic content: JavaScript allows for dynamic updates and interactive elements on web pages, which can keep users engaged.

- Efficient code: Modern frameworks and their build tools support techniques such as code splitting and minification that keep bundles lean, supporting fast load speeds and a better user experience.

- Mobile optimization: JavaScript frameworks support mobile optimization techniques such as responsive components and lazy loading, improving performance on mobile devices.

- Structured data integration: Frameworks like React and Angular make it straightforward to add schema markup, which helps search engines understand your content and can make pages eligible for rich results.
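As an illustration of the structured data point, the sketch below builds schema.org `Article` markup as JSON-LD, the format Google recommends for structured data. The `ArticleData` shape and the `buildArticleJsonLd` helper are hypothetical; in React or Angular you would emit the resulting tag through the framework's own head or template mechanism.

```typescript
// Sketch: schema.org Article markup serialized as JSON-LD.
// ArticleData and buildArticleJsonLd are hypothetical names.

interface ArticleData {
  headline: string;
  authorName: string;
  datePublished: string; // ISO 8601 date, e.g. "2024-05-01"
  url: string;
}

function buildArticleJsonLd(article: ArticleData): string {
  const data = {
    "@context": "https://schema.org",
    "@type": "Article",
    headline: article.headline,
    author: { "@type": "Person", name: article.authorName },
    datePublished: article.datePublished,
    mainEntityOfPage: article.url,
  };
  // Escape "<" so a value containing "</script>" cannot close the tag early;
  // JSON parsers read \u003c back as "<".
  const json = JSON.stringify(data).replace(/</g, "\\u003c");
  return `<script type="application/ld+json">${json}</script>`;
}
```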

How to Make Your JavaScript Content SEO-Friendly

JavaScript and SEO aren’t a perfect combination by default. However, with some effort, you can optimize your JavaScript content for search engines. Here are a few strategies:

Optimize JavaScript Loading

Speed up your website and optimize your JS code to improve user experience and your Core Web Vitals metrics:

- Reduce the size of your JS files without breaking page functionality, for example by minifying them and serving them with GZIP compression.

- Customize JS delivery. Load scripts only when they are needed and preload critical scripts.

- Eliminate unused code and reduce the size of the final bundle (use tree shaking to get rid of dead JS code).
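The delivery choices above can be sketched as a small helper that decides which tags each script gets. The three loading modes and the `buildScriptTags` function are assumptions made for illustration, not part of any framework's API; in practice, your bundler or framework typically generates these tags for you.

```typescript
// Sketch: choosing how each script is delivered.
// LoadMode, ScriptAsset, and buildScriptTags are hypothetical.

type LoadMode = "critical" | "deferred" | "on-demand";

interface ScriptAsset {
  src: string;
  mode: LoadMode;
}

function buildScriptTags(assets: ScriptAsset[]): string[] {
  const tags: string[] = [];
  for (const asset of assets) {
    if (asset.mode === "critical") {
      // Preload so the download starts early at high priority;
      // defer so execution does not block HTML parsing.
      tags.push(`<link rel="preload" href="${asset.src}" as="script">`);
      tags.push(`<script src="${asset.src}" defer></script>`);
    } else if (asset.mode === "deferred") {
      // Non-essential scripts run after parsing and never block it.
      tags.push(`<script src="${asset.src}" defer></script>`);
    }
    // "on-demand" scripts get no tag: the app would load them later,
    // e.g. with a dynamic import() when the user needs the feature.
  }
  return tags;
}
```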

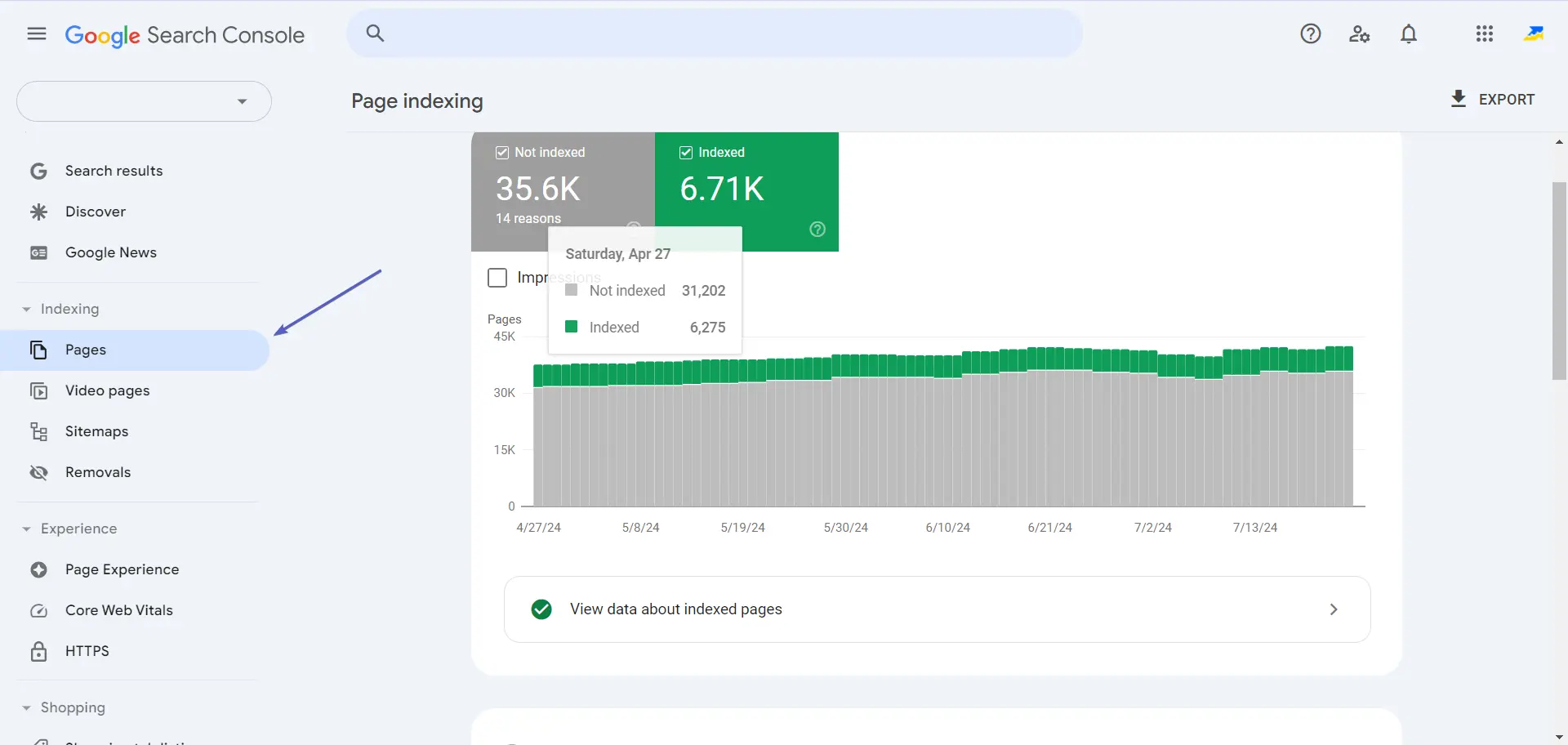

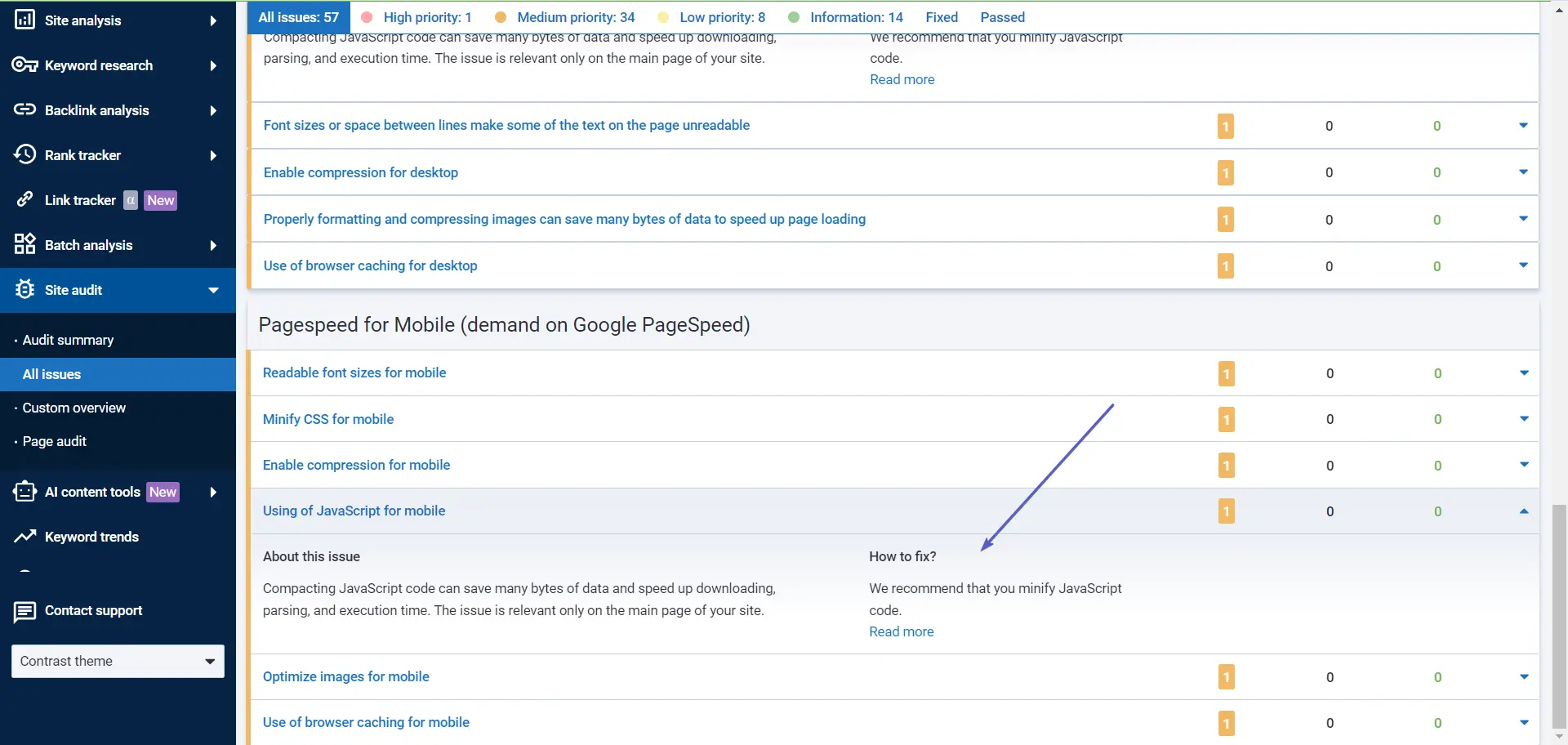

You can use Serpstat site audit to identify such growth points:

Now, our SEO platform can easily render JS websites, as well as J-SON LD markup.

Prioritize Rendering Critical Content

Optimizing the Critical Rendering Path (CRP) reduces the time needed for search engines to analyze and render pages. Key components include:

- Document Object Model (DOM)

- CSS Object Model (CSSOM)

- Render tree, which combines the DOM and CSSOM

Minify resources, compress files, and defer non-essential JavaScript to improve CRP efficiency.
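To find scripts worth deferring, one quick check is to list external scripts that block HTML parsing, meaning `<script src>` tags without `defer`, `async`, or `type="module"`. The `findRenderBlockingScripts` helper below is a hypothetical, regex-based sketch rather than a real HTML parser, so it can miss unusual markup; audit tools give a more reliable picture.

```typescript
// Sketch: flag external scripts that block HTML parsing.
// Regex-based approximation, not a full HTML parser.

function findRenderBlockingScripts(html: string): string[] {
  const blocking: string[] = [];
  const scriptTag = /<script\b([^>]*)>/gi;
  let match: RegExpExecArray | null;
  while ((match = scriptTag.exec(html)) !== null) {
    const attrs = match[1];
    // Match a standalone src attribute (not data-src).
    const src = /(?:^|\s)src\s*=\s*["']([^"']+)["']/i.exec(attrs);
    if (!src) continue; // inline scripts are out of scope for this check
    // defer/async must be standalone attributes, not text inside a URL.
    const hasDeferOrAsync = /(?:^|\s)(defer|async)(?=\s|=|$)/i.test(attrs);
    // Module scripts are deferred by default.
    const isModule = /(?:^|\s)type\s*=\s*["']module["']/i.test(attrs);
    if (!hasDeferOrAsync && !isModule) blocking.push(src[1]);
  }
  return blocking;
}
```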

Implement JavaScript SEO Techniques

Understanding how search engines crawl and render JavaScript pages helps you create a well-structured site. Use tools like Serpstat, Netpeak Spider, or Screaming Frog to audit your JavaScript pages and identify issues early.

JavaScript SEO Issues

To ensure your JavaScript content is SEO-friendly, regularly run technical audits to spot and fix potential problems:

- Blocked resources. Ensure CSS, JS, and other important resources are not blocked in the robots.txt file.

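- Proper links. Use standard `<a href>` links so that Googlebot can discover and crawl your pages.

- Avoid hash URLs. Google ignores everything after the # in a URL, so hash-based routes such as example.com/#/products are not crawled as separate pages. Use path-based URLs built with the History API instead.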

- XML sitemap. Include all important sections in your XML sitemap.

- Server-side redirects: Use server-side HTTP redirects (301 or 302) instead of JavaScript redirects.
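The link and hash URL checks can be sketched as a small classifier. `classifyLink` and its verdict labels are hypothetical; it relies on the standard `URL` class (available in browsers and Node.js) to resolve relative links and drop the fragment, mirroring how Google ignores everything after `#`.

```typescript
// Sketch: would Googlebot treat this link as a path to a separate page?
// classifyLink and LinkVerdict are hypothetical names.

type LinkVerdict = "crawlable" | "missing-href" | "javascript-url" | "fragment-only";

function classifyLink(href: string | null, pageUrl: string): LinkVerdict {
  // e.g. <a onclick="go('/shoes')"> with no href: nothing to follow.
  if (href === null || href.trim() === "") return "missing-href";
  // javascript: URLs do not point to a crawlable page.
  if (/^\s*javascript:/i.test(href)) return "javascript-url";

  // Resolve relative links (throws on malformed URLs).
  const target = new URL(href, pageUrl);
  const page = new URL(pageUrl);
  // Drop the #fragment, since Google ignores it.
  target.hash = "";
  page.hash = "";
  // Fine for in-page anchors, but a hash route like "#/products"
  // does not lead to a separate crawlable URL.
  if (target.href === page.href && href.includes("#")) return "fragment-only";
  return "crawlable";
}
```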

JavaScript errors, and content that appears only after a user interaction such as a click or scroll, can leave pages or content that Googlebot cannot crawl, because Googlebot does not interact with pages the way users do. The fix is to make important content and metadata available without user input: optimize meta tags and use static generation, server-side rendering, and structured data. A logical page structure and regular JavaScript debugging also help.
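Static generation can be sketched as a build step that renders each route to a complete HTML document with its title, meta description, and canonical URL already in place, so nothing depends on user interaction. `PageData`, `renderStaticPage`, and `generateSite` are hypothetical names; frameworks that support static site generation provide their own build-time APIs for this.

```typescript
// Sketch: render each route to full HTML at build time.
// PageData, renderStaticPage, and generateSite are hypothetical.

interface PageData {
  path: string; // e.g. "/guides/js-seo"
  title: string;
  description: string;
  bodyHtml: string; // trusted, already-rendered main content
}

function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

function renderStaticPage(page: PageData, origin: string): string {
  const canonical = new URL(page.path, origin).href;
  return [
    "<!doctype html>",
    '<html lang="en">',
    "<head>",
    `<title>${escapeHtml(page.title)}</title>`,
    `<meta name="description" content="${escapeHtml(page.description)}">`,
    `<link rel="canonical" href="${escapeHtml(canonical)}">`,
    "</head>",
    `<body>${page.bodyHtml}</body>`,
    "</html>",
  ].join("\n");
}

// Returns output file path -> HTML, ready to be written to disk.
function generateSite(pages: PageData[], origin: string): Map<string, string> {
  const files = new Map<string, string>();
  for (const page of pages) {
    const file =
      page.path === "/" ? "index.html" : `${page.path.replace(/^\//, "")}/index.html`;
    files.set(file, renderStaticPage(page, origin));
  }
  return files;
}
```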

Best Practices in JS SEO

Now that you understand how Google handles JavaScript websites, it’s time to apply best practices to improve the SEO of your dynamic content:

- Include essential content in HTML: Ensure the initial HTML response includes content like metadata and titles.

- Use structured data: Enhance the visibility and understanding of your content by search engines.

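- Use content hashes: Add a content hash to JavaScript and CSS file names (for example, main.2bb85551.js). Googlebot caches resources aggressively, and fingerprinted file names ensure it fetches the latest version after each update.

- Create unique URLs: Give each piece of content its own URL. This helps bots understand and navigate your site structure, ensuring all content is discovered and indexed.

- Respect canonical links: Avoid overwriting canonical links, rel="nofollow" attributes, and meta robots directives with JavaScript.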

- Implement lazy loading: Use lazy loading and code splitting to optimize JavaScript delivery.

- Regularly audit your site: Use tools like Serpstat Audit to monitor your website's health at least once a week.
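Content hashing can be sketched in a few lines: the file name embeds a hash of the file's contents, so every change produces a new URL. The `fnv1a32` and `fingerprint` helpers are hypothetical, and FNV-1a is used only to keep the example dependency-free; build tools generally use their own, stronger hash functions.

```typescript
// Sketch: content-hashed ("fingerprinted") file names.
// fnv1a32 and fingerprint are hypothetical helpers.

// 32-bit FNV-1a over UTF-16 code units (sufficient for a fingerprint).
function fnv1a32(text: string): number {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0; // multiply by FNV prime mod 2^32
  }
  return hash >>> 0;
}

// "main.js" + contents -> "main.<8 hex chars>.js"
function fingerprint(fileName: string, contents: string): string {
  const hash = fnv1a32(contents).toString(16).padStart(8, "0");
  const dot = fileName.lastIndexOf(".");
  return dot === -1
    ? `${fileName}.${hash}`
    : `${fileName.slice(0, dot)}.${hash}${fileName.slice(dot)}`;
}
```

Because a fingerprinted URL never changes its content, it can be served with a long-lived header such as `Cache-Control: public, max-age=31536000, immutable`, which also covers the long-lived caching technique mentioned earlier.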

Conclusion

Optimizing JavaScript for SEO requires a blend of technical knowledge and best practices. By following the strategies outlined above, you can enhance the performance, crawlability, and indexability of your JavaScript-heavy websites, helping them rank well in SERPs and provide a superior user experience.

FAQ

JavaScript websites can be SEO-friendly, but they require proper setup to ensure Googlebot can crawl and index their content effectively.

Google can crawl and index content generated by JavaScript-based frameworks like React, Angular, and Vue.js. However, sites built with these frameworks often need specific SEO strategies, such as server-side rendering (SSR) or static site generation (SSG), to ensure search engines can effectively process and index the content.

Rendering in SEO involves processing HTML, CSS, and JavaScript to create a visual representation of the page as a user would see it. This is crucial for search engines to understand and index dynamic content accurately.

Googlebot uses rendering to ensure it captures the full content and layout of a page, including any dynamic elements loaded via JavaScript. This rendered content is then used in the indexing process to determine the relevance and quality of the page’s content, impacting its ranking in SERPs.

Improving the SEO of JavaScript content involves several key practices:

- Implement server-side rendering (SSR) or dynamic rendering to make content accessible to search engines.

- Optimize JavaScript loading by minimizing file sizes and eliminating unused code.

- Ensure proper use of HTML tags, metadata, and structured data to enhance search engine understanding.

- Regularly audit your site with tools like Serpstat, Google Search Console, and Screaming Frog to identify and fix any issues.