For a few years now, Google has made its own editorial decisions about how it represents sites in its results. It has been rewriting titles and even including sites that have been blocked from crawling. Google's example of this was a Department of Transportation site that the site owner may have accidentally blocked using the robots.txt file. Because Google judged this to be a mistake by the site owner, it still included the site, but with a modified listing that included a note to the user about why the site was blocked.

Over the past 5–10 years, I have seen Google rewrite page titles more and more often. In some cases, I think it does improve the user experience, especially for pages whose titles are generated automatically, such as e-commerce product pages and content from database-driven libraries (for example, press releases). These sites often populate the title tag with a value taken directly from the database, which may not be ideal because the value may not contain the keywords people are searching for, or it may be too long. Several odd issues can arise from populating title tags and headings straight from the database; I will dive into these issues later in this blog post. First, I want to go through the mid-August title update and discuss how you can identify pages that require further investigation.

Google Title and H1 Display Update

In mid-August of this year, Google adjusted the way it displays page titles in its search results. Essentially, Google determined that title tags had become a bit spammy and not very valuable to users, so it decided to use the H1 tag more often, because the H1 is usually written for people to read.

Since this change went live, some site owners have found that when the title and H1 tags differed, and the target keywords or call to action appeared only in the title, the click-through rate (CTR) from the search results declined, sometimes by more than 30%. A decline like that can be a major issue for sites that rely heavily on organic traffic and can significantly affect the site's revenue.

Gather and Analyze Data from Google Search Console

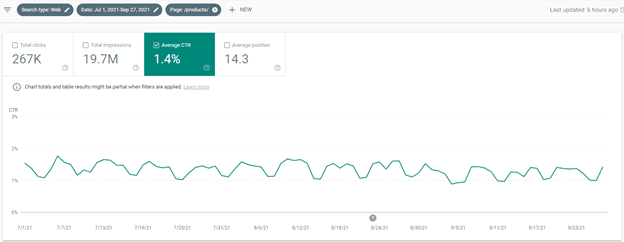

If you are curious about how your site might be affected, you can use Google Search Console to check the CTR of an individual URL or of specific URL groups. Your homepage is unlikely to have changed significantly for branded queries, so use a Query filter to exclude your brand terms, then narrow the results further by adding a Page filter for specific URL patterns. From what we at Vizion have seen so far, the deep pages on a site are the most likely to have been affected.
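If you have many URL groups to check, the same filters can be applied through the Search Console API's Search Analytics `query` method instead of the web interface. The sketch below only builds the request body. The brand term `acme`, the `/products/` pattern, and the dates are placeholder assumptions. Actually sending the request (for example, through Google's API client with `searchanalytics().query(siteUrl=..., body=...)`) requires authentication that is not shown.

```python
from datetime import date


def build_search_analytics_body(start: date, end: date,
                                brand_terms: list[str],
                                url_substring: str,
                                row_limit: int = 25000) -> dict:
    """Build a Search Analytics query body that excludes branded queries
    and keeps only pages whose URL contains url_substring.

    brand_terms and url_substring are hypothetical inputs; replace them
    with your own brand names and URL pattern.
    """
    # One "notContains" filter per brand term removes branded queries.
    filters = [
        {"dimension": "query", "operator": "notContains", "expression": term}
        for term in brand_terms
    ]
    # Restrict the results to one URL group, such as deep product pages.
    filters.append(
        {"dimension": "page", "operator": "contains", "expression": url_substring}
    )
    return {
        "startDate": start.isoformat(),
        "endDate": end.isoformat(),
        "dimensions": ["page"],
        # Filters inside a group are combined with AND.
        "dimensionFilterGroups": [{"groupType": "and", "filters": filters}],
        "rowLimit": row_limit,
    }


body = build_search_analytics_body(
    date(2021, 8, 1), date(2021, 8, 7),  # example dates only
    brand_terms=["acme"],                # hypothetical brand term
    url_substring="/products/",          # hypothetical URL pattern
)
print(body["dimensionFilterGroups"][0]["filters"])
```

Running the same body with a second date range (the first week of September) gives you the two data sets used in the comparison.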

These results show the overall trend and help us understand what else to look for. If we want to quantify a specific change and troubleshoot it further, we can download the data for the first week of August and the first week of September and put both sets of data into a spreadsheet.

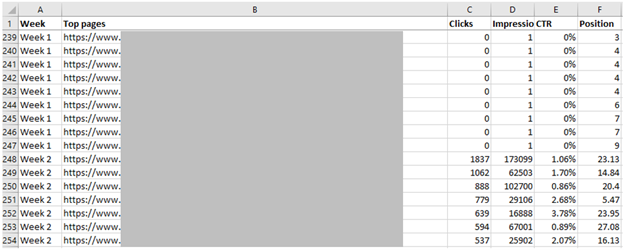

Create a new column named “Week” and populate it with “Week 1” for each row of URL data from the first week of August. Then paste the data from the first week of September below it and populate the “Week” column with “Week 2” for each of those rows. This allows us to create a pivot table that groups the URLs by week.

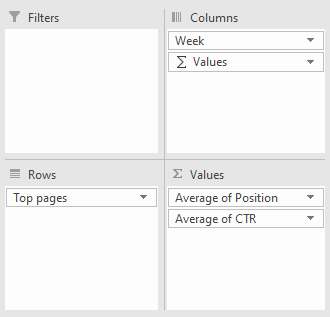

Select “Top pages” for rows and “Week” for columns, and include “Average Position” and “Average CTR” for values:
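If you would rather script the spreadsheet steps, the “Week” labels and the pivot can be sketched with Python's standard library. This assumes each export is a list of rows keyed by the “Top pages”, “CTR”, and “Position” headers of a Search Console Pages export, with CTR formatted as a percentage string. The URLs and numbers are made up for illustration.

```python
from collections import defaultdict
from statistics import mean


def pct(value: str) -> float:
    """Convert a Search Console CTR string such as '3.5%' to a float (3.5)."""
    return float(value.rstrip("%"))


def label_rows(rows: list[dict], week: str) -> list[dict]:
    """Add a 'Week' column to every row, as in the manual spreadsheet step."""
    return [{**row, "Week": week} for row in rows]


def pivot(rows: list[dict]) -> dict:
    """Group by page (rows) and week (columns), averaging Position and CTR."""
    cells = defaultdict(lambda: defaultdict(lambda: {"Position": [], "CTR": []}))
    for row in rows:
        cell = cells[row["Top pages"]][row["Week"]]
        cell["Position"].append(float(row["Position"]))
        cell["CTR"].append(pct(row["CTR"]))
    return {
        page: {
            week: {metric: mean(values) for metric, values in metrics.items()}
            for week, metrics in weeks.items()
        }
        for page, weeks in cells.items()
    }


# Hypothetical exports for the first week of August and of September.
august = [{"Top pages": "https://example.com/p/1", "CTR": "4.0%", "Position": "6.2"}]
september = [{"Top pages": "https://example.com/p/1", "CTR": "2.5%", "Position": "5.1"}]

table = pivot(label_rows(august, "Week 1") + label_rows(september, "Week 2"))
print(table["https://example.com/p/1"])
```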

Now, in the pivot, copy columns A–E and paste them as values into a new tab. This makes the data easier to work with, free of the pivot table's sometimes-odd quirks and restrictions.

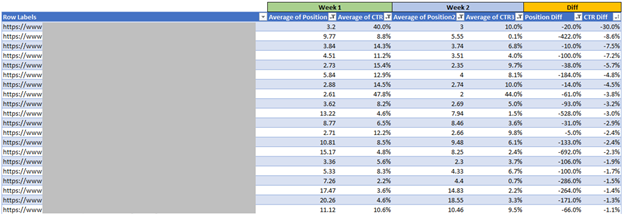

Next, add columns to calculate the difference between the “Position” and “CTR” columns, remove empty rows, sort by smallest CTR, and filter the new “Position Diff” column with values less than 0. Your resulting table should look something like this:
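In script form, the difference columns, the Position Diff filter, and the CTR sort might look like the following sketch. It assumes the pivot is a nested dictionary of page → week → averaged metrics (the sample values are hypothetical). Each difference is Week 2 minus Week 1, so a negative Position Diff means the page moved up.

```python
def flag_pages(table: dict) -> list[tuple[str, float, float]]:
    """Return (page, position_diff, ctr_diff) for pages whose average
    position improved between Week 1 and Week 2, largest CTR drop first."""
    flagged = []
    for page, weeks in table.items():
        # Skip pages missing from either week (the "empty rows" in the sheet).
        if "Week 1" not in weeks or "Week 2" not in weeks:
            continue
        position_diff = weeks["Week 2"]["Position"] - weeks["Week 1"]["Position"]
        ctr_diff = weeks["Week 2"]["CTR"] - weeks["Week 1"]["CTR"]
        # A lower position number means a higher ranking.
        if position_diff < 0:
            flagged.append((page, round(position_diff, 2), round(ctr_diff, 2)))
    # Smallest (most negative) CTR difference first.
    return sorted(flagged, key=lambda item: item[2])


table = {  # hypothetical pivot output
    "/p/1": {"Week 1": {"Position": 6.2, "CTR": 4.0}, "Week 2": {"Position": 5.1, "CTR": 2.5}},
    "/p/2": {"Week 1": {"Position": 8.0, "CTR": 1.0}, "Week 2": {"Position": 9.5, "CTR": 0.8}},
    "/p/3": {"Week 1": {"Position": 3.0, "CTR": 5.0}, "Week 2": {"Position": 2.0, "CTR": 6.0}},
    "/p/4": {"Week 2": {"Position": 4.0, "CTR": 3.0}},
}
print(flag_pages(table))
```

Here /p/1 rises to the top: it gained about one position but lost 1.5 points of CTR, which is exactly the pattern worth investigating.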

This table highlights pages whose average position improved (a lower position number means a higher ranking) but whose CTR decreased. These are the pages we want to investigate further, because CTR normally rises as a page moves up in the results.

Now you can start to explore patterns in the URLs to determine whether a specific product line or template needs to be further evaluated.
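One quick way to surface those patterns is to count the flagged URLs by their first folder. This assumes your product lines or templates map to top-level URL folders, which will not be true for every site. The URLs below are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def section_of(url: str) -> str:
    """Return the first path segment of a URL ('/shoes/x' -> 'shoes'),
    or '(root)' for the homepage."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return segments[0] if segments else "(root)"


flagged_urls = [  # hypothetical output of the filtering step
    "https://example.com/shoes/trail-runner-blk",
    "https://example.com/shoes/road-racer-wht",
    "https://example.com/bags/day-pack",
]
counts = Counter(section_of(u) for u in flagged_urls)
print(counts.most_common())
```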

This is not a perfect process, because a single URL can rank for several queries, and Google may display a different title for the same URL depending on the query. If you subscribe to a rank-monitoring tool that captures a screenshot of the SERP for each tracked keyword, you can use those screenshots to determine whether a Google title rewrite may be a contributing factor.

Unfortunately, at the time of writing this blog post, the tools I have access to do not allow for bulk downloads of the SERP output, so it is difficult to scale this process beyond checking individual URLs; however, once you have checked a few, you will start to notice patterns quickly.

Optimizing the Title and H1 Tags

The right word order, and whether to include the brand, will vary depending on the brands and products you sell and how people search for them. Best practices for crafting title and heading tags usually come from knowledge of your audience.

For example, a B2C site may need to include color or size dimensions, whereas a B2B site may need to include part numbers or SKUs.

Often, the challenges we face in optimizing title and heading tags are found not in the handcrafted values for blog posts and unique content but in large product catalogs and e-commerce sites.

When we assess how to semi-automate or fully automate the optimization of these values, there are generally four phases, ranging from no optimization to advanced ETL (extract, transform, load), which adds complex preprocessing and normalization of the data to ensure titles and headings are optimized:

- No optimization

- Template substitution

- ETL

- Advanced ETL

Phase 1 – No Optimization

As I mentioned at the beginning of this post, sites often take a value from the database and place it in the title tag or H1 tag with few or no data validation or substitution rules.

This can be problematic because the data source that populates the database now controls your title tags. If the format changes, or if business rules change how a product or document name is structured, the title tags for all of those pages will change at the next database update.

Tip: If you are doing SEO for a database-driven website, ensure you get notified when the database is updated so you can look out for these types of changes.
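If automatic notifications are not available, a rough fallback is to export the titles before and after each database update and compare the two snapshots. Here is a minimal sketch, assuming you can export a mapping of SKU (or URL) to title. The SKUs and titles are hypothetical.

```python
def changed_titles(before: dict[str, str], after: dict[str, str]) -> dict[str, tuple]:
    """Compare two snapshots mapping SKU (or URL) -> title and return the
    entries whose title changed, was added (old is None), or was removed
    (new is None)."""
    changes = {}
    for key in before.keys() | after.keys():
        old, new = before.get(key), after.get(key)
        if old != new:
            changes[key] = (old, new)
    return changes


before = {"sku-100": "Trail Runner BLK 10", "sku-200": "Day Pack GR"}
after = {"sku-100": "TR-100 Trail Runner BLK 10", "sku-200": "Day Pack GR"}
print(changed_titles(before, after))
```

A sudden spike in changed titles after an update is a strong hint that the upstream naming format changed.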

Phase 2 – Template Substitution

The next phase of optimizing title tags is to add logic to the page template that removes certain values and substitutes known value pairs.

For example, you may have an e-commerce site that sells products from a variety of brands. Some product names will contain brand names, whereas others will not. The product name may also include a product ID, model or part number, model year, dimensions, colors, or abbreviations that need to be moved or replaced.
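The following is a minimal sketch of this kind of template logic. It strips a part number from the raw product name and adds the brand when the name lacks it. The part-number pattern, the brand, and the product names are hypothetical assumptions. Real rules depend on your catalog, and a B2B site might move the part number to the end of the title instead of removing it.

```python
import re

# Hypothetical part-number format: two or more capital letters, a hyphen, digits.
PART_NUMBER = re.compile(r"\b[A-Z]{2,}-\d+\b")


def web_ready_name(name: str, brand: str) -> str:
    """Remove a part number from a raw product name and prepend the brand
    if the name does not already contain it."""
    cleaned = PART_NUMBER.sub("", name)
    cleaned = " ".join(cleaned.split())  # collapse leftover whitespace
    if brand.lower() not in cleaned.lower():
        cleaned = f"{brand} {cleaned}"
    return cleaned


print(web_ready_name("TR-100 Trail Runner Shoe", "Acme"))
print(web_ready_name("Acme Day Pack AC-2201", "Acme"))
```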

I often see abbreviations like “GR,” “BL,” “BLK,” and “WHT” used to represent colors. Unfortunately, we cannot be sure whether Google will recognize all these abbreviations and treat them as synonyms. For example, GR could signify gray or green. When searching for these abbreviations, Google shows synonyms for BLK and WHT, but it does not understand others, like PK, GR, and BL, likely because some of these abbreviations may be used differently among various brands or ******.
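One way to handle ambiguous codes in the template is to keep a small default table for unambiguous abbreviations, let brand-specific tables decide the ambiguous ones, and leave unknown codes untouched. The tables, brands, and mappings below are hypothetical examples, not a recommended list.

```python
# Hypothetical tables. Unambiguous codes live in DEFAULTS; ambiguous ones
# such as "GR" (gray or green) are expanded only when a brand defines them.
DEFAULTS = {"BLK": "Black", "WHT": "White"}
BRAND_OVERRIDES = {
    "Acme": {"GR": "Gray", "BL": "Blue"},
    "Zenith": {"GR": "Green", "PK": "Pink"},
}


def expand_abbreviations(name: str, brand: str) -> str:
    """Replace whole whitespace-separated tokens using the brand's table
    first, then the defaults; tokens found in neither are left unchanged."""
    table = {**DEFAULTS, **BRAND_OVERRIDES.get(brand, {})}
    return " ".join(table.get(token, token) for token in name.split())


print(expand_abbreviations("Trail Runner GR", "Acme"))    # Trail Runner Gray
print(expand_abbreviations("Trail Runner GR", "Zenith"))  # Trail Runner Green
print(expand_abbreviations("Day Pack GR", "Unknown"))     # Day Pack GR
```

Leaving an unknown code as-is is a deliberate choice: an unexpanded "GR" is less harmful than a title that confidently names the wrong color.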

The format of the product name may also vary among ****** or product groups within the same brand as well as among brands. Accounting for all these rules and their ongoing variations can eventually make a site's code harder to maintain, because layers of rules are difficult to manage and troubleshoot. Moreover, the more logic the template runs on the server side, the longer the time to first byte. Although this has not often been a major performance concern, in today's world of optimizing for Google's Core Web Vitals, we are counting and evaluating performance by the millisecond, so these template changes may need to be handled as part of a larger performance initiative.

Tip: Using title and H1 tags that are consistently “web-ready” not only makes it easier for search engines to understand your content but also often helps your own site search engine index and present the correct results to users.

The benefit of this approach is that you do not have to adjust the ETL or database update processes. Furthermore, a code change is usually much quicker to make than a database change, which often has dependencies that no one knows about.

However, if the value for the title is used in multiple places (for example, in an image alt attribute or link text), care may need to be taken to carry the rewriting logic through to those elements.
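One way to keep those elements consistent is to run the rewriting logic once and reuse its output for every field. In this sketch, `clean_name` is a hypothetical stand-in for your real substitution rules, and the `| Example Store` title suffix is an assumed site convention.

```python
from html import escape


def clean_name(raw: str) -> str:
    """Hypothetical stand-in for the template's rewriting rules: here it
    only expands the color code BLK and collapses extra whitespace."""
    return " ".join("Black" if token == "BLK" else token for token in raw.split())


def render_fields(raw_name: str, site_name: str) -> dict[str, str]:
    """Apply the rewriting once and reuse the HTML-escaped result wherever
    the name appears, so the title, H1, alt text, and link text match."""
    name = escape(clean_name(raw_name))
    return {
        "title": f"{name} | {escape(site_name)}",
        "h1": name,
        "img_alt": name,
        "link_text": name,
    }


fields = render_fields("Trail  Runner BLK", "Example Store")
print(fields["title"])
```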

Phase 3 – ETL

ETL (extract, transform, load) describes the process database administrators use to extract data from a source, transform it, and load it into the database. It may include data validation and other sanity checks before the data is imported. If, as an SEO consultant, you can get access to these elusive database admins and start to understand their ETL processes, it is usually best to apply the value substitution rules during ETL. ETL runs as a batch process, so the work happens before a visitor requests the page rather than while the page is being rendered.
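To make the idea concrete, here is a minimal batch ETL sketch using Python's built-in `sqlite3` module. It extracts raw names from a staging table, applies the substitution rules, skips empty names as a basic sanity check, and loads the results in a single transaction. The table names, columns, and rules are hypothetical. A production ETL would run against your actual database and tooling.

```python
import sqlite3

# Hypothetical substitution rules applied during the transform step.
SUBSTITUTIONS = {"BLK": "Black", "WHT": "White"}


def transform(name: str) -> str:
    """Expand known abbreviations token by token and collapse whitespace."""
    return " ".join(SUBSTITUTIONS.get(t, t) for t in name.split())


def run_etl(conn: sqlite3.Connection) -> int:
    """Extract raw names from staging, transform them, and load web-ready
    titles into products. Returns the number of rows loaded."""
    rows = conn.execute("SELECT sku, raw_name FROM staging").fetchall()  # extract
    loaded = []
    for sku, raw_name in rows:
        title = transform(raw_name)  # transform
        if not title:  # sanity check: skip names that are empty after cleanup
            continue
        loaded.append((sku, title))
    with conn:  # load inside one transaction, committed on success
        conn.executemany(
            "INSERT OR REPLACE INTO products (sku, title) VALUES (?, ?)", loaded
        )
    return len(loaded)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE staging (sku TEXT, raw_name TEXT)")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, title TEXT)")
conn.executemany("INSERT INTO staging VALUES (?, ?)",
                 [("sku-100", "Trail Runner BLK"), ("sku-200", "  ")])
print(run_etl(conn))
print(conn.execute("SELECT sku, title FROM products").fetchall())
```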

Phase 4 – Advanced ETL

If you have built a trusting relationship with the back-end developers and database admins and have demonstrated how their work has benefited the company, you can invest further in optimizing the data in the database with increasingly complex rules and logic. This optimization may also include data normalization, keeping keyword variations to a minimum, or cross-referencing location references (such as addresses and city names) with a third-party source (for example, a post office list).
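As an illustration of the kind of rules this phase might add, the sketch below collapses keyword variants to a single canonical form. It also fuzzy-matches city names against a reference list with `difflib.get_close_matches`. The variant table, the four-city list (a stand-in for a full third-party list), and the 0.8 similarity cutoff are assumptions to tune, not recommended values.

```python
from difflib import get_close_matches
from typing import Optional

# Hypothetical table collapsing keyword variations to one canonical form.
VARIANTS = {"grey": "Gray", "tshirt": "T-Shirt", "t-shirt": "T-Shirt"}

# Hypothetical stand-in for an authoritative third-party list (for example,
# a postal service's city list); a real pipeline would load the full list.
REFERENCE_CITIES = ["Dallas", "Fort Worth", "Houston", "San Antonio"]


def normalize_keywords(text: str) -> str:
    """Replace known variants (matched case-insensitively) with the
    canonical form; other tokens are left unchanged."""
    return " ".join(VARIANTS.get(t.lower(), t) for t in text.split())


def match_city(raw_city: str) -> Optional[str]:
    """Return the closest reference city, or None if nothing is close enough."""
    matches = get_close_matches(raw_city.strip().title(), REFERENCE_CITIES,
                                n=1, cutoff=0.8)
    return matches[0] if matches else None


print(normalize_keywords("Grey tshirt"))
print(match_city("houstn"))
print(match_city("Springfield"))
```

Cities that return `None` can be routed to manual review rather than guessed.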

There are many ways to optimize the title and heading tags on a web page; much of this process depends on knowledge of your audience's search behavior and of how content is managed and imported. SEO consultants are uniquely positioned to connect those dots, bring relevancy to your content, and continue to push your performance.

At Vizion Interactive, we have the expertise, experience, and enthusiasm to get results and keep clients happy! Learn more about how our SEO Audits, Local Listing Management, Website Redesign Consulting, and B2B digital marketing services can increase sales and boost your ROI. But don't just take our word for it; check out what our clients have to say, along with our case studies.