Google told us the site reputation abuse policy would go into effect on Sunday, May 5. On Monday evening, however, Danny Sullivan tweeted that manual actions were being handed out but the algorithmic component was not yet live.

As I write this, we have seen the first manual actions. Below, I share some thoughts on what we may see when the algorithmic component rolls out.

IMPORTANT: If your traffic is down in early May, it may not be related to the site reputation policies. Many sites impacted by the March Core Update are seeing further ranking changes. Your losses may well be related to the core update changes rather than the reputation abuse penalty. Here’s my algo update list, which should help further.

The community has shared lots of early reports of changes. We don’t know whether these sites were given manual actions to deindex these pages or whether they removed the pages themselves to avoid a penalty.

- Many large news websites are no longer in the top 10 for coupon terms they used to rank for. (Laura Chiocciora, Glenn Gabe, Andrew Shotland, Lily Ray, Carl Hendy, Aleyda Solis, Glen Allsopp)
- Sports Illustrated’s showcase directory, which focused on wellness products, is gone. (Vlad Rappoport)
- The changes may not have reached Europe and Asia yet. (Sjoerd Copier)
- Carl Hendy noted that in a sample of 2,500 coupon searches in Australia and the UK, just a couple of brands dominate. These will be interesting sites to watch.
- “Parasite” directories from some well-known sites like Outlook India, TimesUnion, and SFGate are disappearing. (Vlad Rappoport)
- Lily Ray reported a case where a publisher received a manual action despite having an in-house coupon team that sources coupons directly.

Let’s look at an example

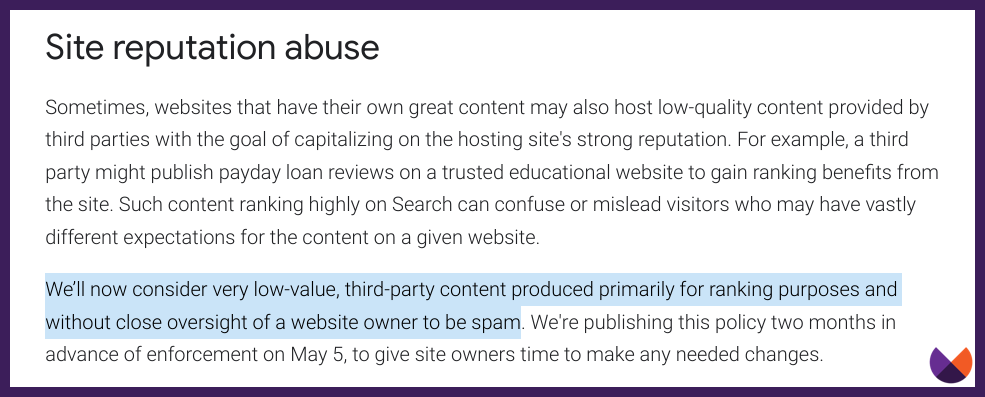

There is a lot of discussion about whether it’s fair for Google to decide if news sites can make money from coupons. Part of the wording in the spam policies is, “A news site hosting coupons provided by a third-party with little to no oversight or involvement from the hosting site, and where the main purpose is to manipulate search rankings.” (This update is not just about coupons…there’s more to it as well.)

This manual action is meant for situations where a business outside the news organization rents space on the news site so it can borrow that site’s authority to rank for coupon terms.

First, we’re going to look at why this manual action was given. Then I’d like to share my thoughts on the ethics of penalizing sites for having coupon subdomains.

Old Navy Coupons – LA Times’ page removed

For example, a search for “old navy coupons” used to show the LA Times at position 2 or 3. According to Ahrefs’ estimates, the page started to lose rankings at the same time the March 5, 2024 Core Update rolled out.

Today, this page does not rank at all. It is still in the index, however.

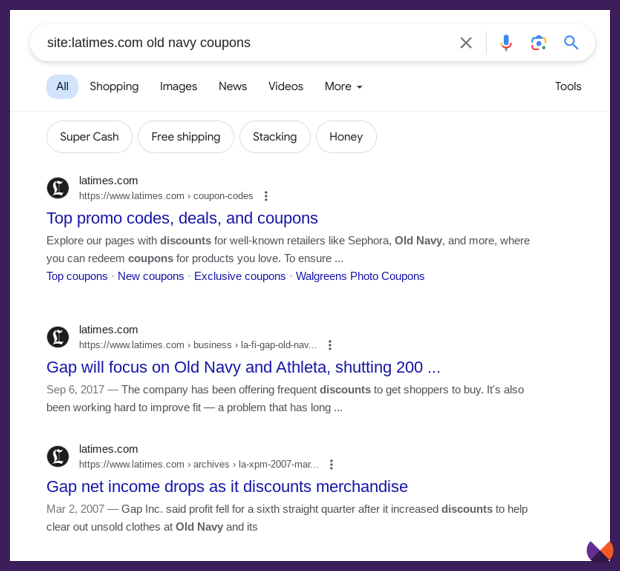

If I search for site:latimes.com old navy coupons, I see their main coupon page, and I see stories in the LA Times about Old Navy, but not the /old-navy coupon page that used to rank.

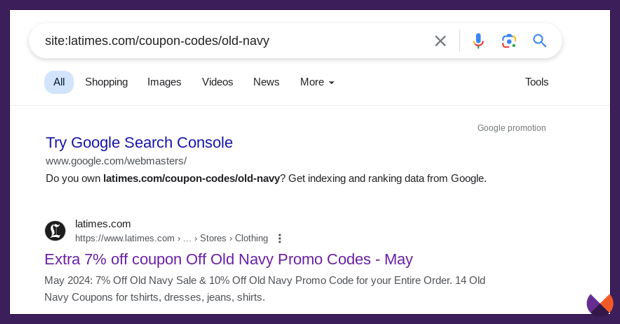

But if I do a site: search for the page itself, it is indeed in the index.

It’s indexed, but not ranking, which suggests a manual action is likely. Manual actions can deindex pages, but they can also cause severe ranking suppression, which is likely what happened here.

So why did the LA Times get penalized? Isn’t it their choice whether they show coupons to searchers? What are they doing wrong?

Why was the LA Times penalized?

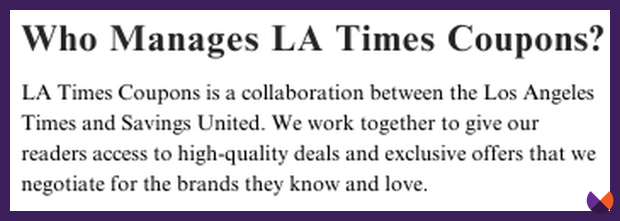

The problem in this case is that it’s not the LA Times that runs this coupon site. Rather, the coupon pages are a collaboration between the LA Times and Savings United.

It’s worth reading the LA Times’ page on coupons. It explains that they partner with Savings United, whose team vets and sources the coupons. They have relationships with specific brands like Home Depot and Priceline to provide coupons for their visitors.

Here’s more info on Savings United. Their about page says they are a “leader in couponing.” They have relationships with the LA Times, The Washington Post, The Telegraph, The Independent, Time and more.

In their March Core Update information, Google told us they’d be taking action on sites that host third-party content with the goal of capitalizing on the hosting site’s strong reputation.

Is the content very low-value? That’s difficult to judge. For me, at least. Not without more investigation.

Is it third-party content produced primarily for ranking purposes and without close oversight of the website owner (the LA Times)? Yes. This content was produced by Savings United, who made a deal with the LA Times to provide them with coupons.

Who is Google to tell the LA Times they can’t have a coupon section?

This action will have a devastating impact on several businesses. Savings United says the coupon and comparison market is worth over $90 billion. I expect that these pages make millions for all parties involved, mostly from their performance in organic search.

This act by Google likely affected jobs and revenue for many. It is also another blow to journalism, as these relationships provided significant income.

Google is certainly not telling publishers they can’t have such agreements or that they can’t publish coupon pages. They can publish them for their audience. But when Google’s helpful content documentation asks, “Is the content primarily made to attract visits from search engines?”, I believe the answer here is yes.

The problem here is that the reputation of the publisher is helping these pages rank far better than they should.

Let me share my analogy about the Librarian. It changed how I think of Search. And I think it will help explain my thoughts on what is happening, and perhaps about to unfold as we see the algorithmic component of this update.

A searcher walks into a library and asks: “Can I have some books about Old Navy coupons?”

Google’s mission is to organize the world’s information and make it universally accessible and useful. Each page on the web is like a book in the library that a searcher can check out. For years, we have worked hard to understand what it takes to get the Librarian to recommend our books. The Librarian hasn’t memorized everything in our books. Rather, they know the title, the headings, the topics discussed, and a few other things, including recommendations from other people about each book (links). The Librarian uses all of this information to decide how to order the books in the pile they recommend to a searcher.

Google’s algorithms are mathematical formulas that produce a ranking score. They started off using just a few signals, the most important of which was PageRank: links, or the recommendations of others. As Google developed its machine learning systems, those systems learned to use more of the signals available to them.
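To make the idea of “links as recommendations” concrete, here is a minimal sketch of the classic, publicly documented PageRank idea, computed by power iteration. It illustrates the original concept only, not Google’s current ranking code. The damping factor of 0.85 is the value commonly cited for the original formula, and the four-page link graph is made up for illustration.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank by power iteration (illustrative sketch).

    `links` maps each page to the pages it links to. Scores sum to 1.
    Pages with no outgoing links spread their score evenly over all pages.
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start with equal scores

    for _ in range(iterations):
        # Every page first receives the "random jump" share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Each link passes an equal slice of this page's score on.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Dangling page: distribute its score across every page.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical link graph: "c" is recommended (linked to) the most.
    web = {"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}
    scores = pagerank(web)
    for page in sorted(scores, key=scores.get, reverse=True):
        print(page, round(scores[page], 3))
```

In this toy graph, page “c” collects links from three pages and ends up with the highest score, while “d”, which nobody links to, keeps only the baseline share. That is the sense in which links act as recommendations.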

It turns out that while PageRank is still a good signal for predicting what’s likely to be helpful, many others can be used as well. If you haven’t yet dug into Google’s Life of a Click presentation, here’s a good place to start learning how user data can be turned into signals that help indicate whether a search has been helpful.

Librarian: “OK, so, we’ve got this book which is from Old Navy itself…and then we’ve got Groupon. Lots of people like Groupon and find this useful. There’s this one from the LA Times. And also….”

Lots of people likely choose the LA Times book. After all, it’s the LA Times. And people likely use the coupon and find it helpful. This produces signals that the Librarian can use in their decision on what to recommend for future searches like this.

The thing is, searchers are much less likely to have chosen this book if the Librarian told them it was from Savings United.

By ranking this third-party coupon page highly, Google is sending the user to something they did not expect. The Librarian recommended a book with the LA Times on the cover, but inside was content run by a company that was not the LA Times but has a financial arrangement with it.

Predictions on the upcoming algorithmic action

Danny Sullivan says the algorithmic actions are coming.

It’s possible that this could be the start of a significant change.

I have been saying for years that links are losing their importance in Google’s rankings. With every update, the machine learning systems learn to use more of the world’s information in their decision making.

In February of 2024, Google announced a new breakthrough in creating AI architecture. Gemini 1.5 uses a new type of Mixture of Experts architecture which makes machine learning systems much more efficient even when handling vast amounts of data. While Google doesn’t specifically mention that this technology has been used to improve search, I don’t see how it would not. Search runs on multiple deep learning systems including RankBrain, RankEmbed BERT and DeepRank. Vector search is the technology behind Google Search. These systems were all likely improved with this architecture.
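For readers unfamiliar with the term, here is a toy sketch of the general mixture-of-experts idea with top-k routing. A gating function scores every expert for a given input, but only the k best-scoring experts actually run, and their outputs are blended. Skipping the other experts is where the efficiency comes from. This is a generic textbook illustration with made-up experts and gate weights. It is not Gemini’s architecture, and it says nothing about how, or whether, Google Search uses it.

```python
import math
from typing import Callable, Sequence

# Hypothetical "expert": any function mapping an input vector to an output vector.
Expert = Callable[[Sequence[float]], list[float]]


def softmax(xs: Sequence[float]) -> list[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x: Sequence[float], experts: list[Expert],
                gate_weights: list[list[float]], k: int = 2
                ) -> tuple[list[float], list[int]]:
    """Sparse mixture-of-experts forward pass with top-k routing (toy sketch).

    gate_weights[i] is a weight vector giving expert i's routing score
    (a dot product with x). Only the k highest-scoring experts are evaluated.
    """
    scores = [sum(w * xi for w, xi in zip(weights, x)) for weights in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    mix = softmax([scores[i] for i in top])  # blend weights for chosen experts

    outputs = [experts[i](x) for i in top]  # the other experts never run
    dim = len(outputs[0])
    blended = [sum(w * y[j] for w, y in zip(mix, outputs)) for j in range(dim)]
    return blended, top


if __name__ == "__main__":
    # Four made-up experts that simply scale their input by 1, 2, 3 and 4.
    experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]
    output, chosen = moe_forward([0.5, 0.2], experts, gates, k=2)
    print("experts used:", chosen, "output:", output)
```

With four experts and k=2, each input pays for only half of the expert computation. Real systems apply the same routing idea at a much larger scale.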

Google told us the March Core update marks an evolution in how they identify the helpfulness of content.

If my theory is true, then Gemini 1.5’s new architecture greatly improved Google’s ability to use the entirety of the signals available to them and determine what is likely to be helpful to a searcher. This would mean that content that has done well based on link building will suffer. Instead of using links to measure reputation, the new systems would be able to use a multitude of signals that could indicate whether content is likely to be helpful. (Why the manual action then, if algorithms can determine authority? Manual actions are given out when algorithms can’t do the job Google wants. In this case, I suspect that the new signals that indicate reputation would still rank these coupon pages highly – possibly even more highly than they currently do, because of the reputation of the LA Times.)

Google told us that in 2022 they began tuning their ranking systems to reduce unhelpful content in Search.

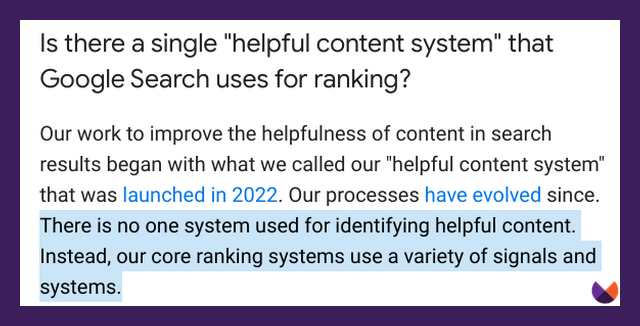

The old documentation for the helpful content system told us Google had created a system that generates a signal for use in Search. That signal indicated whether or not a site tends to have helpful content; if not, the entire site could be demoted. The new documentation says there’s no longer one system or signal. Instead, a variety of signals and systems assess the helpfulness of individual pages. That said, some site-wide signals are still considered.

If I’m right, it may be much more than the coupon industry that sees significant losses as the algorithmic component of the site reputation abuse policy is enforced. We may find that a lot of content that has ranked well because of links starts to do poorly.

We are in for some interesting times.

Also, if you subscribe to the theory that Google runs algorithm updates whenever I travel, next week should be interesting: I’m not only traveling, but traveling TO Google, as I’ve been invited to Google I/O.

More reading

The site reputation abuse documentation

My thoughts on the changes Google’s systems introduced with the March Core Update

Where to find me

Stay up to date with my weekly newsletter.

Join my community discussing Search and AI in the Search Bar